Vercel Releases Agent Skills

Vercel has quietly but meaningfully laid a new stone in the foundation of the agentic web: Agent Skills, a package-like collection of reusable “skills” that AI coding agents can install and call during development workflows. Instead of asking an LLM to invent best practices on the fly, teams can now give agents structured, versioned, production-grade playbooks (for example, React and Next.js performance rules) that the agent can reference, test against a codebase, and use to propose grounded fixes. This moves agent assistance from ad-hoc advice toward verifiable, repeatable automation.

What are Agent Skills?

Agent Skills are packaged rulebooks, checks, utilities, and small programs formatted so that compatible AI agents (coding assistants such as Codex, Claude Code, Cursor, OpenCode and others) can discover, install, and use them during normal coding flows. Think of an Agent Skill as a tiny, purpose-built library of expertise — e.g., “React best practices,” “web interface guidelines,” or “claimable Vercel deployments” — that an agent can call when reviewing code, authoring PRs, or suggesting optimizations. The skills are distributed and installed via a simple developer experience that feels familiar to JavaScript developers: a single command (for example npx add-skill vercel-labs/agent-skills) fetches the skill and makes it available to the agent.

At its core, a skill contains:

- Guidelines and rules (human-authored best practices, example fixes).
- Tests or checks an agent can run to validate whether a rule applies to a codebase.
- Action templates: suggested diffs, configuration changes, or deployment commands the agent can propose or execute (subject to human approval).
- Metadata describing compatibility, versioning, and provenance (important for trust and governance).
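
The four parts listed above can be pictured as a small data model. The sketch below is purely illustrative: the `Rule` and `Skill` classes, every field name, and the sample `no-full-lodash-import` rule are hypothetical and are not taken from the actual Agent Skills format. Python is used here only to keep the example short.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class Rule:
    rule_id: str
    guideline: str                      # human-authored best practice
    applies: Callable[[str], bool]      # check: does the rule fire on this source?
    fix_hint: str                       # action template shown to the reviewer


@dataclass(frozen=True)
class Skill:
    name: str
    version: str                        # metadata: versioning
    author: str                         # metadata: provenance
    compatible_agents: Tuple[str, ...]  # metadata: compatibility
    rules: Tuple[Rule, ...]


# Hypothetical rule: flag importing all of lodash instead of one function.
full_lodash = Rule(
    rule_id="no-full-lodash-import",
    guideline="Import only the lodash functions you use to keep bundles small.",
    applies=lambda source: 'from "lodash"' in source,
    fix_hint='Replace with a per-function import such as "lodash/debounce".',
)

# Hypothetical skill that bundles the rule with its metadata.
example_skill = Skill(
    name="example-performance-rules",
    version="0.1.0",
    author="platform-team",
    compatible_agents=("example-agent",),
    rules=(full_lodash,),
)


def matching_rules(skill: Skill, source: str) -> List[str]:
    """Return the IDs of every rule in the skill that fires on the source."""
    return [rule.rule_id for rule in skill.rules if rule.applies(source)]
```

Calling `matching_rules(example_skill, source)` on a file that imports the whole library returns the single rule ID, while a per-function import returns an empty list.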

Why this matters (short version)

AI coding assistants are extremely powerful — but also inconsistent. A lot of their errors come from two sources: hallucinated “fixes” and lack of repository-specific grounding. By giving agents a curated, versioned source of truth for domain knowledge (for example, 40+ React and Next.js performance rules compiled from Vercel’s engineering experience), Agent Skills reduce hallucination, increase repeatability, and make agent suggestions auditable and testable. This is a crucial step toward production-safe agent automation.

How Agent Skills work in practice

Install & discover

A developer adds a skill to their project (or to their coding agent) via a single command. That skill is then discoverable by compatible agents during typical coding interactions — code reviews, PR generation, or interactive prompts.

Example (the documented install pattern): npx add-skill vercel-labs/agent-skills

Once installed, the agent can run a local checklist from the repository: scan components, flag problematic patterns (like heavy client-side imports or cascading useEffect chains), and either propose diffs or open an issue/PR with a recommended change.

Run-time behavior

When the agent is evaluating code, it consults installed skills to:

- Detect whether a rule applies (static checks, simple heuristics, or tests).
- Explain why a pattern is problematic (benefit: human-understandable reasoning grounded in a documented rule).
- Propose or generate a safe fix (small diffs, configuration changes).
- Validate the fix when possible (unit tests, lint passes, or lightweight performance checks).

This interaction makes agent output much more actionable and less speculative.
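
The detect, explain, propose, validate loop can be sketched in a few lines. This is a toy under stated assumptions: the rule ID, the explanation text, and the regex-based check are invented for illustration, and a real skill would likely rely on richer analysis than a single pattern.

```python
import re
from typing import Dict, Optional, Union

# Hypothetical rule, invented for this sketch: images should declare a loading strategy.
RULE_ID = "img-missing-loading-attr"
RULE_WHY = "Images without a loading attribute are fetched eagerly, which can delay first render."
# Matches an <img tag whose attribute list contains no loading= attribute.
PATTERN = re.compile(r"<img\b(?![^>]*\bloading=)")


def detect(source: str) -> bool:
    """Step 1: does the rule apply to this source?"""
    return PATTERN.search(source) is not None


def propose_fix(source: str) -> str:
    """Step 3: produce a small, mechanical rewrite."""
    return PATTERN.sub('<img loading="lazy"', source)


def review(source: str) -> Optional[Dict[str, Union[str, bool]]]:
    """Run detect, explain, propose, then validate by re-running the check."""
    if not detect(source):
        return None
    fixed = propose_fix(source)
    return {
        "rule": RULE_ID,
        "explanation": RULE_WHY,           # step 2: grounded in the rule text
        "proposed_source": fixed,
        "validated": not detect(fixed),    # step 4: the rule no longer fires
    }
```

The validation step here only re-runs the same check on the proposed source; in practice a team would also run its lint and test suite before accepting the change.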

Example skill: react-best-practices

Vercel packaged a “React best practices” repository for agents that contains 40+ prioritized rules across categories like bundle size, data fetching, hydration, and render timing. When a coding agent with that skill inspects a Next.js page, it can point to a particular rule, give the failing example, and propose a tested change — not just an ungrounded suggestion.

How it fits into Vercel’s agent and AI SDK strategy

Agent Skills do not live in isolation. They are a practical complement to Vercel’s broader agentic tooling:

- Vercel Agent, Vercel’s integrated agent for codebases, benefits from skills as they become a consistent source of company-level or framework-level best practice. The agent can propose validated improvements rather than generic suggestions.
- AI SDK advances: Vercel’s AI SDK (recently updated through several major iterations) introduced agent tooling, tool-calling, and structured outputs. Agent Skills slot into that SDK ecosystem as discoverable toolkits agents can call to improve confidence and reduce risk when making code changes.

This layering — SDK for running agents, skills for domain knowledge — creates a modular stack where teams can curate internal skills (company conventions, security rules) and combine them with community skills (Vercel’s React rules) to build trusted agent workflows.

Developer experience: quick, familiar, and auditable

Vercel designed the experience to feel familiar to web developers:

- Single-command installation: mirrors npm/yarn ergonomics.
- Repo-local skills: skills installed into a repository can be reviewed and versioned alongside code.
- Human-in-the-loop: agents typically propose changes; human review is still the default approval step.
- Traceability: each suggestion references a skill and a specific rule, making suggestions auditable.

For teams that have worried about “agent drift” (agents gradually producing incompatible or insecure changes), this model gives a governance story: codified rules, versioned skills, and human approvals. A skill release cycle also allows Vercel or internal teams to push updates (e.g., new rules or revised advice) and have agents pick them up deterministically.
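
Traceability is easier to picture with a concrete record. The sketch below assumes a hypothetical `Suggestion` shape and a made-up `skill@version#rule` citation format; neither is defined by Vercel, they simply show how a suggestion could carry enough context for an audit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Suggestion:
    skill: str
    skill_version: str
    rule_id: str
    file: str
    line: int
    message: str

    def citation(self) -> str:
        """Stable reference a reviewer can use to look up the exact rule."""
        return f"{self.skill}@{self.skill_version}#{self.rule_id}"

    def as_review_comment(self) -> str:
        """Render the suggestion as a single review-comment line."""
        return f"{self.file}:{self.line} [{self.citation()}] {self.message}"
```

Because the version is part of the citation, a reviewer can tell whether a suggestion came from the rule text as it stood before or after a skill update.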

Enterprise & security implications

Agent Skills help address core enterprise concerns:

- Compliance and consistency: company-specific coding standards or security checks can be packaged as private skills and enforced automatically by agents during PR creation.
- Provenance: skills include metadata about their origin and version so reviewers can see who authored a rule and when it changed.
- Least privilege & control: organizations can restrict which skills an agent can access and whether the agent can auto-commit changes or only open PRs for human review.

Because skills are essentially code (with structured rules and tests), they can be integrated into existing CI/CD, security scanning, and SSO controls — making them easier to manage inside regulated environments.
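
A least-privilege policy of the kind described above might look like the following sketch. `AgentPolicy` and `plan_action` are hypothetical names, and the three outcomes are an assumption about how a team could model the decision, not a documented Vercel control.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class AgentPolicy:
    allowed_skills: FrozenSet[str]
    allow_auto_commit: bool = False   # default: open a PR for human review


def plan_action(policy: AgentPolicy, skill: str) -> str:
    """Decide what the agent may do with a fix produced by the given skill."""
    if skill not in policy.allowed_skills:
        return "blocked"
    return "auto-commit" if policy.allow_auto_commit else "open-pr"
```

Keeping `allow_auto_commit` off by default means a skill that is merely allowed still routes its changes through human review.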

The ecosystem effect: skills as the “npm” for agents

Several observers have framed Agent Skills as “npm for AI agents” or an open registry of capabilities agents can consume. That’s a useful mental model: rather than training agents each time or embedding vast amounts of framework knowledge into a single model, the community can produce small, high-quality skill packages that agents compose at runtime. The result should be faster iteration, easier governance, and less duplication of expert knowledge across teams and agents.

This model also enables:

- Skill marketplaces: teams could publish private or public skills (UI guidelines, accessibility checks, cloud deployment best-practices).
- Specialization: small vendors or internal teams can publish very focused skills (e.g., “React Native bundling checks” or “Vercel claimable deployments”).
- Composability: agents can combine multiple skills when tackling complex tasks (for example: security skill + performance skill + company style guide).
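
Composability can be sketched as running several independent checks and merging their findings. The three skill functions, their string-matching heuristics, and the severity ranking below are all hypothetical stand-ins chosen to keep the example small.

```python
from typing import Callable, Dict, List

Finding = Dict[str, str]
SkillCheck = Callable[[str], List[Finding]]

# Lower rank sorts first, so the most severe findings lead the report.
SEVERITY_RANK = {"high": 0, "medium": 1, "low": 2}


def security_skill(source: str) -> List[Finding]:
    if "dangerouslySetInnerHTML" in source:
        return [{"skill": "security", "severity": "high",
                 "message": "Raw HTML injection needs sanitizing."}]
    return []


def performance_skill(source: str) -> List[Finding]:
    if 'from "lodash"' in source:
        return [{"skill": "performance", "severity": "medium",
                 "message": "Import individual lodash functions."}]
    return []


def style_skill(source: str) -> List[Finding]:
    if "var " in source:
        return [{"skill": "style-guide", "severity": "low",
                 "message": "Use const or let instead of var."}]
    return []


def run_skills(source: str, skills: List[SkillCheck]) -> List[Finding]:
    """Run every skill on the source and order the findings by severity."""
    findings = [f for skill in skills for f in skill(source)]
    return sorted(findings, key=lambda f: SEVERITY_RANK[f["severity"]])
```

Each skill stays unaware of the others, so a team can add or remove one without touching the rest; only the merge step knows about ordering.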

Early examples and signals

Since the release, multiple skill packages and guides have appeared:

- Vercel’s react-best-practices repository, compiling 40+ React/Next.js rules for agent consumption.
- A “web interface guidelines” skill that installs as an agent command to check UI accessibility, keyboard support, and animations. Developers can add it with a short install script and run a /web-interface-guidelines agent command.
- Community posts and explainers highlight the simple install experience and immediate benefit for coding agents that were otherwise prone to outputting high-latency or heavy client bundles.

These early signals show a mix of Vercel-published skills and third-party guides that help teams adapt agent workflows quickly.

Real-world impact: faster, safer fixes — and new roles

Vercel’s broader agent push (including internal agent experiments) already suggests real operational impacts: automating repeatable selection and remediation tasks, reducing menial review work, and allowing humans to shift to higher-value tasks. Agent Skills accelerate this by giving agents vetted expertise they can act on reliably. Industry reporting about Vercel’s internal agent experiments shows the company using agentic workflows for sales and other operations — hinting that coding agents with curated skills could similarly reduce repetitive developer tasks while keeping humans in the loop.

This technical shift also creates organizational change:

- Agent managers: engineers who design, version, and govern skills.
- Skill authors: domain experts who translate best practices into testable rules.
- Agent policy & audit teams: to ensure skills adhere to compliance and security standards.

Limitations and open questions

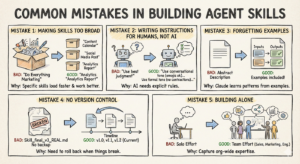

Agent Skills are a meaningful step, but they do not solve every problem:

- Compatibility: agents must support the Agent Skills spec to use them. Adoption by major agent vendors will determine how universal the experience becomes.
- Complex fixes: skills are best for rule-based checks and small diffs. Larger architectural changes or feature-level design still need human architects.
- Maintenance burden: skills become another artifact to maintain. Teams need processes to update, test, and deprecate rules, especially as frameworks evolve.
- Security of third-party skills: pulling code/rules into CI raises supply-chain concerns. Enterprises will want private registries and vetting before trusting external skills.

Despite these caveats, the model is promising because it aligns with how engineering organizations already manage knowledge: codified, versioned playbooks integrated into automation.
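
One way to narrow the supply-chain concern noted above is to pin a digest of each skill at review time and refuse content that no longer matches. The sketch below uses only Python's standard `hashlib` and `hmac` modules; the function names and the idea of storing the pin alongside the repository are assumptions, not part of any Agent Skills tooling.

```python
import hashlib
import hmac


def sha256_hex(content: bytes) -> str:
    """Digest recorded when a human reviews and approves the skill."""
    return hashlib.sha256(content).hexdigest()


def load_skill_if_trusted(content: bytes, pinned_sha256: str) -> str:
    """Return the skill text only if it matches the reviewed digest."""
    if not hmac.compare_digest(sha256_hex(content), pinned_sha256):
        raise ValueError("skill content does not match the reviewed digest")
    return content.decode("utf-8")
```

A pin like this detects silent changes to an already vetted skill, but it does not replace the initial vetting itself.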

What to try today (practical checklist)

If you’re a developer or engineering lead curious to pilot Agent Skills, here is a short ramp-up plan:

- Inventory: identify repeatable checks your team performs during reviews (perf, accessibility, linting).
- Adopt a public skill: install a trusted Vercel skill (for instance, react-best-practices) into a fork or staging repo and run it in agent review mode.
- Author a private skill: turn one internal rule (naming conventions, security header enforcement) into a skill and test it locally with your coding agent.
- Integrate with CI: wire skill outputs into PR checks so suggested fixes are visible in your normal workflow.
- Govern: create an approval path for skill updates (reviewer + automated test suite) to avoid surprise changes.
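
For the CI step in the checklist above, a small gate script is often enough to start with. The sketch assumes findings arrive as dictionaries with hypothetical `severity`, `rule`, and `file` keys; the actual output format of an agent or skill may differ, so treat the shape as a placeholder.

```python
from typing import Dict, List, Tuple


def ci_exit_code(findings: List[Dict[str, str]],
                 blocking: Tuple[str, ...] = ("high",)) -> int:
    """Return 1 (fail the check) if any finding has a blocking severity."""
    return 1 if any(f["severity"] in blocking for f in findings) else 0


def summarize(findings: List[Dict[str, str]]) -> str:
    """Build the text shown in the PR check output."""
    if not findings:
        return "No skill findings."
    return "\n".join(
        f'{f["severity"].upper()}: {f["rule"]} in {f["file"]}' for f in findings
    )
```

Starting with only high-severity findings as blocking lets a team see lower-severity suggestions in the PR without failing builds during the pilot.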

The future: a composable, verifiable agent web

Agent Skills point to a future where the web is not just static pages and APIs — it’s a composition of specialized agents operating with curated skills. When agents can reliably call small, auditable packages of domain knowledge, they become safer and more trustworthy tools for shipping production code. The skill/agent model promises a win-win: teams get faster suggestions and partially automated fixes, while organizations retain control and traceability through versioned skills and human approvals.

Vercel’s release is an early but meaningful step in that direction: packaging a decade of React and Next.js advice into agent-ready formats, publishing installable skills, and tying those capabilities into its agent ecosystem and AI SDK. If the community rallies around open, auditable skill registries and vendors build compatible agents, we’ll see steady progress toward agentic tooling that’s both powerful and predictable.

Conclusion

Vercel’s Agent Skills are more than a marketing phrase — they’re a pragmatic architecture for making AI coding assistants reliable, auditable, and composable. By packaging expertise (performance playbooks, UI guidelines, deployment flows) into discoverable, installable skills, Vercel is helping bridge the trust gap between speculative LLM advice and production-safe automation. For teams building with React, Next.js, or operating on Vercel, skill-driven agents offer an immediate productivity win; for the broader ecosystem, they hint at a new center of gravity: small, verifiable modules of expertise that agents can assemble to solve real engineering problems.
