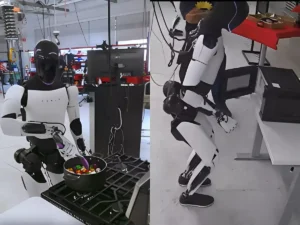

Updates to Tesla Optimus: Improved Outdoor Autonomy and Dexterity

Tesla’s humanoid robot, Optimus, has been evolving in a very “Tesla-like” way: fast iteration, highly shareable demos, and a strong emphasis on neural-network-driven autonomy rather than slow, scripted robotics. Two themes have stood out in recent Optimus updates and demonstrations:

- Better outdoor autonomy: walking on uneven, real-world terrain with less reliance on teleoperation (Teslarati).
- Better dexterity: hands, sensing, and manipulation improving from “demo-friendly” tasks toward practical, repeatable work (Electronic Specifier).

This article breaks down what Tesla showed, what likely changed under the hood, why these two upgrades matter more than flashy choreography, and what still has to be solved before Optimus becomes a reliable worker in factories—or eventually outside them.

Why outdoor autonomy and dexterity are the two “make-or-break” capabilities

Humanoid robots look human-shaped, but they don’t get “human-level usefulness” automatically. In practice, most useful work in the physical world comes down to two difficult problems:

1) Moving safely through messy environments

Factory floors are structured; sidewalks, slopes, gravel, mulch, wet ground, cluttered yards—those are not. Walking outdoors isn’t just “walking, but outside.” It’s constant micro-adjustments, uncertain friction, uneven contact, and surprises.

Tesla highlighted Optimus walking on graded, mulch-covered hills, exactly the kind of surface where traction and stability become unpredictable (Teslarati).

2) Manipulating objects reliably

A robot can walk perfectly and still be useless if it can’t grasp, reposition, and use tools without dropping them. Conversely, a robot with great hands but poor locomotion can’t reach work sites safely.

That’s why Optimus updates around hands, tactile sensing, and real-terrain walking are more significant than any single PR-friendly trick. Tesla itself frames Optimus as a general-purpose, bipedal, autonomous humanoid requiring stacks for balance, navigation, perception, and interaction (Tesla). That last word, “interaction,” is basically dexterity at scale.

What Tesla showed: Outdoor walking that’s closer to real autonomy

One of the most discussed Optimus moments came from a video shared by Tesla and amplified by Elon Musk and Tesla’s Optimus engineering leadership: Optimus walking up and down mulch-covered hills with graded, variable ground (Teslarati).

The key claim: limb control via neural nets, not teleoperation

According to reporting that summarized Musk’s commentary, Optimus was walking on highly variable ground using neural networks to control each limb, rather than being remotely “puppeteered” (Teslarati).

That distinction matters. Teleoperation can make a robot look capable for a clip. Neural control—especially on unstable terrain—means Tesla is pushing toward a system that can generalize across conditions and improve via training.

“Blindfolded” walking and why that’s both impressive and a warning sign

Additional context reported from Tesla’s Optimus leadership indicated the robot was “technically not able to see” during those terrain-walking runs; that is, it was balancing without using video (Teslarati).

On one hand, it’s impressive: stabilizing on rough ground without a full visual control loop suggests strong proprioception (joint sensing) and robust balance policies.

On the other hand, it’s also a reminder that the system still has clear next steps. In the same coverage, Tesla’s team pointed to plans like:

- adding vision for better planning ahead,
- making the gait more natural on rough terrain,
- improving responsiveness to velocity/direction commands,
- and learning how to fall to minimize damage and stand back up (Teslarati).

If you read between the lines, Tesla is essentially describing the “full outdoor autonomy checklist” for humanoids:

- perception (seeing obstacles early),
- planning (choosing safe footholds),
- control (executing stable steps),
- recovery (handling slips and stumbles),
- and durability (surviving inevitable falls).

What likely improved in Optimus to enable better outdoor autonomy

Tesla hasn’t published a full technical paper on Optimus’ locomotion stack, but the demonstrations and official positioning let us infer the main buckets of progress—without over-claiming specifics.

1) Better balance + contact handling

Uneven terrain exposes foot placement errors instantly. If Optimus can traverse graded mulch, it likely has improved:

- joint torque control,
- foot contact estimation,
- and “disturbance rejection” (recovering when the ground behaves unexpectedly).

The public clips, and the commentary around slipping and recovery, show that stability isn’t perfect, but the robot can keep moving after instability events, which is exactly the kind of progress you want to see (The Times of India).
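To make “disturbance rejection” concrete, here is a minimal Python sketch. It is not Tesla’s controller: it uses a linearized inverted pendulum (a common toy model for a standing robot) that receives a sudden shove, with a simple PD feedback law pulling it back upright. The gains, pendulum length, and push size are all illustrative assumptions.

```python
def simulate_push_recovery(kp=120.0, kd=20.0, push=0.5, dt=0.002, steps=2000):
    """Simulate a pushed, linearized inverted pendulum under PD feedback.

    Returns (final lean angle in rad, peak absolute lean angle in rad).
    All parameter values are assumptions chosen for illustration.
    """
    g, length = 9.81, 1.0      # assumed gravity (m/s^2) and pendulum length (m)
    theta, omega = 0.0, push   # start upright with an angular-velocity kick (rad/s)
    peak = 0.0
    for _ in range(steps):
        # PD feedback, expressed directly as a corrective angular acceleration.
        control = -kp * theta - kd * omega
        # Gravity destabilizes (g/length * theta); feedback must overcome it.
        alpha = (g / length) * theta + control
        omega += alpha * dt    # semi-implicit Euler integration
        theta += omega * dt
        peak = max(peak, abs(theta))
    return theta, peak

final_angle, peak_angle = simulate_push_recovery()
free_angle, _ = simulate_push_recovery(kp=0.0, kd=0.0)  # feedback switched off
print(f"with feedback: final={final_angle:.6f} rad, peak={peak_angle:.3f} rad")
print(f"without feedback: final={free_angle:.1f} rad (model diverges)")
```

With the assumed gains, the lean angle stays within a few hundredths of a radian and settles back to zero. With the feedback switched off, the same push grows without bound. A learned policy on a real humanoid is far more complex, but the job it has to do is the same.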

2) Faster, more reliable inference on embedded compute

To walk dynamically, the robot must run control policies at high frequency. Tesla’s AI & Robotics positioning emphasizes efficient inference hardware and autonomy at scale across vehicles and robots (Tesla).

Even without exact numbers, it’s reasonable to treat Optimus as part of Tesla’s broader philosophy: push neural inference down onto efficient onboard compute, then iterate with data and training.
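“High frequency” translates directly into a time budget: at a control rate of f Hz, sensing, inference, and actuation must all fit inside 1000/f milliseconds. The Python sketch below does that arithmetic. The 500 Hz rate and the per-stage latencies are hypothetical numbers for illustration, not published Optimus figures.

```python
def control_budget_ms(rate_hz: float) -> float:
    """Time available per control step, in milliseconds."""
    return 1000.0 / rate_hz

def slack_ms(rate_hz: float, stage_latencies_ms: dict) -> float:
    """Budget minus total stage latency; negative means the loop misses its deadline."""
    return control_budget_ms(rate_hz) - sum(stage_latencies_ms.values())

# Hypothetical per-stage latencies (ms), chosen only to illustrate the arithmetic.
stages = {"read_sensors": 0.3, "policy_inference": 1.0, "send_commands": 0.2}

print(control_budget_ms(500))       # 2.0 ms per step at an assumed 500 Hz
print(slack_ms(500, stages) > 0)    # True: 1.5 ms of work fits in 2.0 ms
print(slack_ms(1000, stages) > 0)   # False: the same work misses a 1.0 ms budget
```

The point of the exercise is that doubling the control rate halves the room left for the neural network, which is why efficient onboard inference matters so much for legged robots.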

3) Training approach: from staged demos to “daily walks”

The framing of regular “walk” clips suggests a loop: run robots often, collect failure modes, retrain, deploy. That’s closer to how Tesla improves vehicle autonomy (ship → collect edge cases → retrain), even though robotics environments are physically harsher.

The dexterity upgrade: why hands matter more than faces or voices

Walking gets the headlines. Hands determine usefulness.

Tesla’s Gen 2 Optimus video coverage emphasized two manipulation-related upgrades:

- advanced tactile sensing, and
- improved hands/actuators enabling delicate handling, like picking up an egg (Electronic Specifier).

Gen 2’s tactile sensing as a “real work” signal

A humanoid that can hold a fragile object without crushing it is showing something important: closed-loop control based on touch/force feedback, not just scripted motion.

Electronic Specifier’s coverage of Optimus Gen 2 specifically notes “advanced tactile sensing” and handling delicate objects like eggs, enabled by Tesla-designed actuators and sensors.

This is exactly the kind of capability that moves a robot from “cool demo” toward “can do repetitive light manufacturing steps, sorting, packing, and simple assembly support.”
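A toy version of a touch-driven grip loop might look like the following Python sketch. It is an assumption-laden illustration, not Tesla’s method: the slip sensor is simulated by a simple force threshold, and the force values are made up. The idea is just that the controller tightens only while the sensor reports slip, and gives up rather than exceed a damage limit.

```python
from typing import Callable, Optional

def close_grip(is_slipping: Callable[[float], bool], max_force_n: float,
               start_n: float = 0.2, step_n: float = 0.1) -> Optional[float]:
    """Raise grip force in small steps while slip is sensed.

    Returns the holding force in newtons, or None if holding the object
    would require exceeding max_force_n (the assumed damage limit).
    """
    force = start_n
    while is_slipping(force):
        force += step_n
        if force > max_force_n:
            return None  # back off instead of crushing the object
    return force

def make_slip_sensor(min_hold_force_n: float) -> Callable[[float], bool]:
    """Simulated tactile sensor: reports slip whenever grip force is below a threshold."""
    return lambda force_n: force_n < min_hold_force_n

# Hypothetical objects: one holdable within the limit, one not.
fragile = close_grip(make_slip_sensor(1.0), max_force_n=3.0)
too_heavy = close_grip(make_slip_sensor(5.0), max_force_n=3.0)
print(fragile, too_heavy)
```

A scripted motion would close to a fixed position regardless of what the fingers feel. The feedback version stops just above the force where slip ends, which is the behavior an egg-handling demo implies.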

Degrees of freedom: the path to tool use

In general robotics terms, the more independently controllable joints (degrees of freedom), the more nuanced the grasp and in-hand manipulation can be. In Tesla/Optimus discourse, hand DoF and actuator count frequently come up as shorthand for “dexterity potential.”

However, it’s important to separate:

- what’s been demonstrated (e.g., tactile manipulation tasks like handling fragile objects), from
- what’s been claimed or targeted (e.g., ambitious future actuator counts for next-gen hands).

For example, coverage of Tesla’s next hand targets has included a Musk claim that future Optimus hands/forearms could involve a much higher actuator count, reported as “50 actuators” and later clarified as distributed across forearm and hand (Humanoids Daily). Treat that as an ambition or target, not a shipped spec.

The useful takeaway: Tesla is clearly prioritizing hands as a major bottleneck and is signaling aggressive iteration there.

Outdoor autonomy + dexterity together: what this enables (and what it doesn’t—yet)

What improved autonomy enables right now

If Optimus can walk more confidently on variable ground, Tesla can expand testing beyond perfectly flat indoor spaces. That matters because real deployment will require:

- navigating ramps and uneven transitions,
- safely moving around people,
- and maintaining stability while carrying objects.

But “walking outside” is still not the same as “outdoor job-ready.” The gap is reliability:

- Can it do it 1,000 times without falling?
- Can it do it in rain, dust, low light?
- Can it do it while carrying a load, turning, stopping abruptly?

The updates are meaningful progress, but not the finish line.
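The “1,000 times” question has simple arithmetic behind it. If each run succeeds independently with probability p, the chance of n clean runs in a row is p^n, and a streak of n clean runs only bounds the true failure rate to roughly 3/n at 95% confidence (the “rule of three”). A short Python sketch, assuming independent runs, which real robot trials only approximate:

```python
def prob_all_succeed(per_run_success: float, runs: int) -> float:
    """Probability that every one of `runs` independent attempts succeeds."""
    return per_run_success ** runs

def failure_rate_upper_bound(clean_runs: int, confidence: float = 0.95) -> float:
    """Upper bound on the per-run failure rate after `clean_runs` successes and no failures.

    Solves (1 - f) ** clean_runs = 1 - confidence for f.
    """
    return 1.0 - (1.0 - confidence) ** (1.0 / clean_runs)

# A robot that succeeds 99.9% of the time still fails a 1,000-run streak more often than not.
print(round(prob_all_succeed(0.999, 1000), 3))     # about 0.368
# 1,000 clean runs only show the failure rate is below roughly 0.3% per run.
print(round(failure_rate_upper_bound(1000), 4))    # about 0.003
```

This is why a handful of clean clips says little about deployability: demonstrating a low failure rate takes a very long streak of unedited runs.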

What improved dexterity enables right now

Delicate manipulation is a big deal for factory tasks like:

- picking and placing varied objects,
- sorting components,
- packing,
- and basic line-side assistance.

The moment a humanoid can repeatedly grasp objects of different shapes and materials, it becomes economically interesting—especially if it can be redeployed across tasks without specialized tooling.

What still blocks “general-purpose work”

Even with these improvements, real-world humanoid deployment typically fails on:

- long-horizon planning (multi-step tasks),
- recovery behaviors (dropping items, unexpected collisions),
- safety certification and predictable behavior near humans,
- maintenance (joints, gears, cabling, wear),
- and cost at scale.

Tesla itself describes Optimus as requiring deep stacks for balance, navigation, perception, and interaction, suggesting they view it as a full autonomy problem, not just a mechanical one (Tesla).

The bigger strategy: Tesla’s autonomy DNA applied to a body

Tesla’s “Autonomy at scale” message matters here. The company positions itself as building AI for vision and planning, supported by efficient inference, across vehicles and robots (Tesla).

That implies Optimus is not a side project—it’s meant to inherit:

- large-scale data collection thinking,
- neural-network-first control,
- and rapid iteration cycles.

If Tesla can translate even part of its vehicle autonomy pipeline to robotics—while solving the very different physics and safety challenges—Optimus could improve faster than teams that treat each robot as a one-off research platform.

What to watch next (practical signals, not hype)

If you want to track whether Optimus is truly getting closer to useful autonomy and dexterity, look for these “non-glamorous” indicators:

- More varied terrain and longer continuous walks (minutes, not seconds) with fewer interventions.
- Carrying while walking: locomotion degrades when the center of mass changes.
- Tool-like interactions: turning knobs, using simple hand tools, opening/closing containers.
- Repeatability: the same task performed reliably across many runs.
- Recovery behaviors: controlled falls, stand-up sequences, graceful failure modes.

Tesla’s own stated improvement areas around vision integration, gait naturalness, responsiveness to commands, and fall/stand recovery map directly to this checklist (Teslarati).
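The “carrying while walking” item comes down to basic mechanics: a load held in front of the body drags the combined center of mass forward, shrinking the margin before it leaves the foot’s support area. A rough static sketch in Python follows. The masses, distances, and foot dimensions are hypothetical, and a walking robot’s dynamic balance is more involved than this standing-still check.

```python
def combined_com_x(body_mass_kg, body_com_x_m, load_mass_kg, load_x_m):
    """Forward position of the combined center of mass (mass-weighted average)."""
    total = body_mass_kg + load_mass_kg
    return (body_mass_kg * body_com_x_m + load_mass_kg * load_x_m) / total

def support_margin_m(com_x_m, heel_x_m, toe_x_m):
    """Distance from the COM projection to the nearest foot edge; negative means outside."""
    return min(com_x_m - heel_x_m, toe_x_m - com_x_m)

# Hypothetical robot: 60 kg body with COM over the ankle (x = 0),
# foot spanning 0.10 m behind to 0.12 m ahead, load held 0.40 m in front.
BODY_KG, HEEL_M, TOE_M, LOAD_X_M = 60.0, -0.10, 0.12, 0.40

for load_kg in (0.0, 10.0, 20.0):
    com = combined_com_x(BODY_KG, 0.0, load_kg, LOAD_X_M)
    margin = support_margin_m(com, HEEL_M, TOE_M)
    print(f"load={load_kg:>4} kg  com_x={com:.3f} m  margin={margin:.3f} m")
```

In this toy setup the stability margin shrinks steadily as the load grows, which is why clips of a robot walking empty-handed say little about how it behaves with a box in its arms.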

Conclusion: These Optimus updates are meaningful, but the real test is reliability

Tesla Optimus showing improved outdoor autonomy (walking on uneven, graded terrain with neural control) and improved dexterity (tactile sensing and more capable manipulation) marks real progress in the two hardest parts of humanoid robotics (Teslarati; Electronic Specifier).

The outdoor walking clips signal the robot is being trained for unstructured environments, not just showroom floors. The dexterity improvements signal Tesla understands that “useful work” is mostly a hands problem.

The next chapter isn’t about cooler demos. It’s about making these capabilities boringly reliable—day after day, task after task, without resets. If Tesla can get there, Optimus moves from “impressive prototype” to “deployable labor platform,” and that’s when the real impact begins.
