Meta AI Proposes “Metacognitive Reuse”: Turning LLM Chains-of-Thought into a Procedural Handbook

Large language models (LLMs) have changed how machines solve problems. Instead of emitting a single answer, many modern models now generate chains of thought: step-by-step reasoning traces that make their process more interpretable and often more accurate. But chains of thought carry a cost. Long derivations consume tokens, add latency, and are often wasteful, because models repeatedly re-derive the same intermediate procedures across different problems. Meta AI’s recent work, which the authors call “Metacognitive Reuse”, proposes a pragmatic fix. The model reflects on its own traces, extracts recurring reasoning fragments as compact, named behaviors, and stores them in a searchable handbook. It then reuses those behaviors to make future reasoning shorter and more accurate. According to the authors, the results are striking: up to 46% fewer reasoning tokens, plus measurable accuracy gains in self-improvement settings. arXiv+1

The intuition: remember how you solved things, not just the answers

Imagine you’re solving lots of math contest problems. For many of them you perform the same subroutine over and over, say, converting a number between bases or applying inclusion–exclusion to count sets. Writing out that subroutine in full each time is tedious and slows you down. A human expert would take notes: “Use base-conversion shortcut X” or “Apply inclusion–exclusion recipe Y.” That’s essentially what Metacognitive Reuse does for LLMs.

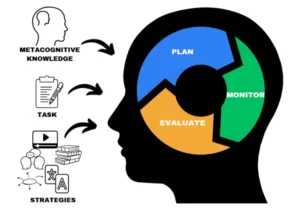

Instead of treating each chain-of-thought trace as an ephemeral, one-off computation, Meta’s pipeline mines past traces to find frequently used fragments, distills them into concise behaviors (a short name plus a one-line instruction), and stores them in a behavior handbook. At inference time, the LLM can either retrieve and condition on relevant behaviors from that handbook (behavior-conditioned inference, or BCI) or internalize those behaviors through fine-tuning (behavior-conditioned supervised fine-tuning, or BC-SFT). The handbook works like a procedural cheat-sheet the model can consult to avoid re-derivation. arXiv+1
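To make the two modes concrete, here is a minimal Python sketch of a handbook entry and how it might be used. It assumes a behavior is just a name plus a one-line instruction, as described above. The names `Behavior`, `bci_prompt`, and `bc_sft_example`, the prompt wording, and the example behavior are hypothetical and not taken from Meta's code. The BC-SFT helper makes one further assumption: training prompts omit the behaviors, so the model has to internalize them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Behavior:
    """One handbook entry: a short name plus a one-line instruction."""
    name: str
    instruction: str

    def render(self) -> str:
        return f"{self.name}: {self.instruction}"


def bci_prompt(question: str, behaviors: list[Behavior]) -> str:
    """Behavior-conditioned inference (BCI): list the retrieved behaviors in
    context ahead of the question so the model can apply them by name."""
    lines = ["Useful behaviors:"]
    lines += [f"- {b.render()}" for b in behaviors]
    lines += ["", f"Question: {question}"]
    return "\n".join(lines)


def bc_sft_example(question: str, conditioned_trace: str) -> dict[str, str]:
    """One BC-SFT training pair (assumption): the trace was generated with
    behaviors in context, but the prompt omits them, so fine-tuning has to
    move the procedures into the model's parameters."""
    return {"prompt": question, "completion": conditioned_trace}


# Hypothetical example behavior.
inclusion_exclusion = Behavior(
    "behavior_inclusion_exclusion",
    "Count a union by adding set sizes and subtracting pairwise overlaps "
    "(then adding back triple overlaps, and so on).",
)
prompt = bci_prompt("How many of 1..100 are divisible by 2 or 3?", [inclusion_exclusion])
print(prompt)
```

Keeping the behavior as a frozen dataclass makes entries hashable and easy to deduplicate. Rendering each entry as `name: instruction` keeps the in-context footprint small.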

How Metacognitive Reuse actually works: a high-level overview

The method has three core stages:

- Trace collection: Let the model solve tasks and emit chains of thought, and collect these traces across many problems. They are the raw material for metacognitive analysis. arXiv

- Metacognitive mining: Use an LLM-driven reflection process to detect recurring fragments in traces, cluster similar fragments, and propose concise behavior summaries (e.g., a name and a one-line instruction). The pipeline keeps only fragments that are useful, reusable, and concise. arXiv

- Handbook use and internalization: Store the discovered behaviors in a searchable handbook. At inference you can (a) retrieve behaviors and present them to the model in context (BCI), or (b) fine-tune the model on traces augmented with behavior annotations so that it internalizes those procedures (BC-SFT). Both approaches aim to replace slow derivations with fast procedural hints. arXiv
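Meta's mining step uses LLM-driven reflection. A team could add a cheap first pass that counts which reasoning steps recur across traces, either on its own or to pre-select candidates for an LLM to name. The sketch below is a plain frequency heuristic, not the paper's method:

- It treats each line of a trace as one step.
- It masks numbers so the same procedure applied to different values collapses to one key.
- It keeps steps that appear in at least `min_support` traces.

The function names and toy traces are hypothetical.

```python
import re
from collections import Counter


def normalize(step: str) -> str:
    """Lowercase, mask numbers, and collapse whitespace so the same procedure
    applied to different values maps to one key."""
    step = re.sub(r"\d+", "#", step.lower())
    return re.sub(r"\s+", " ", step).strip()


def candidate_fragments(traces: list[str], min_support: int = 2) -> list[tuple[str, int]]:
    """Return (step, support) pairs for normalized steps that occur in at
    least `min_support` distinct traces, most frequent first."""
    support: Counter = Counter()
    for trace in traces:
        # A set, so a step repeated inside one trace still counts once.
        steps = {normalize(line) for line in trace.splitlines() if line.strip()}
        support.update(steps)
    frequent = [(step, n) for step, n in support.items() if n >= min_support]
    return sorted(frequent, key=lambda item: (-item[1], item[0]))


traces = [
    "Convert 101 from base 2 to base 10.\nSum the digits.",
    "Convert 777 from base 8 to base 10.\nCheck parity.",
    "Convert 12 from base 3 to base 10.\nSum the digits.",
]
print(candidate_fragments(traces))
# -> [('convert # from base # to base #.', 3), ('sum the digits.', 2)]
```

The surviving fragments are only candidates. An LLM (or a human) would still need to turn each one into a named, one-line behavior.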

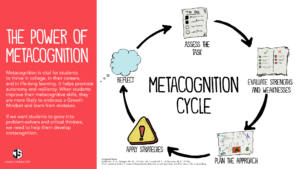

That metacognitive loop (solution → reflection → reusable procedure → reuse) is what the authors mean by metacognitive reuse. The model reasons about its own reasoning and turns that insight into concrete shortcuts.

What Meta’s experiments show (short version)

Meta evaluated Metacognitive Reuse across multiple reasoning tasks and reported several notable outcomes:

- Token efficiency: Providing the model with relevant behaviors in context reduced the number of reasoning tokens by up to 46% while matching or improving baseline accuracy. That matters because generated tokens are a major cost driver in many LLM deployments. arXiv+1

- Self-improvement: Without any parameter updates, models that drew on behaviors mined from their own past problem solving performed better, with up to 10% higher accuracy than a naive critique-and-revise baseline. This is “learning from yourself” via in-context behavioral memory. arXiv

- Better SFT: When behavior-conditioned traces were used for supervised fine-tuning, the resulting models reasoned more effectively than models trained with vanilla SFT on the raw traces. In short, behavior-aware fine-tuning was more effective at turning non-reasoning models into reasoning models. arXiv

The paper demonstrates these gains on competitive math reasoning benchmarks, including MATH and AIME. The precise numbers and experimental details are in the preprint. arXiv
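A figure like “up to 46%” is a ratio between two runs over the same problems. The helper below computes it from aggregate token totals. The numbers in the example are made up to illustrate the arithmetic; they are not results from the paper.

```python
def token_reduction_pct(baseline_tokens: list[int], bci_tokens: list[int]) -> float:
    """Percent fewer reasoning tokens with behaviors in context, computed from
    totals over the same problems (not an average of per-problem ratios)."""
    if len(baseline_tokens) != len(bci_tokens):
        raise ValueError("both runs must cover the same problems")
    base, bci = sum(baseline_tokens), sum(bci_tokens)
    return 100.0 * (base - bci) / base


# Made-up token counts for three problems (illustration only).
baseline = [1000, 800, 1200]   # total 3000
with_bci = [540, 430, 650]     # total 1620
print(f"{token_reduction_pct(baseline, with_bci):.1f}% fewer reasoning tokens")
# -> 46.0% fewer reasoning tokens
```

Aggregating totals weights long problems more heavily than averaging per-problem ratios would. Whichever you choose, report it alongside accuracy, since the claim is fewer tokens at matched or better accuracy.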

Why this matters: practical and conceptual implications

Lower costs, higher throughput

Reducing the tokens needed for reasoning has a direct economic impact. In most production settings, the cost of using an LLM scales with prompt and output token counts. Cutting reasoning tokens by tens of percent therefore lowers both inference cost and latency. For latency-sensitive services (e.g., live tutoring, interactive assistants), shorter chains mean faster responses and a smaller context footprint, freeing room for exploration or longer-term context. MarkTechPost+1
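A back-of-the-envelope cost model shows how a reasoning-token reduction translates into spend. It also makes one detail visible: behaviors placed in context add prompt tokens, so the bill shrinks somewhat less than the reasoning tokens do. The per-million-token prices and token counts below are hypothetical placeholders, not any provider's actual pricing.

```python
def cost_per_request(prompt_tokens: int, output_tokens: int,
                     usd_per_m_input: float, usd_per_m_output: float) -> float:
    """Dollar cost of one request under per-million-token pricing."""
    return (prompt_tokens * usd_per_m_input
            + output_tokens * usd_per_m_output) / 1_000_000


# Hypothetical prices and token counts, for illustration only.
PRICE_IN, PRICE_OUT = 1.0, 4.0   # USD per million input / output tokens
baseline = cost_per_request(500, 4000, PRICE_IN, PRICE_OUT)
# BCI: ~300 extra prompt tokens for retrieved behaviors, and
# 46% fewer reasoning tokens (4000 -> 2160).
with_bci = cost_per_request(500 + 300, 2160, PRICE_IN, PRICE_OUT)
savings_pct = 100 * (1 - with_bci / baseline)
print(f"baseline ${baseline:.5f}, BCI ${with_bci:.5f}, savings {savings_pct:.1f}%")
# -> baseline $0.01650, BCI $0.00944, savings 42.8%
```

With these placeholder numbers, a 46% cut in reasoning tokens yields roughly 43% lower cost per request. The gap widens as the retrieved behaviors get longer or as input tokens get pricier.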

Better lifelong learning for models

The “behavior handbook” is a simple form of memory that the model builds for itself from prior experience. It points toward agents that accumulate reusable skills incrementally. They would do so not only by changing parameters but by building and consulting a memory of procedures. That kind of memory enables a form of metacognitive self-improvement, in which models get better at how they reason over time. arXiv
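The memory framing can be sketched as a loop in which the handbook is the only thing that changes between problems. In this sketch, `solve` and `reflect` are hypothetical stand-ins for LLM calls:

- `solve` answers a problem with the current behaviors in context.
- `reflect` proposes new behaviors from the resulting trace.

It illustrates the accumulate-and-reuse idea; it does not reproduce Meta's self-improvement protocol.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Handbook:
    """Growing procedural memory: behavior name -> one-line instruction."""
    entries: dict[str, str] = field(default_factory=dict)

    def add(self, name: str, instruction: str) -> bool:
        """Add a behavior unless one with that name exists; report whether it was added."""
        if name in self.entries:
            return False
        self.entries[name] = instruction
        return True


# Hypothetical stand-ins for LLM calls.
Solver = Callable[[str, dict[str, str]], str]              # (problem, behaviors) -> trace
Reflector = Callable[[str, str], list[tuple[str, str]]]    # (problem, trace) -> new behaviors


def self_improve(problems: list[str], solve: Solver, reflect: Reflector,
                 handbook: Handbook) -> list[str]:
    """Solution -> reflection -> reusable procedure -> reuse, one problem at a
    time. No parameters are updated; only the handbook grows."""
    traces = []
    for problem in problems:
        trace = solve(problem, dict(handbook.entries))  # reuse what is known so far
        traces.append(trace)
        for name, instruction in reflect(problem, trace):
            handbook.add(name, instruction)
    return traces


# Toy stubs so the loop runs without a model.
def fake_solve(problem: str, behaviors: dict[str, str]) -> str:
    return f"{problem} using {sorted(behaviors)}"


def fake_reflect(problem: str, trace: str) -> list[tuple[str, str]]:
    return [("behavior_check_parity", "Check parity before splitting into cases.")]


handbook = Handbook()
traces = self_improve(["p1", "p2"], fake_solve, fake_reflect, handbook)
print(traces)
# -> ['p1 using []', "p2 using ['behavior_check_parity']"]
```

The second problem already sees the behavior extracted from the first. The duplicate proposal after the second problem is ignored, so the handbook does not bloat with repeats.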

Interpretability and modularity

Behaviors are human-readable: a name and a short instruction. That makes the model’s latent procedural knowledge easier to inspect, audit, and curate. The handbook offers a middle ground between opaque parametric knowledge and verbose chains of thought. Its entries are concise, modular, auditable procedures that humans can read and, potentially, edit. This could be useful for safety monitoring, curriculum design, and human-in-the-loop corrections. arXiv

Limitations and open questions

While promising, Metacognitive Reuse is not a silver bullet. Important caveats include:

- Behavior discovery quality: The approach depends on reliably detecting genuinely reusable fragments in noisy chains of thought. If the mining step picks poor or brittle behaviors, the handbook can propagate mistakes. It can also encourage overreliance on shortcuts that fail outside narrow contexts. Human curation or better filtering strategies may be needed. arXiv

- Distribution shift: Behaviors learned on one class of problems (e.g., combinatorics) might not transfer to another (e.g., geometric reasoning). The handbook will be most useful where recurring subroutines genuinely generalize. arXiv

- Safety and misuse: Procedural memory is powerful but also risky. Compact behaviors could be misused to produce harmful outputs more efficiently (e.g., by chaining steps that bypass safeguards). Robust policies and guardrails will be necessary for deployment. arXiv

- Computational trade-offs: Mining, clustering, and maintaining an evolving handbook introduce their own compute and storage costs. The net win depends on the application and on how often fragments are actually reused. arXiv
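One way to act on the curation concern is a simple filter. It keeps a behavior only if the behavior recurs often enough and does not lower accuracy on held-out problems when placed in context. The stats fields, thresholds, and example values below are hypothetical. Running the same check separately per problem domain is one way to catch the distribution-shift issue.

```python
from dataclasses import dataclass


@dataclass
class BehaviorStats:
    """Hypothetical per-behavior metadata collected during curation."""
    name: str
    support: int          # how many traces the behavior was mined from
    acc_with: float       # held-out accuracy with the behavior in context
    acc_without: float    # accuracy on the same problems without it


def prune(stats: list[BehaviorStats], min_support: int = 3,
          min_gain: float = 0.0) -> list[str]:
    """Keep behaviors that recur often enough and do not lower accuracy."""
    return [
        s.name for s in stats
        if s.support >= min_support and s.acc_with - s.acc_without >= min_gain
    ]


stats = [
    BehaviorStats("behavior_base_conversion", 12, 0.81, 0.78),  # helps: keep
    BehaviorStats("behavior_assume_symmetry", 9, 0.70, 0.74),   # hurts: drop
    BehaviorStats("behavior_one_off_trick", 1, 0.90, 0.80),     # too rare: drop
]
print(prune(stats))
# -> ['behavior_base_conversion']
```

A small positive `min_gain` trades recall for safety: fewer behaviors survive, but each one has shown at least some measurable benefit.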

How this fits into broader metacognition research

Metacognitive Reuse joins a growing body of work that gives models the ability to reason about their own reasoning. Researchers have explored metacognitive architectures, introspective agents, and mechanisms for self-evaluation and reflection. What’s distinct here is the practical focus: turning introspection into reusable, procedural artifacts that directly lower token costs and often improve accuracy. In other words, it is not just theory but an operational pattern that integrates reflection, memory, and proceduralization. arXiv+1

Community reactions have been quick: developer and research threads point out the technique’s elegance and immediate utility for cost-sensitive deployments, while others flag the need for careful curation and robustness tests before wide use. You can find summaries and early commentary across tech news and social platforms. MarkTechPost+1

Potential applications

Metacognitive Reuse is broadly applicable wherever models perform multi-step reasoning repeatedly:

- Education & tutoring: Personal tutors could accumulate student-specific heuristics and reuse them to tailor explanations.

- Coding assistants: Reusable code-transformation procedures or debugging heuristics could be stored and reapplied across similar tasks.

- Automated research assistants: When performing multi-stage literature reviews or synthesis, procedural snippets (how to extract methodology sections, how to triangulate results) can be reused.

- Scientific simulation reasoning: Recurrent analytic fragments in physics or chemistry reasoning could be memoized as behaviors for faster, more reliable calculations. arXiv

Practical steps for teams who want to experiment

If you want to try Metacognitive Reuse ideas in your own projects, a minimal roadmap would look like this:

- Collect reasoning traces: Enable chain-of-thought outputs on a relevant workload and store the traces.

- Mine recurring fragments: Use heuristic clustering or another model to find repeated substrings and candidate fragments. Then ask an LLM to distill each candidate into a compact, named behavior.

- Build a handbook: Store behaviors with metadata (source traces, applicability tags, confidence).

- Evaluate BCI: At inference, retrieve the top-k relevant behaviors and prepend or interleave them into prompts. Measure token counts, latency, and accuracy.

- Optionally, try SFT: Fine-tune on behavior-annotated traces to internalize recurring procedures in the model's parameters, and compare the results with BCI.

- Monitor and curate: Validate behavior correctness and regularly prune brittle or dangerous behaviors. arXiv
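The retrieval step can start very simply. The sketch below ranks handbook entries by bag-of-words cosine similarity to the question and prepends the top-k to the prompt. Lexical overlap here stands in for an embedding model or topic-based lookup, which would likely be more robust in practice. The function names and example behaviors are hypothetical. To measure token savings, count tokens with your model's own tokenizer rather than by splitting on whitespace.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercase word counts; digits, punctuation, and underscores are dropped."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(question: str, handbook: dict[str, str], k: int = 2) -> list[str]:
    """Names of the k behaviors most similar to the question (score > 0 only)."""
    q = bag_of_words(question)
    scored = [(cosine(q, bag_of_words(f"{name} {text}")), name)
              for name, text in handbook.items()]
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [name for score, name in scored[:k] if score > 0]


def prepend_behaviors(question: str, handbook: dict[str, str], k: int = 2) -> str:
    """BCI prompt: retrieved behaviors first, then the question."""
    names = top_k(question, handbook, k)
    header = "\n".join(f"{name}: {handbook[name]}" for name in names)
    return f"{header}\n\n{question}" if header else question


handbook = {  # hypothetical entries
    "behavior_base_conversion": "Convert between bases by repeated division by the base.",
    "behavior_inclusion_exclusion": "Count a union by adding set sizes and subtracting overlaps.",
    "behavior_parity_check": "Check whether a quantity is odd or even before case analysis.",
}
print(prepend_behaviors("Convert 255 to base 16.", handbook))
```

Only behaviors with a positive score are returned, so an unrelated question gets no behaviors at all. This keeps irrelevant procedures out of the context window.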

These steps echo the pipeline described in Meta’s work, but teams should adapt thresholds, retrieval strategies, and curation policies to their domain needs.

Final thoughts: a useful engineering pattern with long-term promise

Metacognitive Reuse is an appealing blend of introspective AI and pragmatic engineering. It channels the human habit of turning repeated mental work into compact procedural knowledge — recipe cards, checklists, and handbooks — and applies that to LLMs. Early experiments from Meta indicate meaningful cost and performance gains on benchmark reasoning tasks, and the idea dovetails with broader trends toward memory-augmented, self-improving agents.

If you’re building applications that rely on repeated multi-step reasoning, Metacognitive Reuse is worth a close look: it’s a conceptually simple yet practically powerful pattern for amplifying model efficiency, interpretability, and lifelong learning. Like any powerful pattern, it comes with caveats: behavior discovery quality, distributional robustness, and safety must be treated carefully. Still, this approach may be one of those engineering ideas that quietly moves from research paper to baseline in production stacks — a small architectural change that yields outsized returns. arXiv+1

Sources & further reading

- Meta AI, Metacognitive Reuse: Turning Recurring LLM Reasoning Into Concise Behaviors (preprint). arXiv

- MarkTechPost summary: Meta AI Proposes ‘Metacognitive Reuse’: Turning LLM Chains-of-Thought into a Procedural Handbook that Cuts Tokens by 46%. MarkTechPost

- Community discussion and commentary on Reddit and other forums. Reddit+1
