Meta AI Releases Omnilingual ASR — A Breakthrough for Speech Technology

On November 10–11, 2025, Meta’s Fundamental AI Research (FAIR) team published Omnilingual ASR, an open-source suite of automatic speech recognition models that dramatically expands the reach of speech-to-text technology. The headline claim is striking: Omnilingual ASR supports more than 1,600 languages (and can generalize to thousands more), including hundreds of low-resource languages that previously had little or no representation in modern ASR toolkits. Meta released models, a technical paper, a demo on Hugging Face, and a large accompanying corpus — all under permissive open licenses — so researchers, developers and communities can apply and extend the system immediately. GitHub+1

What exactly did Meta release?

Omnilingual ASR is not a single monolithic model but a family of models and assets:

-

A set of pretrained speech models spanning several architectures (wav2vec-style self-supervised encoders, CTC-based supervised models, and larger encoder–decoder LLM-style ASR models). Among these is a 7-billion-parameter multilingual audio representation model that Meta highlights as a core component. Venturebeat

-

A massive speech corpus — the Omnilingual ASR Corpus — containing hundreds of thousands of labeled audio segments covering ~348 underserved languages and many more in an extended set; the corpus is available on Hugging Face under a CC-BY-4.0 license and weighs in at nearly a terabyte in converted Parquet form. Hugging Face

-

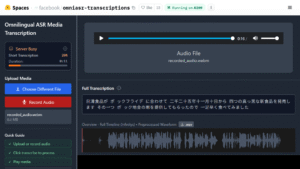

Code, model checkpoints, and inference examples published on Meta’s GitHub repo (facebookresearch/omnilingual-asr) and a demo on Hugging Face Spaces for quick experimentation. GitHub+1

The release is explicitly framed as open source and community-friendly: Meta used permissive licensing and documentation designed to let third parties adapt the stack, add new languages with just a few paired examples, and build production systems or research projects on top of the codebase. Venturebeat

Why this matters — the problem Omnilingual ASR addresses

Most mainstream ASR systems (popular open models and many commercial services) focus on a few dozen to a hundred high-resource languages. That leaves thousands of languages — many spoken by millions — outside the reach of accessible voice technology. This “long tail” has practical consequences: inaccessible educational content, exclusion from voice interfaces, under-preserved oral histories, and barriers to digital inclusion. Meta’s release tackles three major bottlenecks at once:

- Coverage: By training on a vastly larger and more diverse multilingual corpus, Omnilingual ASR expands direct support to 1,600+ languages and is designed to generalize further. Venturebeat
- Low-data adaptability: The system includes zero-shot/few-shot mechanisms that let the model transcribe languages it saw little or nothing of during training, conditioned on only a handful of paired audio–text examples supplied at inference time. That reduces the need for large hand-labeled datasets for every language. Venturebeat
- Open tooling: Releasing code, checkpoints, and a corpus under permissive licenses removes legal and technical barriers to adoption by universities, local research labs, non-profits, and startups. GitHub+1
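The sketch below makes the few-shot idea concrete. It shows one way a handful of paired examples could be packed into a single conditioning sequence ahead of the clip to be transcribed. Everything in it is an assumption for illustration. The `<audio>`/`<text>`/`<sep>` markers, the `ContextExample` class, and `build_incontext_prompt` are hypothetical names, and plain lists of floats stand in for encoder outputs. It is not Omnilingual ASR’s actual prompt format.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple, Union

# Hypothetical segment markers; the real model's special tokens may differ.
AUDIO, TEXT, SEP = "<audio>", "<text>", "<sep>"

Segment = Tuple[str, Union[List[float], str, None]]


@dataclass
class ContextExample:
    audio_features: Sequence[float]  # stand-in for the encoder output of one clip
    transcript: str                  # its reference transcription


def build_incontext_prompt(
    examples: Sequence[ContextExample],
    target_audio: Sequence[float],
    max_examples: int = 10,
) -> List[Segment]:
    """Interleave (audio, text) example pairs, then append the target audio.

    The final TEXT segment is left empty: that is the slot a decoder
    would be asked to fill with the target clip's transcript.
    """
    prompt: List[Segment] = []
    for ex in list(examples)[:max_examples]:
        prompt.append((AUDIO, list(ex.audio_features)))
        prompt.append((TEXT, ex.transcript))
        prompt.append((SEP, None))
    prompt.append((AUDIO, list(target_audio)))
    prompt.append((TEXT, None))  # open slot for the model's output
    return prompt


# Toy usage: two paired examples in the target language, then the clip to transcribe.
examples = [ContextExample([0.1, 0.2], "example one"), ContextExample([0.3], "example two")]
prompt = build_incontext_prompt(examples, target_audio=[0.5, 0.6, 0.7])
print([kind for kind, _ in prompt])
```

The design point is that no weights change. The "adaptation" lives entirely in the conditioning sequence, which the model imitates when it fills the final open slot.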

In short, Omnilingual ASR is an effort to extend the benefits of speech technologies to communities and languages historically left out of the AI roadmap. Analysts and coverage around the launch framed the release as a significant step toward equitable language technology. DeepLearning.ai+1

How Omnilingual ASR works — the technical outline

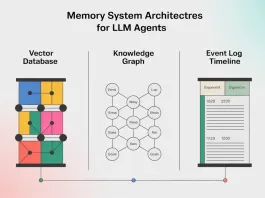

Meta’s release combines several complementary techniques rather than a single trick:

- Massive, diverse pretraining: The models learn robust audio representations from a very large and varied pool of multilingual speech. Meta reports training on more than 4.3 million hours of audio across 1,600+ languages for parts of the stack. Venturebeat
- Self-supervised learning (wav2vec family): Large wav2vec-style encoders learn language-agnostic acoustic representations that form the foundation for efficient downstream transcription. These encoders are offered in differing sizes (from hundreds of millions to billions of parameters) to trade off compute vs. accuracy. Venturebeat
- CTC and encoder–decoder ASR heads: For supervised transcription there are CTC-based and encoder–decoder models; the latter yield higher accuracy for difficult languages and long contexts. GitHub
- In-context / few-shot adaptation: One of the key innovations is an LLM-style approach to ASR in which the model is conditioned at inference time on a few (audio, text) pairs so it can transcribe languages it was not trained on, analogous to in-context learning in text LLMs. This extends coverage to new languages (Meta’s zero-shot setting) without retraining. Venturebeat
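CTC heads like the ones mentioned above are commonly decoded with a simple best-path rule. Take the most likely symbol in each audio frame, merge consecutive repeats, and drop the special blank symbol. The sketch below is a generic implementation of that rule, not code from the Omnilingual ASR repo. The toy vocabulary and the choice of index 0 as the blank are assumptions.

```python
from itertools import groupby
from typing import Sequence

BLANK = 0  # assumption: vocabulary index 0 is the CTC blank symbol


def ctc_greedy_decode(frame_scores: Sequence[Sequence[float]], vocab: Sequence[str]) -> str:
    """Best-path CTC decoding: per-frame argmax, merge repeats, drop blanks."""
    best = [max(range(len(scores)), key=scores.__getitem__) for scores in frame_scores]
    merged = [label for label, _ in groupby(best)]  # collapse runs like a,a,a -> a
    return "".join(vocab[label] for label in merged if label != BLANK)


# Toy example: 7 frames of scores over the vocabulary [blank, "a", "b"].
vocab = ["<blank>", "a", "b"]
frames = [
    [0.1, 0.8, 0.1],    # a
    [0.2, 0.7, 0.1],    # a (repeat, merged with the previous frame)
    [0.9, 0.05, 0.05],  # blank: separates two genuine a's
    [0.1, 0.6, 0.3],    # a
    [0.1, 0.2, 0.7],    # b
    [0.2, 0.1, 0.7],    # b (repeat, merged)
    [0.6, 0.2, 0.2],    # blank
]
print(ctc_greedy_decode(frames, vocab))  # prints "aab"
```

Each frame is decoded independently, so CTC decoding is cheap. Encoder–decoder (LLM-ASR) models instead generate text token by token, which costs more compute per utterance.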

Meta’s documentation breaks down model sizes, memory requirements, and inference recipes so developers can pick a model that suits desktop, cloud, or edge deployment scenarios. The GitHub README and inference guides are intentionally practical, with scripts to run batch transcriptions and to add new languages with minimal data. GitHub
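A back-of-the-envelope check helps when reading memory tables: the weights alone need roughly parameters × bytes per parameter. The helper below is a hypothetical sketch, not taken from Meta’s docs. The 300M size is an illustrative small-encoder figure. Real inference also needs memory for activations and decoding state, so treat the result as a lower bound.

```python
# Bytes needed to store one parameter in common numeric formats.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weight_memory_gib(num_params: float, dtype: str = "bf16") -> float:
    """Memory for the weights alone, in GiB (a lower bound on inference memory)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1024**3


# Illustrative sizes: a ~300M-parameter encoder and the 7B model discussed above.
for name, params in [("300M", 300e6), ("7B", 7e9)]:
    row = ", ".join(f"{d}={weight_memory_gib(params, d):.2f} GiB" for d in ("fp32", "bf16", "int8"))
    print(f"{name}: {row}")
```

By this estimate, a 7B model in 16-bit precision needs about 13 GiB for its weights alone, which is why it targets server GPUs. A few-hundred-million-parameter encoder fits comfortably on modest hardware.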

How well does it perform?

Meta reports strong accuracy for a majority of supported languages: their 7B LLM-ASR model achieves character error rates (CER) below 10% for about 78% of the 1,600+ languages in their evaluation — a meaningful standard for intelligible transcriptions across many scripts and phonologies. For many low-resource languages that previously had zero ASR support, the system represents a first step toward usable text transcripts. GitHub+1
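For readers unfamiliar with the metric: CER is the character-level edit distance (substitutions, deletions, insertions) between the model output and the reference transcript, divided by the number of reference characters. The sketch below computes a pooled CER and the share of languages under the 10% threshold quoted above. It applies no text normalization (casing, punctuation). Because the exact normalization Meta used is not described here, its numbers are not directly comparable to the reported ones.

```python
from typing import Dict, Sequence


def edit_distance(ref: str, hyp: str) -> int:
    """Levenshtein distance between two strings, counted in characters."""
    prev = list(range(len(hyp) + 1))  # distances from the empty ref prefix
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(
                prev[j] + 1,             # deletion of r
                curr[j - 1] + 1,         # insertion of h
                prev[j - 1] + (r != h),  # substitution (or free match)
            ))
        prev = curr
    return prev[-1]


def cer(refs: Sequence[str], hyps: Sequence[str]) -> float:
    """Pooled CER: total character edits divided by total reference characters."""
    errors = sum(edit_distance(r, h) for r, h in zip(refs, hyps))
    return errors / sum(len(r) for r in refs)


def share_below(cer_by_lang: Dict[str, float], threshold: float = 0.10) -> float:
    """Fraction of languages whose CER is strictly below the threshold."""
    return sum(v < threshold for v in cer_by_lang.values()) / len(cer_by_lang)


print(cer(["habari yako"], ["habari yaku"]))  # one substitution over 11 characters
```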

Performance will vary by language, domain, recording quality, and whether the language was heavily represented in training. The zero-shot/few-shot variants are particularly exciting because they lower the entry cost for adding languages, but they won’t magically match a model trained on hundreds of hours of curated in-language audio for every scenario. Real-world applications should expect to validate and fine-tune for use-case-specific reliability.

How this compares with other open ASR models

When compared with widely used open ASR systems like Whisper (OpenAI) or other research systems, Omnilingual ASR’s key differentiators are sheer language breadth, few-shot extensibility, and the scope of the accompanying corpus and tooling. Whisper popularized robust, generalist transcription across roughly a hundred languages. Omnilingual ASR aims to push that envelope into the long tail of linguistic diversity, passing 1,600 supported languages with claims of generalizing to 5,400+ via in-context learning. It also aims to provide an extensible engineering stack for communities to pick up and improve. Venturebeat+1

Real-world applications and impact

The possibilities are broad:

- Accessibility: Better captioning for schools, government broadcasts, and online video in local languages.
- Cultural preservation: Digitization of oral histories, folklore, and endangered languages becomes more feasible.
- Local NLP pipelines: Transcription enables downstream tasks (translation, information extraction, voice search) for languages that lacked data previously.
- Voice interfaces: Assistants and IVR systems can reach speakers in their native tongues without months of data collection.
- Research and education: Linguists and computational researchers get both tools and data to study phonology and language change at scale.

Because the corpus and models are open, NGOs, universities, and small companies gain a practical path to integrate speech tech locally without the licensing and cost concerns that can otherwise be prohibitive. Hugging Face+1

Ethical considerations, risks, and responsibilities

A release of this scope brings important ethical and policy questions that deserve careful attention:

- Consent and data provenance: Building massive multilingual corpora requires sensitive handling of consent, appropriate redaction, and respect for community norms. Meta’s dataset and repo provide some documentation on data sources and licenses, but implementers should review provenance and ensure community consent when deploying models in local settings. Hugging Face+1
- Misuse risk: Highly capable speech recognition can enable mass surveillance if misapplied. Open releases increase access to beneficial uses but also lower barriers for harmful applications; the community and policymakers must weigh safeguards, disclosures, and usage constraints. Venturebeat
- Bias and fairness: Models trained on skewed data (e.g., urban speakers, certain dialects) may underperform for marginalized speaker groups or dialectal variants. Projects should include localized evaluation and participatory testing with real speakers. Venturebeat
- Economic and social effects: Democratizing technology is broadly positive, but job and market impacts (e.g., on local transcriptionists) should be considered. Ideally, open tools become a force multiplier for local capacity rather than a cause of displacement without recourse.

Meta’s open approach arguably enables external auditing and community remediation of many of these issues, but responsibility now shifts to the broader ecosystem — researchers, developers, civil society and governments — to use the resources with care.

How to try it today

Meta published code, model weights, and the Omnilingual ASR Corpus on GitHub and Hugging Face. If you want to experiment:

- Visit the facebookresearch/omnilingual-asr GitHub repo for models and quickstart scripts. The README includes inference recipes and model size/perf tradeoffs. GitHub
- Try the Hugging Face demo and browse the Omnilingual ASR Corpus dataset card to understand language coverage and licensing. The corpus is available under CC-BY-4.0 and includes a subset of ~348 underserved languages. Hugging Face
- For production use, choose a model size that balances latency, memory, and accuracy. Smaller wav2vec encoders are faster for edge devices, while the 7B LLM-ASR model is aimed at server/cloud accuracy. The repo includes tips for batching and language conditioning. GitHub+1
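Batching matters for throughput. One common approach is to sort clips by duration and group similar lengths, so short clips are not padded up to much longer ones. The function below is a hypothetical, generic sketch rather than the repo’s own batching logic. `max_batch_seconds` is an assumed budget on padded audio per batch that you would tune to your hardware.

```python
from typing import List, Sequence, Tuple


def make_batches(clips: Sequence[Tuple[str, float]], max_batch_seconds: float) -> List[List[str]]:
    """Group clips of similar duration so padded batches waste little compute.

    clips: (clip_id, duration_seconds) pairs. A batch's padded cost is
    approximated as len(batch) * longest clip and kept under max_batch_seconds
    (a single clip longer than the budget still gets its own batch).
    """
    batches: List[List[str]] = []
    current: List[str] = []
    # Clips arrive in ascending duration, so `dur` is the batch's longest clip if it joins.
    for clip_id, dur in sorted(clips, key=lambda c: c[1]):
        if current and (len(current) + 1) * dur > max_batch_seconds:
            batches.append(current)
            current = []
        current.append(clip_id)
    if current:
        batches.append(current)
    return batches


clips = [("a", 2.0), ("b", 10.0), ("c", 3.0), ("d", 9.0), ("e", 2.5)]
print(make_batches(clips, max_batch_seconds=20.0))  # [['a', 'e', 'c'], ['d', 'b']]
```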

Because the release is permissively licensed, developers can integrate models into apps, research pipelines, and translation stacks without restrictive commercial limitations — a deliberate contrast to some earlier, more constrained open releases. Venturebeat

Limitations and what to watch next

Omnilingual ASR is a leap forward, but it’s not a silver bullet:

- Per-language performance varies. Low error rates reported on many languages are promising, but expect variance across dialects, noisy environments, or domain-specific speech. Benchmarking in the target setting is essential. GitHub
- Compute costs for best accuracy. The largest models demand substantial inference resources; practical deployments may need quantization, distillation, or hybrid architectures to run affordably at scale. Venturebeat
- Quality of the corpus. While the Omnilingual ASR corpus is large and diverse, quality and metadata (speaker demographics, recording conditions) will shape downstream fairness and bias properties. Documentation and community curation will be key. Hugging Face
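Quantization, one of the cost-reduction options listed above, stores weights in fewer bits. Here is a minimal sketch of symmetric per-tensor int8 quantization in plain Python, purely to show the idea. It is not a recipe Meta publishes. Practical deployments would rely on an established toolkit and typically use finer-grained (for example per-channel) scales.

```python
from typing import List, Sequence, Tuple


def quantize_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w ≈ scale * q, with q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero for all-zero tensors
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: Sequence[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.0, 1.27, 0.003]
q, scale = quantize_int8(weights)
print(q, scale)
print(max(abs(w - r) for w, r in zip(weights, dequantize(q, scale))))  # worst-case rounding error
```

The per-weight reconstruction error is at most half the scale. This is why a single large outlier weight, which inflates the scale, costs precision for every other weight in the tensor.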

Looking ahead, the most interesting developments will likely be community-led fine-tuning, toolkits for localized dataset collection and consent, and integrations that combine Omnilingual ASR with translation, retrieval, and voice UX frameworks.

Bottom line

Meta’s Omnilingual ASR is a landmark open-source effort to make speech recognition equitable across the world’s languages. By coupling a large multilingual model family with a substantial corpus and permissive licensing, Meta has handed researchers and communities the raw materials to build voice technology for languages that had been ignored by mainstream ASR. The release raises legitimate ethical and operational challenges (from consent to misuse risk), but the openness of the project creates an opportunity: researchers, civil society, and local technologists can inspect, extend, and correct the system in public. If the community seizes that opportunity, Omnilingual ASR could be a major step toward more inclusive, global voice technology. GitHub+2 Hugging Face+2