Improved Understanding of Language Models

Introduction

Language is the foundation of human communication, shaping how we share ideas, knowledge, and culture. In the digital era, machines are being designed to understand, interpret, and generate human language with remarkable accuracy. This progress is driven by language models—powerful AI systems that can process and generate natural language.

From predictive text in smartphones to advanced conversational agents like ChatGPT, language models have transformed the way humans interact with machines. However, as these models become more complex, there is a growing need to improve our understanding of how they work, their strengths, their limitations, and their real-world implications.

This article explores the evolution of language models, their architecture, applications, challenges, and ongoing efforts to improve understanding. By the end, you’ll have a comprehensive picture of how language models are shaping the present and future of AI.

The Evolution of Language Models

Language models have come a long way from simple statistical methods to today’s massive deep learning architectures.

- Early Statistical Models
  - Based on probability and frequency.
  - N-grams were widely used to predict the likelihood of a word given its predecessors.
  - Example: Predicting “cat” in the phrase “The black ___ sat on the mat”.
- Neural Network-Based Models
  - The introduction of Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) models improved sequential text prediction.
  - These models handled context better than n-grams but struggled with long-term dependencies.
- The Transformer Revolution
  - Introduced in 2017 by Vaswani et al. in the paper “Attention Is All You Need.”
  - Transformers rely on self-attention mechanisms, allowing models to capture long-range dependencies and context.
  - This breakthrough laid the foundation for models like BERT, GPT, T5, and PaLM.
- Large-Scale Pretrained Models
  - GPT-3, GPT-4, LLaMA, Claude, and Gemini represent the new wave of large language models (LLMs).
  - These models are pretrained on massive datasets and then fine-tuned for specific tasks.
  - They can generate essays, write code, translate languages, and even reason about problems.
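To make the starting point of this progression concrete, here is a minimal sketch of the n-gram idea using bigrams (n = 2). The three-sentence corpus and the function names `train_bigram` and `next_word_probs` are invented for illustration; a real statistical model would use far more text and some form of smoothing.

```python
from collections import Counter, defaultdict

def train_bigram(sentences):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Turn the raw follower counts for `prev` into probabilities."""
    followers = counts[prev.lower()]
    total = sum(followers.values())
    return {word: n / total for word, n in followers.items()} if total else {}

# Toy corpus, invented for illustration only.
corpus = [
    "the black cat sat on the mat",
    "the black cat slept",
    "the black dog barked",
]
model = train_bigram(corpus)
probs = next_word_probs(model, "black")
print(max(probs, key=probs.get))  # cat
```

Because the model only looks one word back, it cannot use anything earlier in the sentence, which is the limitation the later neural and Transformer approaches address.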

How Language Models Work

At their core, language models operate on the principle of predicting the next word in a sequence. But the mechanics are far more complex.

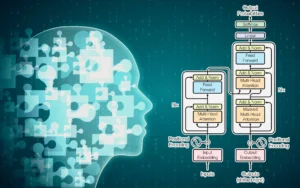

1. Tokenization

- Language is broken into smaller units called tokens (words, subwords, or characters).
- Example: “Understanding AI” → [“Understanding”, “AI”].
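The three granularities mentioned above can be sketched in a few lines. This is only an illustration: the `toy_vocab` set and the greedy longest-match rule in `subword_tokenize` are assumptions made for the example, not the algorithm of any particular production tokenizer.

```python
import re

def word_tokenize(text):
    """Split text into words and standalone punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)

def char_tokenize(text):
    """Treat every character as its own token."""
    return list(text)

def subword_tokenize(word, vocab):
    """Greedy longest-match split of one word into pieces from `vocab`.

    Characters not covered by any vocabulary piece fall back to
    single-character tokens.
    """
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        while end > start + 1 and word[start:end] not in vocab:
            end -= 1
        pieces.append(word[start:end])
        start = end
    return pieces

# Hypothetical vocabulary, chosen only to make the example readable.
toy_vocab = {"Understand", "ing", "AI"}

print(word_tokenize("Understanding AI"))             # ['Understanding', 'AI']
print(subword_tokenize("Understanding", toy_vocab))  # ['Understand', 'ing']
print(char_tokenize("AI"))                           # ['A', 'I']
```

Subword splitting is a common middle ground: it keeps the vocabulary small while still being able to represent words it has never seen as a sequence of known pieces.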

2. Embeddings

- Tokens are mapped into high-dimensional vector spaces, capturing semantic meaning.
- Words with similar meanings appear close in this space.
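"Close in this space" is usually measured with cosine similarity. The sketch below uses three hand-written, three-dimensional vectors purely as an assumption for illustration; learned embeddings typically have hundreds or thousands of dimensions and come from training, not from hand-picked numbers.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Made-up toy embeddings, not taken from any trained model.
embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}

cat_dog = cosine_similarity(embeddings["cat"], embeddings["dog"])
cat_car = cosine_similarity(embeddings["cat"], embeddings["car"])
print(f"cat vs dog: {cat_dog:.2f}")
print(f"cat vs car: {cat_car:.2f}")
```

With these toy values, "cat" and "dog" score much higher than "cat" and "car", which mirrors the intuition that related words sit near each other.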

3. Self-Attention

- The model determines which words in a sequence are most relevant to each other.
- Example: In “The cat sat on the mat because it was tired,” the word “it” refers to “cat.”
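A rough sketch of scaled dot-product self-attention shows how such relevance weights are computed. Two simplifications are assumed here: the token vectors are hand-picked so that "it" resembles "cat", and the same vectors serve as queries, keys, and values, whereas a real Transformer applies separate learned projections first.

```python
import math

def softmax(scores):
    """Convert raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

tokens = ["cat", "mat", "it"]
# Toy vectors, invented for illustration only.
vectors = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]

_, attention = self_attention(vectors, vectors, vectors)
for token, weight in zip(tokens, attention[2]):
    print(f"'it' attends to {token!r} with weight {weight:.2f}")
```

In this toy setup the largest weight in the row for "it" falls on "cat", because their vectors point in nearly the same direction.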

4. Training

- Models are trained on very large text corpora, adjusting billions of parameters to minimize prediction error.
- Loss functions (e.g., cross-entropy loss) measure the gap between the predicted probability distribution and the actual next token.
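For a single prediction, cross-entropy loss reduces to the negative log of the probability the model gave to the correct token. The sketch below illustrates that with two made-up probability distributions; the token names and numbers are assumptions for the example.

```python
import math

def cross_entropy(predicted_probs, target_token):
    """Negative log-probability assigned to the correct next token."""
    return -math.log(predicted_probs[target_token])

# Two hypothetical model outputs for the same context; the true token is "cat".
confident = {"cat": 0.7, "dog": 0.2, "car": 0.1}
unsure = {"cat": 0.2, "dog": 0.5, "car": 0.3}

print(f"confident model loss: {cross_entropy(confident, 'cat'):.3f}")
print(f"unsure model loss:    {cross_entropy(unsure, 'cat'):.3f}")
```

The loss shrinks toward zero as the probability of the correct token approaches 1, so minimizing it during training pushes the model to put more weight on the tokens that actually occur.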

5. Inference

- During generation, the model predicts one token at a time, sampling from probability distributions.
- Techniques like beam search, nucleus sampling, or temperature scaling control the trade-off between creativity and coherence.
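Temperature scaling and nucleus (top-p) sampling can be sketched on a toy set of scores. The `logits` values below are invented, and the helper names are hypothetical; beam search is left out to keep the example short.

```python
import math
import random

def apply_temperature(logits, temperature):
    """Softmax over logits divided by the temperature.

    Values below 1 sharpen the distribution; values above 1 flatten it.
    """
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def nucleus_filter(probs, top_p):
    """Keep the most likely tokens until their total probability reaches
    top_p, then renormalize the kept tokens."""
    kept, cumulative = {}, 0.0
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    for tok, p in ranked:
        kept[tok] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}

def sample(probs, rng):
    """Draw one token according to its probability."""
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]

# Hypothetical scores for the next token after "The cat sat on the".
logits = {"mat": 2.0, "sofa": 1.0, "moon": -1.0}

sharp = apply_temperature(logits, 0.5)
flat = apply_temperature(logits, 2.0)
nucleus = nucleus_filter(apply_temperature(logits, 1.0), top_p=0.9)

rng = random.Random(0)  # fixed seed so repeated runs behave the same
print(sorted(nucleus))  # ['mat', 'sofa']
print(sample(nucleus, rng))
```

Here the unlikely token "moon" is cut off by the top-p filter, while lowering the temperature concentrates more probability on "mat".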

Improved Understanding of Language Models

With models becoming larger and more powerful, researchers are focusing on interpretability, reliability, and alignment.

1. Interpretability

One challenge is that LLMs are often treated as “black boxes.” Improved understanding requires:

- Attention visualization: Tracking which words influence predictions.
- Neuron-level analysis: Discovering specialized neurons that capture grammar, facts, or sentiment.
- Feature attribution: Identifying why a model chose a particular output.
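One simple form of feature attribution is leave-one-out (occlusion): remove each input token in turn and record how much the output changes. The sketch below assumes a hypothetical `toy_sentiment_score` function with hand-set word weights standing in for a real model; it is one attribution technique among many, not the only or standard one.

```python
def toy_sentiment_score(tokens):
    """Hypothetical stand-in for a model: sums hand-set word weights."""
    weights = {"great": 2.0, "good": 1.0, "boring": -1.5, "terrible": -2.0}
    return sum(weights.get(tok.lower(), 0.0) for tok in tokens)

def leave_one_out_attribution(tokens, score_fn):
    """Attribute to each token the score change caused by removing it."""
    baseline = score_fn(tokens)
    attributions = []
    for i, tok in enumerate(tokens):
        without = tokens[:i] + tokens[i + 1:]
        attributions.append((tok, baseline - score_fn(without)))
    return attributions

tokens = "The plot was boring but the acting was great".split()
attributions = leave_one_out_attribution(tokens, toy_sentiment_score)
for tok, contribution in attributions:
    print(f"{tok:>8}  {contribution:+.1f}")
```

Tokens with large positive or negative contributions are the ones the scoring function depends on most, which is the kind of evidence attribution methods aim to surface for real models.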

2. Bias and Fairness

LLMs inherit biases from training data, which can lead to harmful outputs. Researchers work on:

- Debiasing algorithms.
- Careful curation of training datasets.
- Evaluating fairness across languages, genders, and cultures.

3. Explainability for Users

Improving understanding also means helping non-technical users trust AI outputs. For example, instead of just giving an answer, an AI could explain its reasoning or cite sources.

4. Knowledge Representation

Language models don’t “know” facts in the human sense but store patterns in parameters.

Efforts to improve understanding include:

- Probing tasks to measure how well models encode factual knowledge.
- Hybrid approaches that combine LLMs with external knowledge bases.
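A hybrid approach can be sketched as "look up relevant facts first, then hand them to the model in the prompt." The example below is a deliberately naive illustration: the word-overlap ranking, the prompt wording, and the function names `retrieve` and `build_prompt` are assumptions, and no actual model is called.

```python
def retrieve(question, knowledge_base, top_k=1):
    """Rank stored facts by how many words they share with the question."""
    question_words = set(question.lower().split())
    ranked = sorted(
        knowledge_base,
        key=lambda fact: len(question_words & set(fact.lower().split())),
        reverse=True,
    )
    return ranked[:top_k]

def build_prompt(question, facts):
    """Place the retrieved facts ahead of the question."""
    context = "\n".join(f"- {fact}" for fact in facts)
    return f"Use only these facts:\n{context}\n\nQuestion: {question}\nAnswer:"

# Tiny stand-in for an external knowledge base.
knowledge_base = [
    "The Transformer architecture was introduced in 2017.",
    "Cross-entropy loss compares predicted and actual tokens.",
]

question = "When was the Transformer architecture introduced?"
facts = retrieve(question, knowledge_base)
prompt = build_prompt(question, facts)
print(prompt)  # this text would then be sent to a language model
```

Real systems usually replace the word-overlap ranking with embedding-based search, but the overall shape (retrieve, then generate) is the same.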

Applications of Language Models

1. Conversational AI

- Chatbots and virtual assistants (Siri, Alexa, ChatGPT).
- Customer service automation.

2. Healthcare

- Medical transcription, summarization of patient records.
- Early diagnosis through analysis of patient-reported symptoms.

3. Education

- AI tutors that adapt explanations to student levels.
- Automated essay grading and personalized feedback.

4. Business and Productivity

- Drafting emails, reports, and presentations.
- Market research and trend prediction.

5. Programming

- Tools like GitHub Copilot generate code snippets.
- Debugging and optimization suggestions.

6. Creative Industries

- Content creation: blogs, stories, music lyrics.
- Game dialogue generation and virtual world-building.

Challenges and Limitations

Despite their power, LLMs face serious challenges.

1. Hallucinations

Models sometimes generate plausible-sounding but factually incorrect information.

2. Data Limitations

- Training datasets may include outdated or biased information.
- Sensitive data leaks are a concern.

3. Energy Consumption

Training large models consumes massive amounts of electricity and compute resources, raising sustainability concerns.

4. Ethical Concerns

- Use in misinformation, propaganda, or malicious code generation.
- Privacy risks from training on personal data.

5. Lack of True Understanding

LLMs don’t truly “understand” language the way humans do: they learn statistical patterns from text and are not conscious thinkers.

Recent Advances in Improving Understanding

1. Fine-Tuning and Alignment

- RLHF (Reinforcement Learning from Human Feedback) fine-tunes models toward responses that human raters prefer.
- Constitutional AI has the model critique and revise its own outputs against a written set of principles.
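One ingredient commonly used in RLHF pipelines is a reward model trained on pairs of responses where a human marked one as better. A typical pairwise objective is sketched below; the reward values are made-up numbers, and this covers only the preference loss, not the full reinforcement learning loop.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """Pairwise loss -log(sigmoid(r_chosen - r_rejected)).

    Small when the human-preferred response already scores higher,
    large when the reward model ranks the pair the wrong way round.
    """
    margin = reward_chosen - reward_rejected
    return math.log(1.0 + math.exp(-margin))

# Hypothetical reward-model scores for a preferred and a rejected response.
print(f"agrees with rater:    {preference_loss(2.0, 0.0):.3f}")
print(f"disagrees with rater: {preference_loss(0.0, 2.0):.3f}")
```

Minimizing this loss teaches the reward model to score preferred responses higher, and that learned score can then guide further fine-tuning of the language model.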

2. Multimodal Models

- Integration of text, images, audio, and video (e.g., GPT-4o, Gemini).
- Expands understanding beyond text.

3. Smaller Efficient Models

- Efforts like DistilBERT, Alpaca, and Mistral make models more accessible.
- Helps researchers study interpretability at smaller scales.

4. Tool-Augmented Models

- Integration with search engines, calculators, and APIs.
- Models can fetch real-time information instead of relying only on static training data.
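Tool use can be pictured as a small dispatch loop: the model emits a structured request, the surrounding program runs the tool, and the result is fed back. The `CALL tool: argument` text format and the `TOOLS` registry below are assumptions invented for this sketch; real systems define their own calling conventions.

```python
import ast
import operator

# Arithmetic operators the toy calculator is allowed to apply.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}

def calculator(expression):
    """Evaluate +, -, *, / on numbers without calling eval()."""
    def walk(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        raise ValueError("unsupported expression")
    return walk(ast.parse(expression, mode="eval").body)

TOOLS = {"calculator": calculator}

def handle_model_output(text):
    """Run a tool if the (hypothetical) model asked for one."""
    if text.startswith("CALL "):
        name, _, argument = text[len("CALL "):].partition(":")
        return str(TOOLS[name.strip()](argument.strip()))
    return text  # plain answer, no tool needed

print(handle_model_output("CALL calculator: 12 * (3 + 4)"))  # 84
```

Delegating arithmetic or lookups to a tool means the answer comes from an exact computation or a live source rather than from patterns memorized during training.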

5. Open-Source Research

- Projects like Hugging Face’s Transformers library democratize access.
- Enables collaborative understanding of model behavior.

The Future of Language Models

1. Towards AGI

Some researchers see LLMs as stepping stones toward Artificial General Intelligence (AGI), though this remains debated. Either way, better understanding is crucial for safe development.

2. Regulation and Governance

Governments are drafting AI regulations to ensure transparency, fairness, and accountability.

3. Human-AI Collaboration

Future models will serve as co-pilots in creativity, science, and decision-making rather than replacements.

4. Neuro-Symbolic Models

Combining statistical learning with symbolic reasoning may lead to more interpretable, trustworthy AI.

5. Causal Understanding

Next-generation models may go beyond correlation to develop causal reasoning abilities.

Final Thoughts

The improved understanding of language models is not just a technical necessity but also a societal imperative. These models influence how we learn, work, and communicate. They power everyday applications, from personal assistants to advanced medical diagnostics.

Yet, they also raise ethical, environmental, and philosophical questions. What does it mean for a machine to “understand” language? How do we prevent misuse while ensuring equitable benefits?

Ongoing research into interpretability, fairness, efficiency, and multimodality is making language models more transparent, responsible, and aligned with human needs. As we deepen our understanding, language models will evolve from powerful tools into trusted partners that augment human intelligence responsibly.
