Edge AI Hardware: Powering Intelligence at the Edge

Introduction

Artificial Intelligence (AI) has transformed nearly every aspect of modern technology, from cloud-based recommendation engines to virtual assistants. But in recent years, the demand for faster, localized, and energy-efficient AI processing has pushed innovation toward the edge of networks. Enter Edge AI Hardware—specialized devices designed to run AI workloads close to the data source, rather than relying solely on centralized cloud systems.

This shift is crucial in applications where latency, bandwidth, privacy, and reliability are non-negotiable. From autonomous vehicles and industrial automation to healthcare wearables and smart cameras, Edge AI hardware is redefining how we think about intelligence in connected devices.

In this article, we’ll explore what Edge AI hardware is, why it matters, its components, real-world applications, leading players, challenges, and what the future holds for this rapidly growing technology.

What is Edge AI Hardware?

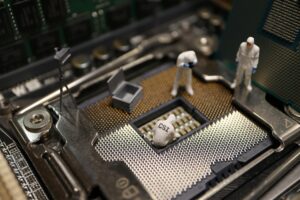

Edge AI hardware refers to processors, chips, and devices designed to run AI inference, and in some cases limited on-device learning, directly on edge devices such as smartphones, sensors, cameras, and IoT gateways, without sending all data back to the cloud.

Unlike traditional AI systems that depend heavily on centralized servers, edge AI hardware integrates low-power computing, specialized neural accelerators, and optimized architectures to process data in real time.

Key Characteristics

- Low Latency: Enables instant decisions, critical in autonomous driving or robotic control.
- Energy Efficiency: Optimized for low power consumption, enabling continuous AI processing in mobile or IoT devices.
- On-Device Intelligence: Reduces reliance on cloud connectivity.
- Scalability: Supports large deployments across thousands of sensors and devices.
- Privacy & Security: Sensitive data stays on the device, minimizing risks.

Why Edge AI Hardware Matters

The traditional cloud-based AI model is powerful but comes with limitations:

- Latency Issues: Sending data back and forth to cloud servers introduces delays.
- High Bandwidth Costs: Constant data transmission strains networks.
- Privacy Concerns: Sensitive personal or industrial data may be exposed.
- Unreliable Connectivity: Cloud reliance falters in remote or mission-critical environments.

Edge AI hardware solves these issues by enabling real-time, secure, and cost-effective AI computation at the source of data.
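To make the latency and bandwidth points concrete, here is a rough back-of-the-envelope sketch. The function names and every number in it (payload size, link speed, round-trip time, inference times, stream bitrate) are illustrative assumptions, not measurements from any real device or network.

```python
def cloud_latency_ms(payload_kb, uplink_mbps, rtt_ms, server_infer_ms):
    """Estimate end-to-end latency when a frame is sent to the cloud for inference."""
    # kilobytes * 8 = kilobits; 1 Mbps moves 1 kilobit per millisecond
    upload_ms = payload_kb * 8 / uplink_mbps
    return upload_ms + rtt_ms + server_infer_ms


def edge_latency_ms(device_infer_ms):
    """On-device inference has no network leg, only the local compute time."""
    return device_infer_ms


def monthly_upload_gb(stream_mbps, hours_per_day=24, days=30):
    """Data volume for continuously streaming raw video to the cloud."""
    seconds = hours_per_day * 3600 * days
    megabits = stream_mbps * seconds
    return megabits / 8 / 1000  # megabits -> megabytes -> gigabytes


if __name__ == "__main__":
    # Assumed scenario: 200 KB frame, 10 Mbps uplink, 40 ms round trip
    print("cloud:", cloud_latency_ms(200, 10, 40, 15), "ms")
    print("edge: ", edge_latency_ms(30), "ms")
    print("raw 4 Mbps stream:", monthly_upload_gb(4), "GB per month")
```

With these made-up inputs the cloud path comes to 215 ms against 30 ms on-device, and a single always-on 4 Mbps camera uploads roughly 1.3 TB a month. Real figures vary widely, but the structure of the calculation shows why the network leg tends to dominate.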

Core Components of Edge AI Hardware

Edge AI systems are powered by a mix of specialized and general-purpose components:

1. CPUs (Central Processing Units)

General-purpose CPUs remain the backbone of most edge devices. They handle control logic and everyday tasks, though they are less optimized for deep learning workloads than dedicated accelerators.

2. GPUs (Graphics Processing Units)

GPUs excel at parallel computing, making them effective for AI inference. Many edge devices integrate compact, power-efficient GPUs for AI acceleration.

3. NPUs (Neural Processing Units)

NPUs are AI-specific accelerators optimized for neural network computations. These chips dramatically improve inference speeds while consuming less power.

4. TPUs (Tensor Processing Units)

Originally developed by Google, TPUs are designed for tensor operations common in AI workloads. Some TPU variants are optimized for edge deployments.

5. FPGAs (Field-Programmable Gate Arrays)

FPGAs provide flexible, reconfigurable AI acceleration, often used in industrial and defense applications where customization is vital.

6. ASICs (Application-Specific Integrated Circuits)

ASICs are highly optimized, fixed-function chips designed for maximum efficiency in specific AI tasks (e.g., Google Edge TPU).

7. Memory & Storage

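Edge AI hardware requires fast, low-latency memory to support real-time inference. LPDDR5 is a common choice in mobile and embedded designs, while HBM appears mainly in higher-power accelerators. On-device storage holds models and data so that processing can stay local.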
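Memory is often the first limit an edge model runs into. The sketch below estimates how much space a model's weights need at different numeric precisions. The helper names and the 8 MiB budget are assumptions for illustration, and the estimate covers weights only, not activations or runtime overhead.

```python
# Bytes needed to store one parameter at each precision
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weights_mib(num_params, dtype):
    """Approximate size of the model weights in MiB for a given precision."""
    return num_params * BYTES_PER_PARAM[dtype] / (1024 ** 2)


def fits_in_memory(num_params, dtype, budget_mib):
    """True if the weights alone fit inside the given memory budget."""
    return weights_mib(num_params, dtype) <= budget_mib


if __name__ == "__main__":
    params = 5_000_000   # hypothetical 5M-parameter vision model
    budget = 8           # hypothetical 8 MiB available for weights
    for dtype in BYTES_PER_PARAM:
        size = weights_mib(params, dtype)
        verdict = "fits" if fits_in_memory(params, dtype, budget) else "too large"
        print(f"{dtype}: {size:.2f} MiB ({verdict})")
```

Under these assumptions the same model that overflows the budget in float32 fits comfortably in int8, which is one reason low-precision formats are so common on edge accelerators.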

8. Connectivity Modules

Support for Wi-Fi, 5G, and edge-to-cloud integration ensures devices can still sync with broader AI ecosystems when needed.
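One common way to "sync when needed" is a store-and-forward buffer: the device keeps inference results locally and uploads them once a link is available. The following is a minimal sketch of that idea. The class name, the `send` callback, and the `is_online` check are hypothetical stand-ins for whatever transport a real device would use.

```python
from collections import deque


class StoreAndForwardBuffer:
    """Holds results locally and uploads them when connectivity returns."""

    def __init__(self, capacity=1000):
        # Bounded queue: when full, the oldest result is dropped first
        self.pending = deque(maxlen=capacity)

    def record(self, result):
        """Save a result produced by on-device inference."""
        self.pending.append(result)

    def flush(self, send, is_online):
        """Send pending results in order while online; return how many were sent."""
        sent = 0
        while self.pending and is_online():
            item = self.pending[0]
            if not send(item):      # keep the item if the upload failed
                break
            self.pending.popleft()
            sent += 1
        return sent


if __name__ == "__main__":
    buf = StoreAndForwardBuffer(capacity=100)
    buf.record({"event": "person_detected", "score": 0.91})
    uploaded = []
    # Stand-in transport: append to a list and report success
    count = buf.flush(lambda r: uploaded.append(r) or True, lambda: True)
    print(count, "result(s) uploaded")
```

Dropping the oldest entries when the buffer fills is only one possible policy. A device logging safety events might prefer to block or to spill to flash storage instead.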

Examples of Edge AI Hardware in Action

1. Smartphones

Modern smartphones, such as those with Apple’s Neural Engine or Qualcomm’s Hexagon DSP, include built-in AI accelerators to power real-time photography enhancements, speech recognition, and AR experiences.

2. Autonomous Vehicles

Cars use NVIDIA DRIVE chips and similar processors to process sensor data locally for navigation, object detection, and safety-critical decisions.

3. Healthcare Devices

Wearables like smartwatches use on-device AI to detect heart rhythm anomalies, track activity, and flag potential health risks, often without depending on the cloud.

4. Surveillance Cameras

AI-powered CCTV cameras use on-device inference to detect unusual activity, recognize faces, or trigger alerts in real time.

5. Industrial IoT

Factories employ edge AI hardware for predictive maintenance, anomaly detection, and robotics control without requiring constant cloud connectivity.
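As a rough illustration of what on-device anomaly detection can look like, here is a small rolling z-score detector for a single sensor stream. It is a deliberately simple statistical stand-in, not how any particular product works. The class name, window size, and threshold are assumptions chosen for the example.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flags readings that deviate strongly from a sliding window of recent values."""

    def __init__(self, window=50, threshold=3.0, min_samples=10):
        self.history = deque(maxlen=window)
        self.threshold = threshold      # number of standard deviations
        self.min_samples = min_samples  # readings needed before judging

    def update(self, value):
        """Process one reading; return True if it looks anomalous."""
        is_anomaly = False
        if len(self.history) >= self.min_samples:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        # Learn only from normal readings so a fault does not shift the baseline
        if not is_anomaly:
            self.history.append(value)
        return is_anomaly


if __name__ == "__main__":
    detector = RollingAnomalyDetector(window=20, threshold=3.0, min_samples=5)
    vibration = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0, 5.0]  # made-up readings
    for reading in vibration:
        if detector.update(reading):
            print("anomaly:", reading)
```

Everything here runs in constant memory on the device, so only the flagged events, rather than the full sensor stream, would need to leave the factory floor.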

Leading Players in Edge AI Hardware

Several companies are at the forefront of edge AI innovation:

- NVIDIA: Jetson series for robotics, drones, and industrial AI.
- Google: Edge TPU for IoT and embedded AI applications.
- Intel: Movidius VPU (Vision Processing Unit) for computer vision tasks.
- Qualcomm: Snapdragon AI Engine for smartphones and wearables.
- Apple: Neural Engine integrated in A-series and M-series chips.
- ARM: Provides AI-optimized architectures widely used in edge processors.
- Hailo & Mythic: Startups creating ultra-efficient edge AI accelerators.

Advantages of Edge AI Hardware

- Ultra-low Latency: Critical in autonomous driving, drones, and robotics.
- Reduced Costs: Saves bandwidth and cloud processing costs.
- Enhanced Privacy: Sensitive data remains on-device.
- Energy Efficiency: Optimized for power-constrained environments.
- Reliability: Works even with intermittent or no connectivity.
- Scalability: Easier deployment across massive IoT ecosystems.

Challenges in Edge AI Hardware

While promising, edge AI hardware still faces hurdles:

- Limited Processing Power: Edge chips offer far less compute than cloud GPUs/TPUs.
- Heat Dissipation: High-performance chips in small devices face thermal limits.
- Software Ecosystem Fragmentation: No universal framework exists, so development is often tied to each vendor's toolchain.
- Security Risks: Devices at the edge are vulnerable to physical and cyberattacks.
- Update & Maintenance: Managing firmware/software across thousands of devices is complex.

Edge AI Hardware Across Industries

1. Healthcare

AI-powered diagnostic devices, portable imaging tools, and remote patient monitoring systems rely on edge hardware for fast, secure analysis.

2. Automotive

Autonomous driving, ADAS (Advanced Driver-Assistance Systems), and in-cabin monitoring depend on edge processors to ensure passenger safety.

3. Retail

Smart shelves, cashier-less checkout systems, and personalized recommendations in stores use edge-based vision and analytics.

4. Agriculture

Drones and smart sensors equipped with AI hardware help detect crop diseases, monitor soil health, and optimize irrigation.

5. Smart Cities

Traffic lights, surveillance, and energy systems incorporate edge AI hardware to improve efficiency and safety.

6. Manufacturing

Predictive maintenance, defect detection, and robotic automation thrive on real-time processing at the edge.

Future of Edge AI Hardware

The future of edge AI hardware will be defined by several trends:

- 5G Integration: Faster connectivity will enhance hybrid edge-cloud AI.
- Energy-Harvesting Chips: Ultra-low-power hardware running on renewable or ambient energy.
- TinyML Growth: Running machine learning models on microcontrollers for ultra-small devices.
- Quantum Edge AI (Long-Term): Exploration of quantum-inspired architectures at the edge.
- Federated Learning: On-device training in which raw data stays on the device and only model updates are shared, boosting privacy.
- AI at the Sensor Level: Chips embedded directly into sensors, eliminating intermediate devices.
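The federated learning trend is easier to picture with a small example. The sketch below shows the aggregation step of federated averaging, a widely used approach in which each device trains locally and a coordinator combines the resulting weights, weighted by how many samples each device saw. The function name and the toy weight vectors are assumptions for illustration. Real systems add secure aggregation, compression, and many training rounds.

```python
def federated_average(client_updates):
    """Combine locally trained weights into one global model.

    client_updates: list of (weights, num_samples) pairs, where weights is a
    list of floats and num_samples is how much local data produced them.
    """
    total_samples = sum(n for _, n in client_updates)
    if total_samples == 0:
        raise ValueError("no training samples reported by any client")

    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            # Clients with more local data contribute proportionally more
            averaged[i] += w * n / total_samples
    return averaged


if __name__ == "__main__":
    # Two hypothetical devices; only these weight vectors leave each device
    updates = [
        ([1.0, 2.0], 1),   # device A trained on 1 sample
        ([3.0, 4.0], 3),   # device B trained on 3 samples
    ]
    print(federated_average(updates))
```

Note that the coordinator never sees the raw sensor or user data, only the weight vectors, which is where the privacy benefit comes from.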

Conclusion

Edge AI hardware is rapidly emerging as the backbone of next-generation intelligent systems. By bringing computation closer to the data source, it unlocks real-time performance, enhances privacy, and reduces reliance on costly cloud resources. From healthcare and automotive to retail and smart cities, its impact is profound and growing.

As hardware continues to evolve—driven by innovations in NPUs, ASICs, and ultra-low-power chips—the line between the cloud and the edge will blur. The future will be a hybrid world where intelligence flows seamlessly across devices, edge nodes, and cloud platforms, enabling smarter, safer, and more efficient societies.
