Decision Tree: A Complete Guide to One of the Most Powerful Algorithms in Machine Learning


Introduction

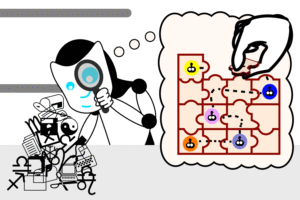

When we talk about machine learning algorithms, one of the simplest yet most powerful models that often comes up is the Decision Tree. Imagine you are deciding whether to go outside today. You might ask yourself questions like: Is it raining? Do I have an umbrella? Is it cold? Depending on the answers, you’ll arrive at a final decision—whether to go outside or stay indoors.

This process of asking questions and branching out based on answers is exactly what a decision tree does. It is a supervised learning algorithm used for both classification and regression tasks. Due to its simplicity, interpretability, and ability to mimic human reasoning, decision trees have become a fundamental part of data science and artificial intelligence.

In this article, we’ll explore everything about decision trees—how they work, their structure, advantages, disadvantages, real-world applications, and why they continue to play a vital role in AI.

What is a Decision Tree?

A Decision Tree is a tree-structured algorithm where:

- Each internal node represents a decision based on a feature (e.g., “Is age > 18?”).
- Each branch represents the outcome of that decision (Yes/No).
- Each leaf node represents the final outcome or prediction (e.g., “Approve loan” or “Deny loan”).

It looks like an inverted tree where the root node is at the top and the branches flow downward.

For example:

- Root node: “Does the customer have good credit history?”
  - If YES → Check income.
    - If income is high → Approve loan.
    - If income is low → Reject loan.
  - If NO → Reject loan.

This structure makes decision trees highly interpretable—almost like following a flowchart.
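
The loan example above can be written as plain nested conditions, which is one way to see why a tree reads like a flowchart. This is a minimal sketch: the function name `decide_loan` and its two boolean parameters are illustrative assumptions, not part of any library.

```python
def decide_loan(good_credit_history: bool, income_is_high: bool) -> str:
    """Walk the example tree from the root down to a leaf."""
    # Root node: "Does the customer have good credit history?"
    if not good_credit_history:
        return "Reject loan"
    # Internal node: check income
    if income_is_high:
        return "Approve loan"
    return "Reject loan"


print(decide_loan(True, True))
print(decide_loan(True, False))
print(decide_loan(False, True))
```

Each `if` corresponds to an internal node, and each `return` corresponds to a leaf.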

Types of Decision Trees

Decision trees are not one-size-fits-all; there are different types depending on the task:

- Classification Trees
  - Used when the output is categorical (Yes/No, Spam/Not Spam, Fraud/Not Fraud).
  - Example: Predicting whether a student passes or fails an exam.
- Regression Trees
  - Used when the output is continuous (e.g., predicting salary, house price, temperature).
  - Example: Estimating the price of a car based on mileage and age.
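
One practical difference between the two types is what a leaf returns. In the usual setup, a classification leaf predicts the most common class among its training samples, while a regression leaf predicts their mean. The sketch below illustrates this with made-up values; the function names are hypothetical.

```python
from collections import Counter
from statistics import mean


def classification_leaf(labels):
    """Predict the most common class among the samples in a leaf."""
    return Counter(labels).most_common(1)[0][0]


def regression_leaf(values):
    """Predict the mean target value of the samples in a leaf."""
    return mean(values)


# Hypothetical samples that ended up in the same leaf
print(classification_leaf(["Pass", "Fail", "Pass"]))
print(regression_leaf([12000, 15000, 18000]))
```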

How Does a Decision Tree Work?

The key idea behind decision trees is recursive partitioning—splitting data into subsets based on certain conditions until the outcome is decided.

Steps Involved:

1. Choose the best feature to split the data.
   - Splitting criteria such as Gini Impurity, Entropy (Information Gain), or Variance Reduction score each candidate split; the feature with the best score is chosen.
2. Create a node for that feature.
   - Example: Split based on “Age > 30.”
3. Split the dataset into subsets.
   - One subset for Age > 30 and one for Age ≤ 30.
4. Repeat recursively.
   - Each subset is further split until we reach leaf nodes (final outcomes).
5. Stop when:
   - All samples at a node belong to the same class.
   - Maximum tree depth is reached.
   - No further split improves the model.
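
The steps above can be sketched in a few dozen lines of plain Python. This is a simplified illustration, not how any particular library implements it: it assumes numeric features, uses Gini impurity as the criterion, tries only observed values as thresholds, and stores the tree as nested dictionaries. The toy data and all function names are assumptions made for the example.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(rows, labels):
    """Step 1: find the (feature, threshold) pair with the lowest weighted Gini.

    Returns None when no candidate split is purer than the unsplit node.
    """
    best, best_score = None, gini(labels)
    for feature in range(len(rows[0])):
        for threshold in sorted({row[feature] for row in rows}):
            left = [y for row, y in zip(rows, labels) if row[feature] <= threshold]
            right = [y for row, y in zip(rows, labels) if row[feature] > threshold]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(rows)
            if score < best_score:
                best, best_score = (feature, threshold), score
    return best


def build_tree(rows, labels, depth=0, max_depth=3):
    """Steps 2-5: create a node, split the data, recurse, and stop when needed."""
    majority = Counter(labels).most_common(1)[0][0]
    # Stop: all samples share one class, or maximum depth is reached
    if len(set(labels)) == 1 or depth >= max_depth:
        return majority
    split = best_split(rows, labels)
    # Stop: no further split improves the node
    if split is None:
        return majority
    feature, threshold = split
    left = [(row, y) for row, y in zip(rows, labels) if row[feature] <= threshold]
    right = [(row, y) for row, y in zip(rows, labels) if row[feature] > threshold]
    return {
        "feature": feature,
        "threshold": threshold,
        "left": build_tree([r for r, _ in left], [y for _, y in left], depth + 1, max_depth),
        "right": build_tree([r for r, _ in right], [y for _, y in right], depth + 1, max_depth),
    }


def predict(tree, row):
    """Follow the branches until a leaf (a plain label) is reached."""
    while isinstance(tree, dict):
        side = "left" if row[tree["feature"]] <= tree["threshold"] else "right"
        tree = tree[side]
    return tree


# Hypothetical toy data: (age, income in thousands) -> label
rows = [(25, 30), (45, 80), (35, 60), (22, 20), (50, 90), (28, 40)]
labels = ["No", "Yes", "Yes", "No", "Yes", "No"]
tree = build_tree(rows, labels)
print(tree)
print(predict(tree, (40, 50)))
```

On this toy data a single split on age separates the two classes, so the recursion stops after one level.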

Key Concepts in Decision Trees

1. Gini Impurity

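- Measures how often a randomly chosen sample from a node would be incorrectly classified if it were labeled at random according to the class distribution at that node.
- A lower Gini value means a purer node; a value of 0 means every sample in the node belongs to the same class.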
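
For class proportions p_k at a node, Gini impurity is 1 − Σ p_k². A minimal sketch of that formula follows; the function name `gini_impurity` is chosen for illustration.

```python
from collections import Counter


def gini_impurity(labels):
    """Gini = 1 - sum(p_k^2) over the class proportions p_k at a node."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


print(gini_impurity(["Yes"] * 4))                 # 0.0 (pure node)
print(gini_impurity(["Yes", "Yes", "No", "No"]))  # 0.5 (evenly mixed, two classes)
```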

2. Entropy & Information Gain

- Entropy measures randomness in data.
- Information Gain measures reduction in entropy after splitting.
- Decision trees try to maximize information gain.
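
In formula form, entropy is H = Σ p_k · log₂(1 / p_k), and information gain is the parent's entropy minus the size-weighted entropy of the child nodes. The sketch below computes both for a hypothetical split; the function names are illustrative.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    probabilities = [count / n for count in Counter(labels).values()]
    return sum(p * log2(1 / p) for p in probabilities)


def information_gain(parent, children):
    """Entropy of the parent minus the size-weighted entropy of its children."""
    n = len(parent)
    weighted = sum(len(child) / n * entropy(child) for child in children)
    return entropy(parent) - weighted


parent = ["Yes", "Yes", "No", "No"]
perfect_split = [["Yes", "Yes"], ["No", "No"]]
useless_split = [["Yes", "No"], ["Yes", "No"]]

print(information_gain(parent, perfect_split))  # 1.0: the split removes all uncertainty
print(information_gain(parent, useless_split))  # 0.0: the split tells us nothing
```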

3. Overfitting and Pruning

- A tree that grows too deep can memorize training data (overfitting).
- Pruning techniques (pre-pruning and post-pruning) reduce complexity by removing unnecessary branches.

Advantages of Decision Trees

- Easy to Interpret – The tree structure is simple and human-readable.
- Handles Both Types of Data – Works with categorical and numerical data.
- No Feature Scaling Needed – Unlike SVM or KNN, decision trees don’t require normalization.
- Nonlinear Relationships – Captures complex patterns in data.
- Tolerant of Missing Data – Some implementations can handle incomplete values during splits (for example, through surrogate splits), while others require imputation first.
- Versatile – Can be used for classification and regression tasks.

Disadvantages of Decision Trees

- Overfitting – Trees can become too specific to training data.
- Instability – Small changes in data can drastically change the tree structure.
- Biased Splits – Features with more categories tend to dominate splits.
- Not Always Optimal – Trees may not perform as well as ensemble methods.

Techniques to Improve Decision Trees

- Random Forest – Combines multiple decision trees to reduce overfitting and improve accuracy.
- Gradient Boosting – Builds trees sequentially, each correcting the errors of the previous one.
- XGBoost / LightGBM / CatBoost – Advanced boosting algorithms that enhance decision tree performance.
- Feature Engineering – Transforming data (e.g., encoding categorical variables) improves splits.
- Regularization – Restricting tree depth, minimum samples per leaf, or using pruning prevents over-complexity.
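
To make the ensemble idea concrete, here is a rough sketch of the voting step used when several trees are combined for classification. It is not a Random Forest implementation: the three "trees" are hypothetical one-rule stand-ins, and a real forest would also train each tree on a bootstrap sample with random feature subsets.

```python
from collections import Counter


def majority_vote(predictions):
    """Return the class predicted by the largest number of trees."""
    return Counter(predictions).most_common(1)[0][0]


# Hypothetical stand-ins for three trained trees, each a one-rule stump
trees = [
    lambda sample: "Yes" if sample["income"] > 50 else "No",
    lambda sample: "Yes" if sample["age"] > 30 else "No",
    lambda sample: "Yes" if sample["good_credit"] else "No",
]


def ensemble_predict(sample):
    """Ask every tree for a prediction, then take the majority class."""
    return majority_vote([tree(sample) for tree in trees])


applicant = {"income": 65, "age": 27, "good_credit": True}
print(ensemble_predict(applicant))
```

Because the final answer depends on several trees rather than one, a single tree that overfits has less influence on the result.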

Real-World Applications of Decision Trees

Decision trees are widely used across industries because they are intuitive and efficient.

1. Finance

- Credit scoring and loan approval.
- Fraud detection.

2. Healthcare

- Diagnosing diseases based on symptoms.
- Predicting patient survival rates.

3. Marketing

- Customer segmentation.
- Predicting churn (whether a customer will leave).

4. Retail & E-commerce

- Recommendation engines.
- Predicting demand for products.

5. Manufacturing

- Quality control.
- Predictive maintenance.

6. Education

- Student performance prediction.
- Personalized learning systems.

Decision Tree vs Other Algorithms

| Aspect | Decision Tree | Logistic Regression | Neural Networks | Random Forest |
|---|---|---|---|---|
| Interpretability | High | Medium | Low | Medium |
| Speed | Fast | Fast | Slower | Slower |
| Overfitting Risk | High (if deep) | Medium | High | Low |
| Accuracy | Medium | Medium | High | High |
| Use Case | Simple, explainable models | Binary classification | Complex tasks (vision, NLP) | Robust predictions |

Building a Decision Tree: Step-by-Step Example

Let’s say we want to predict whether a person will play tennis based on weather conditions.

Dataset:

| Outlook | Temperature | Humidity | Wind | Play Tennis |
|---|---|---|---|---|
| Sunny | Hot | High | Weak | No |
| Sunny | Hot | High | Strong | No |
| Overcast | Hot | High | Weak | Yes |
| Rain | Mild | High | Weak | Yes |
| Rain | Cool | Normal | Weak | Yes |
| Rain | Cool | Normal | Strong | No |
| Overcast | Cool | Normal | Strong | Yes |
| Sunny | Mild | High | Weak | No |
| Sunny | Cool | Normal | Weak | Yes |
| Rain | Mild | Normal | Weak | Yes |

Step 1: Calculate Entropy of Target Variable

- Entropy measures randomness.
- For “Play Tennis” → Yes = 6, No = 4, so the entropy is −(0.6 · log₂ 0.6 + 0.4 · log₂ 0.4) ≈ 0.971 bits.

Step 2: Choose the Attribute with Highest Information Gain

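- Outlook provides the best split (Sunny, Overcast, Rain): its information gain is about 0.322, compared with roughly 0.125 for Humidity, 0.095 for Temperature, and 0.091 for Wind.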
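
Steps 1 and 2 can be checked directly against the table. The sketch below recomputes the target entropy and the information gain of each attribute; the variable and function names are illustrative, and the rows are copied from the dataset above.

```python
from collections import Counter, defaultdict
from math import log2

COLUMNS = ["Outlook", "Temperature", "Humidity", "Wind"]
# Each row: Outlook, Temperature, Humidity, Wind, Play Tennis
DATA = [
    ("Sunny", "Hot", "High", "Weak", "No"),
    ("Sunny", "Hot", "High", "Strong", "No"),
    ("Overcast", "Hot", "High", "Weak", "Yes"),
    ("Rain", "Mild", "High", "Weak", "Yes"),
    ("Rain", "Cool", "Normal", "Weak", "Yes"),
    ("Rain", "Cool", "Normal", "Strong", "No"),
    ("Overcast", "Cool", "Normal", "Strong", "Yes"),
    ("Sunny", "Mild", "High", "Weak", "No"),
    ("Sunny", "Cool", "Normal", "Weak", "Yes"),
    ("Rain", "Mild", "Normal", "Weak", "Yes"),
]


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return sum((c / n) * log2(n / c) for c in Counter(labels).values())


def information_gain(rows, column_index):
    """Target entropy minus the weighted entropy after grouping by one attribute."""
    labels = [row[-1] for row in rows]
    groups = defaultdict(list)
    for row in rows:
        groups[row[column_index]].append(row[-1])
    remainder = sum(len(g) / len(rows) * entropy(g) for g in groups.values())
    return entropy(labels) - remainder


target_entropy = entropy([row[-1] for row in DATA])
gains = {name: information_gain(DATA, i) for i, name in enumerate(COLUMNS)}

print(f"Entropy of Play Tennis: {target_entropy:.3f}")
for name, gain in sorted(gains.items(), key=lambda item: -item[1]):
    print(f"{name:12s} {gain:.3f}")
```

Sorting by gain puts Outlook first, which is why it becomes the root of the tree.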

Step 3: Build Tree Recursively

- If Outlook = Overcast → Always Yes.
- If Outlook = Rain → Depends on Wind.
- If Outlook = Sunny → Depends on Humidity.

Final Decision Tree:

- Outlook
  - Overcast → Yes
  - Rain → (Wind = Weak → Yes, Strong → No)
  - Sunny → (Humidity = High → No, Normal → Yes)

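This simple tree correctly classifies all ten rows in the table above. That is accuracy on the training data only; how well it predicts new days would have to be checked on examples the tree has not seen.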
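
The final tree is small enough to write down as a nested dictionary and test against the dataset. This is a sketch under the assumption that every sample uses only the attribute values shown in the table; the `TREE` layout and the `predict` helper are illustrative choices.

```python
TREE = {
    "attribute": "Outlook",
    "branches": {
        "Overcast": "Yes",
        "Rain": {"attribute": "Wind", "branches": {"Weak": "Yes", "Strong": "No"}},
        "Sunny": {"attribute": "Humidity", "branches": {"High": "No", "Normal": "Yes"}},
    },
}

COLUMNS = ["Outlook", "Temperature", "Humidity", "Wind"]
# Each row: Outlook, Temperature, Humidity, Wind, Play Tennis
DATA = [
    ("Sunny", "Hot", "High", "Weak", "No"),
    ("Sunny", "Hot", "High", "Strong", "No"),
    ("Overcast", "Hot", "High", "Weak", "Yes"),
    ("Rain", "Mild", "High", "Weak", "Yes"),
    ("Rain", "Cool", "Normal", "Weak", "Yes"),
    ("Rain", "Cool", "Normal", "Strong", "No"),
    ("Overcast", "Cool", "Normal", "Strong", "Yes"),
    ("Sunny", "Mild", "High", "Weak", "No"),
    ("Sunny", "Cool", "Normal", "Weak", "Yes"),
    ("Rain", "Mild", "Normal", "Weak", "Yes"),
]


def predict(tree, sample):
    """Follow the branch matching the sample's value until a leaf label is reached."""
    while isinstance(tree, dict):
        tree = tree["branches"][sample[tree["attribute"]]]
    return tree


# Count how many rows of the table the tree reproduces
correct = 0
for row in DATA:
    sample = dict(zip(COLUMNS, row[:-1]))
    if predict(TREE, sample) == row[-1]:
        correct += 1

print(f"{correct} of {len(DATA)} training rows classified correctly")
```

Note that Temperature never appears in the tree: the other three attributes are enough to separate this particular dataset.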

Tools and Libraries for Decision Trees

- Python Libraries
  - scikit-learn (DecisionTreeClassifier, DecisionTreeRegressor)
  - XGBoost, LightGBM, CatBoost
- Visualization Tools
  - Graphviz
  - Matplotlib / Seaborn
- Business Tools
  - SAS Enterprise Miner
  - RapidMiner
  - KNIME

Future of Decision Trees in AI

While deep learning often steals the spotlight today, decision trees remain crucial because:

- They form the backbone of ensemble methods like Random Forest and Gradient Boosting.
- They are explainable, which is essential in responsible AI and regulatory environments (finance, healthcare).
- They work well with tabular data, where deep learning is not always superior.

As AI continues to evolve, decision trees will stay relevant—especially in applications where transparency and interpretability are non-negotiable.

Final Thoughts

The Decision Tree is a timeless algorithm in machine learning. Its power lies in its simplicity, interpretability, and versatility. From predicting medical outcomes to driving financial decisions, decision trees serve as the foundation for some of the most advanced AI models we use today.

Whether you are a beginner exploring data science or a professional building advanced AI systems, understanding decision trees is essential. They are not just an academic concept but a real-world tool shaping industries, businesses, and everyday life.
