MoonshotAI Released Checkpoint-Engine: Fast, Lightweight Middleware to Update LLM Weights

Published: September 2025

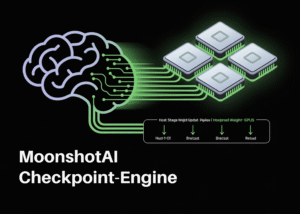

MoonshotAI has open-sourced checkpoint-engine, a lightweight middleware designed to update model weights in LLM inference fleets quickly and with minimal disruption. The capability is aimed squarely at making reinforcement-learning fine-tuning (and other dynamic-update workflows) practical at massive scale. This article explains what checkpoint-engine does, why it matters for large models (including Moonshot’s own Kimi K2), how it works at a high level, its use cases, and the operational and research implications for teams deploying LLMs. (Sources: Reuters, GitHub, MarkTechPost)

Why updating weights in production is harder than it sounds

For small models or single-server setups, replacing model checkpoints is straightforward: stop the process, swap files, restart. But modern LLM deployments are rarely that forgiving:

- Large models are sharded across many GPUs and many machines; replacing weights means synchronizing huge tensors across a distributed system.
- High-availability inference services cannot simply stop serving: downtime costs money and user trust.
- Reinforcement learning and other online training workflows may require frequent, small updates to weights (or entire new checkpoints) while inference continues.

Those constraints make “safe, fast, in-place weight updates” a systems engineering challenge: you need low latency, minimal memory copying, atomic behaviour across many devices, and low disruption to inference throughput. MoonshotAI’s checkpoint-engine tackles precisely these pain points. (Source: GitHub)

What is checkpoint-engine?

checkpoint-engine is an open-source, lightweight middleware released by MoonshotAI that provides mechanisms to update model weights inside LLM inference engines with minimal interruption. It is intended to sit between model storage/checkpoint artifacts and the serving layer, coordinating how new weight data is transmitted and swapped into memory across a large GPU cluster. The project is available on GitHub under the permissive MIT license, with documentation and examples. (Source: GitHub)

Key claims from MoonshotAI and reporting:

- It can update a 1-trillion-parameter model (Moonshot’s Kimi-K2) across thousands of GPUs in roughly 20 seconds. (Source: GitHub)
- The middleware supports at least two update strategies: Broadcast (a synchronous, coordinated update across replicas) and P2P (peer-to-peer), which is useful when instances are added dynamically. (Source: MarkTechPost)

These are ambitious performance numbers. The project repo and community posts provide technical notes and benchmarks that you can inspect if you plan to adopt it. (Source: GitHub)

How it works — high level (no heavy math)

Checkpoint-engine focuses on systems optimizations rather than novel training algorithms. At a high level:

- In-place weight updates: instead of saving a new checkpoint to disk and restarting processes, checkpoint-engine coordinates an in-memory swap. The engine transfers new tensor values into the exact memory regions used by the running inference processes, so the model continues to serve with updated weights. This reduces copying and restart overhead. (Source: GitHub)
- Efficient tensor transfer: the implementation leverages fast inter-process and inter-GPU transfer mechanisms (e.g., CUDA IPC and optimized network transfers) to move tensor shards quickly between machines and GPUs. That is how Moonshot claims extreme speed even for trillion-parameter models. (Source: moonshotai.github.io)
- Update protocols: the middleware exposes different patterns, namely a coordinated broadcast for synchronous fleets where all replicas must match, and P2P modes for rolling updates or when nodes join or leave dynamically. These modes let operators balance consistency, latency, and compatibility with dynamic clusters. (Source: MarkTechPost)
- Lightweight orchestration: checkpoint-engine intentionally keeps orchestration simple. The repo emphasizes a compact codebase and pragmatic integration points for existing inference stacks, which lowers adoption friction for teams already using common open-source inference engines. (Source: GitHub)

In short: it’s a network + memory orchestration tool tailored to the problem of swapping huge weight tensors while serving traffic.
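The in-place idea can be illustrated with a toy example. The sketch below is not checkpoint-engine code and does not touch GPUs; it only shows, using Python's standard library, what it means to overwrite an existing buffer chunk by chunk so that a reader holding a reference to that buffer sees the new values without being restarted. The names `update_in_place`, `live_weights`, and `engine_handle` are hypothetical.

```python
from array import array


def update_in_place(live: array, new: array, chunk_elems: int = 4) -> None:
    """Overwrite `live` with `new`, chunk by chunk, without rebinding `live`."""
    if live.typecode != new.typecode or len(live) != len(new):
        raise ValueError("new weights must match the live buffer's dtype and size")
    # Writes through a memoryview land in the existing buffer of `live`.
    with memoryview(live) as dst, memoryview(new) as src:
        for start in range(0, len(dst), chunk_elems):
            end = min(start + chunk_elems, len(dst))
            dst[start:end] = src[start:end]


# The hypothetical "inference engine" keeps its own reference to the live buffer.
live_weights = array("f", [0.0] * 10)
engine_handle = live_weights

new_weights = array("f", [float(i) for i in range(10)])
update_in_place(live_weights, new_weights)

# The engine sees the new values through the handle it already had.
print(list(engine_handle))
```

A real system does the same thing with GPU tensors and much larger chunks, and has to coordinate the copy with in-flight requests, which this sketch ignores.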

Why this matters (use cases)

- Reinforcement learning from human feedback (RLHF) and online RL: RL workflows often produce frequent checkpoint updates. Fast, in-place updates mean you can deploy policy changes quickly without a costly redeploy cycle, shortening the loop between training signals and production behavior. (Source: MarkTechPost)
- A/B testing and rapid iteration: Teams can push updated weights to a subset of instances to evaluate differences in latency, safety, or output quality without needing multiple full model deployments.
- Live safety patches / bias fixes: If a model exhibits a harmful behavior discovered in production, operators can distribute a corrected checkpoint to running servers quickly, minimizing the exposure window.
- Large distributed models (MoE and beyond): Moonshot’s own Kimi-K2 is a mixture-of-experts (MoE) design with many parameter shards; the faster you can sync shards, the more tractable large-scale experimentation and rolling updates become. (Source: GitHub)
- Cost and availability benefits: Reducing restart/reload time cuts idle GPU time and maintains steady throughput for latency-sensitive applications.

Practical adoption: what operators need to know

If you run or manage LLM inference, here are practical points to consider before integrating checkpoint-engine.

- Compatibility with your inference stack: checkpoint-engine is middleware. It integrates with popular inference engines, but you will need to map your model’s sharding/layout and memory structures to the engine’s expectations. Check the repo for supported engines and examples. (Source: GitHub)
- Operational semantics: decide whether you need strict global consistency (every instance must see the same weights immediately) or eventual/rolling updates (some instances are updated sooner than others). Broadcast offers stronger consistency; P2P supports dynamic scaling. (Source: MarkTechPost)
- Network and hardware requirements: the speed claims rely on high-throughput networking and GPU IPC. If your cluster is not similarly provisioned, your update wall-clock time will differ. Plan for sufficient interconnect bandwidth and test with representative workloads. (Source: moonshotai.github.io)
- Testing and verification: swapping weights in memory is powerful but risky. Add verification steps (checksums, small-batch inference checks) to validate that the updated model behaves as expected before routing full traffic.
- Licensing and security: the repo is open-source (MIT), but treat production integration like any critical system change: review the code, threat-model the update path, and isolate the update control plane. (Source: GitHub)
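The verification step mentioned above can be sketched as follows. This is a minimal illustration under stated assumptions, not part of checkpoint-engine: shards are modeled as plain `bytes`, and `build_manifest`, `find_bad_shards`, and `smoke_test` are hypothetical helper names. Note that a plain checksum detects corruption in transit; it does not by itself protect against a malicious sender, which needs authentication on top.

```python
import hashlib
import hmac
from typing import Callable


def shard_digest(blob: bytes) -> str:
    """SHA-256 hex digest of one weight shard."""
    return hashlib.sha256(blob).hexdigest()


def build_manifest(shards: dict[str, bytes]) -> dict[str, str]:
    """Computed on the sender side and shipped alongside the weights."""
    return {name: shard_digest(blob) for name, blob in shards.items()}


def find_bad_shards(received: dict[str, bytes], manifest: dict[str, str]) -> list[str]:
    """Return shards that are missing or whose digest does not match."""
    bad = []
    for name, expected in manifest.items():
        blob = received.get(name)
        if blob is None or not hmac.compare_digest(shard_digest(blob), expected):
            bad.append(name)
    return bad


def smoke_test(generate: Callable[[str], str], probes: dict[str, str]) -> bool:
    """Run a few fixed prompts and compare against expected outputs."""
    return all(generate(prompt) == want for prompt, want in probes.items())


sent = {"layer0.weight": b"\x01\x02\x03", "layer1.weight": b"\x04\x05\x06"}
manifest = build_manifest(sent)

received = dict(sent)
received["layer1.weight"] = b"\x04\x05\x07"  # simulate one corrupted byte
print(find_bad_shards(received, manifest))    # ['layer1.weight']

# Stand-in for a real inference call made after the swap.
print(smoke_test(lambda prompt: prompt.upper(), {"ping": "PING"}))  # True
```

In practice the smoke test would compare against tolerances or quality metrics rather than exact strings, since model outputs are rarely deterministic.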

The Kimi connection: why Moonshot built this

MoonshotAI’s Kimi model series, Kimi-K1.5 and Kimi-K2, are large, agentic models (K2 is reported as a MoE setup with ~1T parameters) where large-scale, frequent weight updates are central to training and deployment. The company’s paper and code releases earlier in 2025 emphasized long-context reasoning, Muon optimizer contributions, and tooling for large models; checkpoint-engine appears to be an infrastructure component born out of the team’s operational needs. Open-sourcing the tool helps other teams facing the same update problem, while giving Moonshot the benefit of external scrutiny and adoption. (Sources: Hugging Face, GitHub)

Benchmarks and realism check

Moonshot’s reported benchmark (updating a 1T-parameter model across thousands of GPUs in ~20 seconds) is impressive but should be viewed in context:

- The benchmark depends heavily on cluster topology (network fabric, NICs, GPU interconnects), model sharding strategy, and the update pattern used. Real-world latencies will vary. (Source: Reddit)
- Independent community reproductions and experiments are key. The GitHub repo, community posts, and discussions (Reddit, X/Threads) provide early reports and user feedback; teams should run controlled tests in staging before trusting such timelines in production. (Source: GitHub)
- Even if your environment cannot hit 20s for a 1T model, the architectural idea of in-place updates with efficient transfer mechanisms can yield order-of-magnitude improvements over naïve restart/reload approaches.
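To see why hardware matters so much, a back-of-envelope estimate helps. The sketch below computes only a lower bound on transfer time from the raw bytes each GPU must receive and its ingest bandwidth. All inputs (2 bytes per parameter, 16 GPUs per replica, the bandwidth figures) are made-up illustrative values, not Moonshot's configuration, and the model ignores protocol overhead, overlap between stages, and topology effects.

```python
def min_transfer_seconds(
    num_params: float,
    bytes_per_param: float,
    gpus_per_replica: int,
    per_gpu_gb_per_s: float,
) -> float:
    """Lower bound: bytes each GPU must receive divided by its ingest bandwidth."""
    bytes_per_gpu = num_params * bytes_per_param / gpus_per_replica
    return bytes_per_gpu / (per_gpu_gb_per_s * 1e9)


# Hypothetical inputs: 1T parameters, 2 bytes each, sharded over 16 GPUs.
for bandwidth in (12.5, 25.0, 50.0):
    seconds = min_transfer_seconds(1e12, 2, 16, bandwidth)
    print(f"{bandwidth:5.1f} GB/s per GPU -> at least {seconds:.1f} s")
```

Halving the effective per-GPU bandwidth doubles the floor on update time, which is why the same software can produce very different wall-clock numbers on different clusters.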

How to get started (links and quick steps)

MoonshotAI has published the repository and supporting material publicly. High-level steps to experiment:

- Read the repo and docs on GitHub (examples, README, API). (Source: GitHub)
- Clone and run in a test cluster: try a small model or a sharded toy model first to validate integration points. The repo emphasizes a minimal integration surface for common inference engines. (Source: GitHub)
- Test update modes: run both Broadcast and P2P modes to see which fits your operational model. Monitor latency, error rates, and any transient behavior. (Source: MarkTechPost)
- Scale up with instrumentation: add verification checks (checksums, sample inferences) and progressively increase model/shard size while measuring update time and throughput impact. (Source: apidog)

The checkpoint-engine repository is hosted under MoonshotAI’s GitHub organization and is available for cloning and experimentation. (Source: GitHub)
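Before testing on real hardware, it can help to pin down the semantic difference between the two modes. The toy simulation below is an assumption-laden illustration and not the checkpoint-engine API: `Replica`, `broadcast_update`, and `p2p_join` are hypothetical names, and weights are plain Python lists. Broadcast pushes one checkpoint to every current replica in a coordinated step; P2P lets a replica that joins later pull the current weights from an existing peer.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    name: str
    version: int = 0
    weights: list[float] = field(default_factory=list)


def broadcast_update(replicas: list[Replica], version: int, weights: list[float]) -> None:
    """All current replicas receive the same checkpoint in one coordinated step."""
    for replica in replicas:
        replica.weights = list(weights)
        replica.version = version


def p2p_join(new_replica: Replica, peers: list[Replica]) -> None:
    """A late joiner copies weights from the most up-to-date existing peer."""
    source = max(peers, key=lambda peer: peer.version)
    new_replica.weights = list(source.weights)
    new_replica.version = source.version


fleet = [Replica("a"), Replica("b")]
broadcast_update(fleet, version=1, weights=[0.1, 0.2])

late = Replica("c")  # joins after the broadcast has finished
p2p_join(late, fleet)
fleet.append(late)

print({replica.name: replica.version for replica in fleet})  # {'a': 1, 'b': 1, 'c': 1}
```

When comparing the real modes, the quantities worth recording are the update wall-clock time, the throughput dip on replicas that are already serving, and how long a newly added instance takes to become ready.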

Risks, open questions, and community concerns

Open-sourcing infrastructure that touches model internals helps transparency but raises operational and safety questions:

- Atomicity and consistency: ensuring truly atomic swaps across thousands of devices is tricky. Race conditions or partially applied updates could produce inconsistent behavior across replicas. That is why the project supports multiple update modes and why verification after deployment is essential. (Source: MarkTechPost)
- Attack surface: the update path becomes a high-value control plane. If an attacker can intercept or inject updates, the consequences are severe. Security hardening of the update transport and authorization is non-negotiable. (Source: GitHub)
- Reproducibility of speed claims: the community will and should validate Moonshot’s 20s benchmark under different infrastructure conditions. Independent results will determine how generalizable that number is. (Source: Reddit)
- Ecosystem compatibility: every inference engine has its own layout and memory model. The ease of integrating checkpoint-engine into custom stacks will influence adoption speed. (Source: GitHub)
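One generic way to reason about the atomicity concern is a stage-then-commit pattern: every replica first receives and holds the new weights, and the switch happens only if all of them succeeded. The sketch below illustrates that general pattern; it is not a description of how checkpoint-engine works, and `StagedReplica` and `two_phase_update` are hypothetical names. Holding a staged copy costs extra memory, which is the trade-off against a purely in-place overwrite.

```python
from typing import Optional


class StagedReplica:
    def __init__(self, name: str, weights: list[float], healthy: bool = True):
        self.name = name
        self.active = list(weights)
        self.staged: Optional[list[float]] = None
        self.healthy = healthy

    def stage(self, weights: list[float]) -> bool:
        """Receive new weights without serving them yet."""
        if not self.healthy:
            return False
        self.staged = list(weights)
        return True

    def commit(self) -> None:
        """Switch serving to the staged weights."""
        assert self.staged is not None
        self.active, self.staged = self.staged, None

    def abort(self) -> None:
        """Drop staged weights and keep serving the old ones."""
        self.staged = None


def two_phase_update(replicas: list[StagedReplica], weights: list[float]) -> bool:
    """Commit everywhere only if staging succeeded everywhere."""
    if not all([replica.stage(weights) for replica in replicas]):
        for replica in replicas:
            replica.abort()
        return False
    for replica in replicas:
        replica.commit()
    return True


fleet = [StagedReplica("a", [0.0]), StagedReplica("b", [0.0], healthy=False)]
print(two_phase_update(fleet, [1.0]))          # False
print([replica.active for replica in fleet])   # [[0.0], [0.0]]

fleet[1].healthy = True
print(two_phase_update(fleet, [1.0]))          # True
print([replica.active for replica in fleet])   # [[1.0], [1.0]]
```

A real fleet would also need to handle failures during the commit phase itself, which this sketch does not attempt.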

Bigger picture: what this signals about ML infrastructure

Moonshot’s release is part of a broader trend: the ML community is increasingly open-sourcing not only models but also the heavy infrastructure around training, serving, and scaling. That trend has three effects:

- Faster innovation and reproducibility: shared infrastructure tools let more teams iterate on both research and ops ideas without reinventing low-level plumbing. (Source: GitHub)
- Arms race in systems engineering: as models scale, systems optimizations (transfer protocols, memory layouts, IPC tricks) become differentiators alongside model architecture. Tools like checkpoint-engine codify those optimizations. (Source: moonshotai.github.io)
- Greater scrutiny and collaboration: open source invites audits, improvements, and new features from the community, accelerating maturation. That is particularly valuable for safety-critical mechanisms like live weight updates. (Source: GitHub)

Final verdict: who should care?

- ML infra teams operating large LLM fleets (especially with RLHF or online training): high priority to evaluate.
- Researchers experimenting with frequent fine-tuning or policy updates: checkpoint-engine can shorten iteration cycles.
- Startups and smaller teams: take the ideas (fast in-place swapping, efficient transfer) and test them at your own scale; even smaller clusters can benefit from more efficient update mechanisms.
- Security and compliance teams: the control plane for weight updates is critical and must be hardened before production use.

MoonshotAI’s checkpoint-engine is a pragmatic, systems-first contribution to the LLM ecosystem. It doesn’t change model math, but it changes how quickly and safely models can evolve in production, and that operational capability can be as important as incremental gains in model quality.

Resources & further reading

- checkpoint-engine: MoonshotAI GitHub repo (source, README, license).
- MarkTechPost: article summarizing the release and features.
- Reddit and APIdog: community posts and early benchmarks with preliminary reproductions.
- Hugging Face: MoonshotAI’s Kimi-K2 checkpoints and deployment notes.
- Reuters: background on MoonshotAI and its Kimi family (company context).
