Building AI Agents Is 5% AI and 100% Software Engineering

Introduction

Artificial Intelligence (AI) agents have become the buzzword of 2025. From autonomous research assistants to AI-driven customer support, businesses and individuals are racing to build systems that can reason, act, and interact like humans. Yet, many developers entering this space quickly realize something surprising: building AI agents is not just about integrating the latest Large Language Model (LLM) or machine learning framework.

In fact, as the tongue-in-cheek saying goes, “Building AI agents is 5% AI and 100% software engineering.” While AI provides the reasoning engine, most of the complexity lies in scaffolding, orchestration, integration, and deployment. It’s not the AI model alone that makes the system powerful—it’s the architecture, infrastructure, and software engineering discipline around it.

This article explores why AI is only a small slice of the puzzle, how software engineering dominates the process, and what practices developers need to adopt to succeed in building reliable, scalable, and trustworthy AI agents.

The Myth of “Just Add AI”

When OpenAI, Anthropic, Google, and others started releasing powerful LLMs, many believed that building an AI agent was as simple as plugging into an API.

- Want a research assistant? Just call GPT-5.
- Need a customer support bot? Pipe your queries through Claude.
- Looking for an autonomous trading system? Hook ChatGPT up to an exchange API.

But reality quickly caught up. Developers discovered that raw AI is messy:

- Models hallucinate facts.
- Context windows are limited.
- Responses are unpredictable.
- Scaling across millions of users introduces cost and latency issues.

Suddenly, the majority of the work wasn’t about AI—it was about designing robust systems to manage AI’s unpredictability.

The 5%: Where AI Actually Matters

Before we dive into the 100% software engineering part, let’s give AI its due credit. The 5% AI component usually involves:

- Choosing the Model
  - Should you use GPT-5, Claude 3.5, Gemini, or a fine-tuned LLaMA variant?
  - Trade-offs between reasoning power, latency, cost, and context size are critical.
- Prompt Engineering & Instruction Tuning
  - Crafting structured prompts.
  - Adding system rules to guide outputs.
  - Fine-tuning or few-shot prompting for specific domains.
- Evaluation of Outputs
  - Accuracy, relevance, and safety must be validated.
  - Models need guardrails to reduce hallucinations.
- Experimenting with Multi-Agent Collaboration
  - Designing AI “teams” where specialized agents collaborate.
  - Requires a balance between autonomy and human oversight.

This slice—while small—is where AI research intersects with application. But it only scratches the surface.
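To make “structured prompts,” “system rules,” and “few-shot prompting” a little more concrete, here is a minimal sketch that assembles a prompt as a list of role/content messages, the shape most chat-style LLM APIs accept. The helper name `build_messages`, the rules, and the example question/answer pair are made up for illustration. No specific vendor API is called.

```python
from typing import TypedDict


class Message(TypedDict):
    role: str     # "system", "user", or "assistant"
    content: str


def build_messages(
    rules: list[str],
    examples: list[tuple[str, str]],
    query: str,
) -> list[Message]:
    """Assemble system rules, few-shot examples, and the live query (hypothetical helper)."""
    system_text = "Follow these rules:\n" + "\n".join(f"- {r}" for r in rules)
    messages: list[Message] = [{"role": "system", "content": system_text}]
    # Each few-shot pair becomes a user turn followed by the ideal assistant turn.
    for user_text, ideal_answer in examples:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": ideal_answer})
    # The real user question always goes last.
    messages.append({"role": "user", "content": query})
    return messages


if __name__ == "__main__":
    msgs = build_messages(
        rules=["Answer in one sentence.", "Say 'I don't know' if unsure."],
        examples=[("What is the refund window?", "Refunds are accepted within 30 days.")],
        query="Do you ship internationally?",
    )
    for m in msgs:
        print(m["role"], "|", m["content"])
```

Keeping prompt assembly in a plain function like this also makes prompts versionable and testable, which matters once the engineering work described below begins.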

The 100%: Software Engineering in AI Agents

Here’s where the real grind begins. For every shiny AI demo, there’s a mountain of engineering powering it behind the scenes. Let’s break down the software engineering heavy lifting.

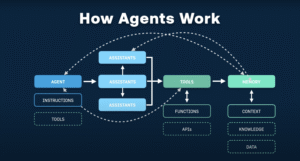

1. Architecture & Orchestration

AI agents are not monoliths. They’re ecosystems of services, APIs, and workflows.

- Orchestration frameworks like LangChain, LlamaIndex, Haystack, or custom-built middleware handle planning, tool-calling, and state management.
- Task decomposition requires careful design: when should an agent act autonomously vs. escalate to a human?
- Concurrency & parallelization are vital when agents need to run multiple reasoning paths at once.

Without a solid architecture, AI becomes a toy rather than a reliable system.
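The concurrency point can be illustrated with Python’s `asyncio`: several reasoning paths are launched at once, each bounded by a timeout, and failures are collected instead of crashing the whole step. This is a sketch under simple assumptions. `call_model` is a hypothetical stand-in for a real LLM client, simulated with `asyncio.sleep`, and the strategy names are illustrative.

```python
import asyncio


async def call_model(strategy: str, question: str) -> str:
    """Hypothetical stand-in for an LLM call; simulates network latency with a sleep."""
    await asyncio.sleep(0.01)
    if strategy == "flaky":
        raise RuntimeError("upstream error")
    return f"[{strategy}] answer to: {question}"


async def run_paths(question: str, strategies: list[str], timeout_s: float = 2.0) -> dict[str, str]:
    """Run one reasoning path per strategy concurrently and keep only the successful results."""

    async def guarded(strategy: str) -> str:
        # Bound each path so one slow call cannot stall the whole agent step.
        return await asyncio.wait_for(call_model(strategy, question), timeout=timeout_s)

    # return_exceptions=True puts exceptions into the result list instead of raising.
    results = await asyncio.gather(*(guarded(s) for s in strategies), return_exceptions=True)
    successes: dict[str, str] = {}
    for strategy, result in zip(strategies, results):
        if isinstance(result, BaseException):
            # A real system would log this and maybe retry or escalate.
            continue
        successes[strategy] = result
    return successes


if __name__ == "__main__":
    out = asyncio.run(run_paths("Summarize Q3 sales", ["direct", "step_by_step", "flaky"]))
    print(out)
```

How the surviving answers are combined (majority vote, a judge model, or simply the first success) is a separate design decision that this sketch leaves open.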

2. Data Infrastructure

Agents thrive on data. But feeding them information is more than dumping PDFs into a vector database.

- ETL pipelines to clean and structure data.
- Vector databases and similarity-search libraries (e.g., Pinecone, Weaviate, FAISS) for semantic retrieval.
- Metadata enrichment for relevance ranking.
- Caching mechanisms to reduce repetitive calls and costs.

Every successful agent is built on a backbone of structured, well-engineered data flows.
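As an illustration of what a vector store does during semantic retrieval, the sketch below ranks documents by cosine similarity after filtering on metadata. It is deliberately toy-sized. The `embed` function is a hypothetical bag-of-words hashing embedding standing in for a real embedding model, and a production system would delegate storage and search to a dedicated vector store or library such as those listed above.

```python
import hashlib
import math
from dataclasses import dataclass, field

DIM = 64  # toy embedding size


def embed(text: str) -> list[float]:
    """Hypothetical bag-of-words hashing embedding (stand-in for a real embedding model)."""
    vec = [0.0] * DIM
    for word in text.lower().split():
        bucket = int.from_bytes(hashlib.sha256(word.encode()).digest()[:4], "big") % DIM
        vec[bucket] += 1.0
    return vec


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class Doc:
    text: str
    metadata: dict[str, str] = field(default_factory=dict)
    vector: list[float] = field(init=False)

    def __post_init__(self) -> None:
        # Embed once at ingestion time, not on every query.
        self.vector = embed(self.text)


def search(docs: list[Doc], query: str, k: int = 2, **filters: str) -> list[Doc]:
    """Return the top-k docs by cosine similarity among those matching all metadata filters."""
    q = embed(query)
    candidates = [d for d in docs if all(d.metadata.get(key) == val for key, val in filters.items())]
    return sorted(candidates, key=lambda d: cosine(q, d.vector), reverse=True)[:k]


if __name__ == "__main__":
    corpus = [
        Doc("refund policy for damaged items", {"source": "policy"}),
        Doc("shipping times for international orders", {"source": "policy"}),
        Doc("refund request from customer 42", {"source": "ticket"}),
    ]
    for d in search(corpus, "refund policy", source="policy"):
        print(d.metadata["source"], "|", d.text)
```

The metadata filter is where the “metadata enrichment” work pays off: filtering by source, date, or permission before ranking often matters as much as the similarity score itself.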

3. Tool Integration & APIs

AI agents are useless without the ability to act. That means integrating them with real-world tools:

- Browsers (for research).
- Databases (for enterprise apps).
- CRM and ERP systems (for sales/operations).
- Custom APIs (for niche use cases).

Each integration requires robust error handling, retries, authentication management, and security safeguards.
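Retries are commonly implemented with exponential backoff plus random jitter, so that a transient failure in a downstream tool doesn’t immediately fail the agent and many clients don’t retry in lockstep. Below is a minimal, library-free sketch. `TransientToolError` and the flaky CRM lookup are hypothetical, and a real integration would decide carefully which errors are safe to retry (timeouts and rate limits, for example, but not authentication failures).

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientToolError(Exception):
    """Hypothetical error type for failures worth retrying (timeouts, 429s, 503s)."""


def call_with_retries(
    fn: Callable[[], T],
    max_attempts: int = 4,
    base_delay_s: float = 0.5,
    max_delay_s: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying transient failures with exponential backoff and full jitter.

    Any other exception propagates immediately without a retry.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except TransientToolError:
            if attempt == max_attempts:
                raise  # give up; the caller decides whether to fall back or escalate
            # The delay cap doubles each attempt; "full jitter" sleeps a random time below it.
            cap = min(max_delay_s, base_delay_s * 2 ** (attempt - 1))
            sleep(random.uniform(0, cap))
    raise AssertionError("unreachable")


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky_crm_lookup() -> str:
        calls["n"] += 1
        if calls["n"] < 3:
            raise TransientToolError("CRM timed out")
        return "customer record"

    # Sleeping is stubbed out so the demo runs instantly.
    print(call_with_retries(flaky_crm_lookup, sleep=lambda s: None), "after", calls["n"], "calls")
```

Injecting `sleep` as a parameter is a small design choice that keeps the retry logic unit-testable without real delays.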

4. Error Handling & Reliability

AI is probabilistic, not deterministic. That means your software must assume things will go wrong.

- What if the AI returns invalid JSON?
- What if latency spikes?
- What if the API quota is exceeded?

Robust error-handling layers, fallback strategies, and monitoring systems are what turn fragile prototypes into production-grade agents.
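For the invalid-JSON case, one common pattern is to parse and validate the model’s output, ask the model to repair it a bounded number of times, and otherwise fall back to a safe default. The sketch below uses only the standard library. `ask_model` is a hypothetical callable that takes a prompt and returns text, and the required keys and fallback action are illustrative assumptions.

```python
import json
from typing import Any, Callable, Optional

REQUIRED_KEYS = {"action", "arguments"}  # illustrative schema for a tool-call reply
FALLBACK: dict[str, Any] = {"action": "escalate_to_human", "arguments": {}}


def parse_reply(text: str) -> Optional[dict[str, Any]]:
    """Return the parsed object if it is a JSON object with the required keys, else None."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict) or not REQUIRED_KEYS.issubset(data):
        return None
    return data


def get_structured_reply(
    ask_model: Callable[[str], str], prompt: str, max_repairs: int = 2
) -> dict[str, Any]:
    """Ask once, then request up to max_repairs fixes before falling back."""
    reply = ask_model(prompt)
    for _ in range(max_repairs):
        parsed = parse_reply(reply)
        if parsed is not None:
            return parsed
        # Feed the bad output back with an explicit instruction to fix it.
        reply = ask_model(
            f"Your previous reply was not a JSON object with keys {sorted(REQUIRED_KEYS)}.\n"
            f"Reply again with only the JSON object.\nPrevious reply:\n{reply}"
        )
    parsed = parse_reply(reply)
    # Never let malformed output reach downstream tools; fall back instead.
    return parsed if parsed is not None else dict(FALLBACK)


if __name__ == "__main__":
    scripted = iter([
        "Sure! Here is the JSON: {action: search}",
        '{"action": "search", "arguments": {"query": "order 123"}}',
    ])
    print(get_structured_reply(lambda p: next(scripted), "Find order 123"))
```

Many model providers now offer structured-output or JSON modes that reduce how often this path triggers, but a validation layer like this is still worth keeping as the last line of defense.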

5. Security & Compliance

Security is often overlooked in AI hype cycles, but it’s non-negotiable in production.

- Data Privacy: Agents often process sensitive data (customer queries, financial records, health info).
- Access Control: Preventing unauthorized actions through AI.
- Adversarial Attacks: Prompt injections and jailbreaks are real threats.
- Regulatory Compliance: GDPR, HIPAA, and emerging AI-specific laws (like the EU AI Act) must be considered.

This requires cybersecurity expertise and careful design—far beyond “just AI.”
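Access control is easiest to reason about when every action the model proposes passes through a deterministic policy check that the model cannot talk its way around. This also limits the damage a successful prompt injection can do. The roles, tool names, and policy tables below are hypothetical. The key assumption is that the caller’s role comes from the application’s own session data, never from model output.

```python
from dataclasses import dataclass

# Hypothetical policy: which tools each role may invoke, and which need human approval.
ALLOWED_TOOLS: dict[str, set[str]] = {
    "support_agent": {"search_kb", "lookup_order"},
    "finance_agent": {"lookup_order", "issue_refund"},
}
REQUIRES_APPROVAL = {"issue_refund"}


@dataclass(frozen=True)
class ToolRequest:
    role: str                        # set by the application session, not by the model
    tool: str                        # tool name proposed by the model
    approved_by_human: bool = False


class PermissionDenied(Exception):
    pass


def authorize(req: ToolRequest) -> None:
    """Raise PermissionDenied unless the request passes the policy; unknown roles get nothing."""
    if req.tool not in ALLOWED_TOOLS.get(req.role, set()):
        raise PermissionDenied(f"role {req.role!r} may not call {req.tool!r}")
    if req.tool in REQUIRES_APPROVAL and not req.approved_by_human:
        raise PermissionDenied(f"{req.tool!r} requires human approval")


if __name__ == "__main__":
    # Suppose an injected instruction convinced the support agent to request a refund.
    try:
        authorize(ToolRequest(role="support_agent", tool="issue_refund"))
    except PermissionDenied as err:
        print("blocked:", err)
```

The design choice is deny-by-default: anything not explicitly allowed is refused, so a new tool stays unreachable until someone consciously grants it.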

6. Testing & Evaluation Pipelines

In classical software, unit tests ensure predictability. With AI, unpredictability is the default.

- Regression testing for prompts and responses.
- A/B testing different model strategies.
- Automated eval frameworks (e.g., using another LLM as a judge).
- Red-teaming to expose vulnerabilities.

This iterative loop is pure software engineering discipline.
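A prompt regression suite can start as a fixed set of inputs with property checks on the outputs, rerun whenever the prompt, model, or retrieval setup changes. Exact string matching is usually too brittle for LLM output, so this sketch checks properties instead (required phrases, forbidden phrases, length). `agent_answer` is a hypothetical stub for the agent under test, and the cases are illustrative.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Case:
    name: str
    prompt: str
    must_contain: list[str] = field(default_factory=list)
    must_not_contain: list[str] = field(default_factory=list)
    max_chars: int = 500


def run_suite(agent: Callable[[str], str], cases: list[Case]) -> list[str]:
    """Return human-readable failure messages; an empty list means the suite passed."""
    failures: list[str] = []
    for case in cases:
        out = agent(case.prompt)
        lowered = out.lower()  # case-insensitive phrase checks
        for phrase in case.must_contain:
            if phrase.lower() not in lowered:
                failures.append(f"{case.name}: missing {phrase!r}")
        for phrase in case.must_not_contain:
            if phrase.lower() in lowered:
                failures.append(f"{case.name}: contains forbidden {phrase!r}")
        if len(out) > case.max_chars:
            failures.append(f"{case.name}: {len(out)} chars > {case.max_chars}")
    return failures


def agent_answer(prompt: str) -> str:
    """Hypothetical agent stub; replace with a call into the real agent."""
    if "refund" in prompt.lower():
        return "Please contact support to start a refund."
    return "I don't know."


CASES = [
    Case("refund_routing", "How do I get a refund?", must_contain=["support"]),
    Case("no_fabricated_promises", "Can I get a refund after 5 years?", must_not_contain=["guaranteed"]),
    Case("unknown_topic", "What is the CEO's salary?", must_contain=["don't know"]),
]

if __name__ == "__main__":
    problems = run_suite(agent_answer, CASES)
    print("PASS" if not problems else "\n".join(problems))
```

An LLM-as-judge check could be added as one more property per case; keeping it alongside cheap deterministic checks makes failures easier to diagnose.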

7. Deployment & Scaling

Once built, agents must handle real-world workloads.

- Containerization and orchestration (Docker, Kubernetes): For portability and automated scaling.
- Load Balancing: Distributing requests across multiple models/services.
- Cost Optimization: Using smaller models when possible, caching results, throttling expensive API calls.
- Observability: Logs, traces, and metrics to understand agent behavior at scale.

Without DevOps rigor, even the smartest agent will collapse under production pressure.
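Two of the cost levers above, caching results and using smaller models when possible, can be combined in a thin routing layer, with a latency log line as a first step toward observability. In this sketch, the model identifiers, the length-based routing rule, and the `complete(model, prompt)` callable are hypothetical placeholders. Real routers often rely on a classifier or a confidence signal rather than prompt length.

```python
import hashlib
import logging
import time
from typing import Callable

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("agent.router")

SMALL_MODEL = "small-model"  # hypothetical model identifiers
LARGE_MODEL = "large-model"


class CachedRouter:
    def __init__(
        self,
        complete: Callable[[str, str], str],  # complete(model_name, prompt) -> text (hypothetical)
        ttl_s: float = 300.0,
        long_prompt_chars: int = 2000,
    ) -> None:
        self.complete = complete
        self.ttl_s = ttl_s
        self.long_prompt_chars = long_prompt_chars
        self._cache: dict[str, tuple[float, str]] = {}  # key -> (stored_at, answer)

    def pick_model(self, prompt: str) -> str:
        # Naive heuristic: only long prompts go to the expensive model.
        return LARGE_MODEL if len(prompt) > self.long_prompt_chars else SMALL_MODEL

    def ask(self, prompt: str) -> str:
        model = self.pick_model(prompt)
        key = hashlib.sha256(f"{model}\n{prompt}".encode()).hexdigest()
        now = time.monotonic()
        hit = self._cache.get(key)
        if hit is not None and now - hit[0] < self.ttl_s:
            log.info("cache hit model=%s", model)
            return hit[1]
        start = time.perf_counter()
        answer = self.complete(model, prompt)
        log.info("model=%s latency_ms=%.1f", model, (time.perf_counter() - start) * 1000)
        self._cache[key] = (now, answer)
        return answer
```

Caching like this is only safe for requests whose answers don’t depend on per-user or time-sensitive context; otherwise that context has to become part of the cache key.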

8. Human-in-the-Loop Systems

True autonomy is rare—and often undesirable. AI agents need human oversight.

- Review queues for sensitive actions (contracts, financial transfers).
- Escalation mechanisms when confidence is low.
- Feedback loops to continuously improve agent reasoning.

This is not AI—it’s workflow design, UI/UX, and software orchestration.
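Review queues and low-confidence escalation often come down to a small, explicit routing rule. This sketch assumes the agent attaches a confidence score between 0 and 1 to each proposed action. How that score is produced (model self-report, a verifier model, retrieval overlap) is left open, and the action names and threshold are hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_EXECUTE = "auto_execute"
    HUMAN_REVIEW = "human_review"


SENSITIVE_ACTIONS = {"sign_contract", "transfer_funds"}  # hypothetical action names
CONFIDENCE_THRESHOLD = 0.8                                # hypothetical cut-off


@dataclass
class ProposedAction:
    name: str
    payload: dict
    confidence: float  # assumed to be in [0, 1]


review_queue: deque[ProposedAction] = deque()


def route(action: ProposedAction) -> Route:
    """Queue sensitive or low-confidence actions for a human; let the rest run automatically."""
    if action.name in SENSITIVE_ACTIONS or action.confidence < CONFIDENCE_THRESHOLD:
        review_queue.append(action)
        return Route.HUMAN_REVIEW
    return Route.AUTO_EXECUTE


def record_feedback(action: ProposedAction, approved: bool, note: str = "") -> dict:
    """Capture a reviewer's decision so it can feed evaluation sets or prompt updates."""
    return {"action": action.name, "confidence": action.confidence, "approved": approved, "note": note}
```

For example, `route(ProposedAction("transfer_funds", {"amount": 10}, 0.99))` still lands in the review queue because the action is sensitive, regardless of how confident the model is.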

Case Studies: AI Agent Projects in the Wild

1. Customer Support Agents

Companies deploying AI-driven chat agents quickly discovered that LLMs hallucinate policies or product details. The solution?

- Retrieval-Augmented Generation (RAG).
- Extensive fallback to human operators.
- Rigorous monitoring pipelines.

2. Autonomous Research Agents (AutoGPT, BabyAGI)

Early “autonomous” projects looked exciting in demos but proved unreliable in real-world use because they lacked error handling, memory, and resource constraints. It wasn’t the AI that failed; it was the engineering scaffolding around it.

3. Enterprise AI Assistants

Microsoft Copilot, Google Workspace AI, and similar tools rely on deep integration into enterprise systems. The majority of work lies in syncing calendars, parsing emails, respecting permissions—not in generating text.

Why Software Engineering Dominates

At its core, the reason AI is “5% of the work” is that LLMs are general-purpose brains but not complete products. They need a skeleton, muscles, and a nervous system built from software engineering.

Think of AI as an engine.

- On its own, it’s powerful but unusable.
- To drive a car, you need tires, brakes, fuel systems, sensors, and safety features.

The same applies to AI agents: without engineering, the AI is just horsepower with no steering wheel.

The Future: Engineering-Centric AI Agent Development

Looking forward, several trends will continue to make AI agent building an engineering-first discipline:

- Standardized Frameworks: More robust agent orchestration tools will emerge, reducing repetitive boilerplate.
- Composable Infrastructure: Plug-and-play APIs, vector stores, and model endpoints will accelerate development.
- AI-First DevOps: Specialized pipelines for testing, monitoring, and scaling AI systems will become standard.
- Governance Layers: Policies, audit trails, and explainability systems will integrate into agent workflows.

In other words, AI agents will increasingly resemble traditional software products—just with smarter engines.

Conclusion

The hype around AI often oversimplifies the challenge: “Plug in an LLM and you’ve got an agent.” In reality, building robust AI agents is a software engineering marathon.

Yes, AI provides the reasoning core, the 5% that makes agents intelligent. But the other 100% of the work is everything else:

- Designing architectures.
- Building data pipelines.
- Integrating tools.
- Handling errors.
- Securing systems.
- Scaling infrastructure.
- Embedding human oversight.

The lesson for developers, startups, and enterprises is clear: if you want to build AI agents that actually work, think less like an AI researcher and more like a software engineer.

AI may be the spark, but software engineering is the fire that makes agents truly come alive.
