A Coding Guide to Implement Zarr for Large-Scale Data

Introduction

In the era of big data, researchers, developers, and data engineers often face a challenge: how to efficiently store, process, and analyze large datasets that cannot fit into memory or be handled by traditional file formats. This is where Zarr comes in.

Zarr is an open-source format for the storage of chunked, compressed, N-dimensional arrays. It is widely used in fields like genomics, geoscience, climate modeling, AI, and machine learning where massive datasets are the norm. Unlike monolithic formats such as HDF5, Zarr is designed with scalability, parallelism, and cloud-readiness in mind.

In this guide, we’ll explore Zarr in depth: its architecture, advantages, coding examples, and best practices to implement it for large-scale data workflows.

What is Zarr?

At its core, Zarr enables:

-

Chunked storage: Large arrays are split into smaller blocks (chunks). Each chunk can be read or written independently, enabling parallel access.

-

Compression: Each chunk can be compressed, reducing storage costs and speeding up I/O.

-

Flexibility: Works seamlessly with local storage, cloud object stores (AWS S3, GCP, Azure), and distributed file systems.

-

Compatibility: Integrates with libraries like Dask, xarray, and PyTorch.

Zarr stores both data chunks and metadata (array shape, dtype, chunk size, etc.). This separation makes it portable, scalable, and highly flexible for distributed computing.

Why Use Zarr for Large-Scale Data?

-

Scalability: Designed for terabytes or petabytes of array data.

-

Parallelism: Multiple processes or threads can read/write chunks simultaneously.

-

Cloud-native: Optimized for object storage like Amazon S3.

-

Language support: Works with Python, Java, C, and other languages.

-

Ecosystem integration: Compatible with Dask (for parallel computation) and xarray (for labeled data).

Installing Zarr

Before diving into coding, let’s install Zarr.

For cloud support:

For Dask integration:

Creating and Storing Arrays with Zarr

Let’s start with a simple example: creating a Zarr array and saving it to disk.

Key points:

-

chunks=(100, 100)means the data is stored in 100×100 tiles. -

Zarr writes metadata and chunk data separately.

-

You can access individual chunks without loading the whole dataset.

Reading Data from Zarr

Reading is just as simple:

This allows fast random access, as Zarr only loads the necessary chunks.

Using Compression in Zarr

Compression reduces storage footprint and speeds up I/O. Zarr supports compressors like Blosc, Zstd, LZMA, Gzip.

Here, Blosc with zstd compression ensures both performance and storage efficiency.

Hierarchical Storage: Zarr Groups

Zarr allows grouping arrays (like folders). This is useful for datasets with multiple variables.

This hierarchical approach mimics NetCDF/HDF5 but remains cloud-native.

Using Zarr with Dask for Parallel Processing

Zarr pairs naturally with Dask, enabling large-scale computations.

Here, Dask breaks the computation into tasks, processes chunks in parallel, and uses Zarr as efficient storage.

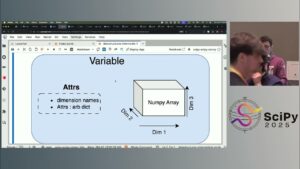

Zarr with Xarray

For labeled multi-dimensional datasets (climate, satellite, genomics), xarray + Zarr is powerful.

Xarray + Zarr is widely used in climate science (CMIP6), NASA Earth data, and genomics.

Storing Zarr Data in the Cloud

Zarr works seamlessly with object storage. Example with Amazon S3:

This makes Zarr cloud-ready, enabling shared access for collaborative research.

Scaling to Petabyte Data

To handle extremely large datasets:

-

Use chunking wisely: Align chunk size with expected access patterns.

-

Compression balance: Higher compression reduces storage but increases CPU cost.

-

Parallel I/O: Combine with Dask or Spark for distributed reads/writes.

-

Cloud storage: Store Zarr arrays on S3, GCS, or Azure Blob for scalability.

Best Practices for Zarr Implementation

-

Chunk size optimization: Aim for 1–10 MB per chunk for balance.

-

Use groups for organization: Store related variables under one dataset.

-

Leverage compression: Choose Blosc/Zstd for speed and efficiency.

-

Integrate with Dask/Xarray: For scalable analysis.

-

Use cloud storage for sharing: Enables global collaboration.

Real-World Use Cases

-

Climate modeling (CMIP6): Terabytes of simulation data stored in Zarr on the cloud.

-

Genomics: Human genome sequencing data stored as compressed arrays.

-

Astronomy: Telescope imagery and sky surveys stored in distributed Zarr datasets.

-

Machine Learning: Training data pipelines using Zarr for efficient loading.

Challenges and Limitations

-

Evolving standard: Zarr v3 is still being adopted.

-

Metadata overhead: For extremely small chunks, metadata can become excessive.

-

Interoperability: While widely supported, not all legacy tools support Zarr.

-

Learning curve: Users familiar with HDF5/NetCDF may need adjustment.

Future of Zarr

Zarr is growing rapidly, with adoption in scientific computing, AI pipelines, and data engineering. The upcoming Zarr v3 standard will improve:

-

Cross-language interoperability

-

Stronger metadata management

-

Improved cloud-native capabilities

It is on track to become a de-facto standard for large-scale array data.

Conclusion

Zarr provides an elegant solution to one of the most pressing challenges in modern data science: handling large, multidimensional datasets efficiently across local, distributed, and cloud environments.

By enabling chunking, compression, parallelism, and cloud-native storage, Zarr empowers researchers, engineers, and developers to work with terabyte-scale datasets without being bottlenecked by memory or I/O limitations.

Whether you’re building a machine learning data pipeline, processing satellite imagery, analyzing climate data, or handling genomics datasets, Zarr should be part of your toolkit.

For quick updates, follow our whatsapp –

https://whatsapp.com/channel/0029VbAabEC11ulGy0ZwRi3j

https://bitsofall.com/https-yourblog-com-moonshotai-released-checkpoint-engine/

https://bitsofall.com/https-yourdomain-com-google-ai-ships-timesfm-2-5/

OpenAI Introduces GPT-5-Codex: Redefining the Future of AI-Powered Coding