How to Design a Persistent Memory and Personalized Agentic AI System with Decay and Self-Evaluation

Introduction

The evolution of artificial intelligence is moving rapidly from static, prompt-driven systems toward autonomous, memory-embedded, agentic architectures. These new systems are not just tools that respond — they learn, remember, forget, and self-improve.

But for an AI agent to behave in a human-like and contextually adaptive way, it must possess persistent memory, the ability to forget (decay) irrelevant data, and the capacity for self-evaluation — assessing its own decisions and adjusting its behavior accordingly.

In this article, we’ll dive deep into how to design a persistent memory and personalized agentic AI system with decay and self-evaluation, covering the architecture, data flow, algorithmic components, and real-world implementation approaches.

1. Understanding the Core Concepts

Before we get into design details, let’s define the three key building blocks of our system.

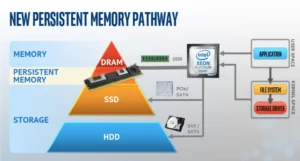

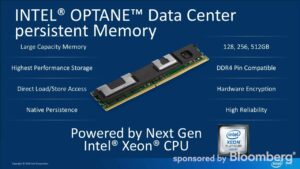

1.1 Persistent Memory

Persistent memory allows an AI agent to retain information across sessions. Unlike ephemeral context windows (like those in traditional LLMs), persistent memory stores data long-term — enabling agents to “remember” users, tasks, and emotional tones over time.

Persistent memory systems usually combine:

- Short-term memory (STM): Active task context.
- Long-term memory (LTM): Retained knowledge or experience.
- Episodic memory: Contextual and temporal experiences (like “what happened when”).
- Semantic memory: General knowledge derived from patterns over multiple experiences.
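A minimal sketch of how these memory types might be held in code. It assumes a bounded deque for short-term memory and in-process lists for the long-term stores; a real system would back these with a database. The `MemoryStore` class and its methods are hypothetical.

```python
from collections import deque


class MemoryStore:
    """Hypothetical in-process holder for the memory types listed above."""

    def __init__(self, stm_capacity: int = 5):
        # STM: bounded buffer of active context; the oldest item drops out first.
        self.short_term = deque(maxlen=stm_capacity)
        # LTM, split into episodic ("what happened when") and semantic (general facts).
        self.episodic: list[tuple[float, str]] = []
        self.semantic: list[str] = []

    def observe(self, timestamp: float, event: str) -> None:
        # Each event enters the active context and is also logged as an episode.
        self.short_term.append(event)
        self.episodic.append((timestamp, event))

    def consolidate(self, fact: str) -> None:
        # Semantic memory stores generalizations drawn from many episodes;
        # here the caller supplies the distilled fact directly.
        if fact not in self.semantic:
            self.semantic.append(fact)
```

With `stm_capacity=2`, three observations leave only the last two in `short_term`, while all three stay in `episodic`.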

1.2 Memory Decay

Just as humans forget unimportant details to focus on what matters, AI agents must manage information overload.

Memory decay introduces a time-based or relevance-based forgetting mechanism that prunes data, optimizing storage and cognitive efficiency.

Common decay mechanisms include:

- Time-based decay: Older entries gradually lose weight.
- Relevance-based decay: Information rarely accessed or rated as low-importance fades.
- Adaptive decay: Importance is determined dynamically based on user feedback or self-evaluation.

1.3 Self-Evaluation

A truly agentic AI must be able to evaluate its own performance — identifying errors, biases, or inefficiencies.

This mirrors human metacognition, the process of “thinking about thinking.”

Self-evaluation may include:

- Task success metrics (Did I complete the user’s goal?)
- Accuracy and truthfulness evaluation
- Behavioral consistency scoring (Am I aligned with user preferences?)
- Emotional alignment assessment (Was my tone appropriate?)

2. Designing the System Architecture

Building a persistent, self-evaluating AI agent involves a layered architecture. Let’s break it down.

2.1 High-Level Architecture Overview

3. Memory System Design

The memory subsystem is the heart of the agent. It determines what is remembered, for how long, and why.

3.1 Data Representation

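Each memory entry (or “chunk”) can be stored as a structured object that holds the memory’s content, its embedding, timestamps for creation and last access, and an importance score.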
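As one possible shape, here is a hedged sketch of such an entry as a Python dataclass. The field names (`memory_type`, `access_count`, and so on) are hypothetical, chosen to line up with the factors discussed in this section rather than taken from any particular library.

```python
import time
from dataclasses import dataclass, field


@dataclass
class MemoryEntry:
    # Hypothetical schema; adapt the fields to your own store.
    memory_id: str
    content: str                  # raw text of the memory
    embedding: list[float]        # vector used for similarity search
    memory_type: str              # e.g. "short_term", "episodic", "semantic"
    importance: float = 0.5       # initial importance I_0, in [0, 1]
    created_at: float = field(default_factory=time.time)
    last_accessed: float = field(default_factory=time.time)
    access_count: int = 0         # frequency of recall

    def touch(self) -> None:
        # Record a recall: refresh recency and increment frequency.
        self.last_accessed = time.time()
        self.access_count += 1
```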

The importance score is computed based on:

- User feedback (explicit or implicit)
- Task relevance
- Frequency of recall
- Temporal proximity
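The four factors can be folded into one number in many ways. The sketch below assumes a weighted sum of normalized signals; the weights, the normalizations, and the function name `importance_score` are hypothetical starting points to tune, not a standard formula.

```python
def importance_score(
    feedback: float,        # user feedback in [-1, 1] (explicit rating or implicit signal)
    task_relevance: float,  # similarity to the current task, in [0, 1]
    recall_count: int,      # how many times the memory has been retrieved
    hours_since: float,     # temporal proximity: hours since the event
    weights=(0.4, 0.3, 0.2, 0.1),  # hypothetical weights; tune for your domain
) -> float:
    """Combine the four factors into a single score clamped to [0, 1]."""
    w_fb, w_rel, w_freq, w_time = weights
    fb = (feedback + 1) / 2                 # map [-1, 1] onto [0, 1]
    freq = 1 - 1 / (1 + recall_count)       # saturating: 0, 0.5, 0.67, ...
    recency = 1 / (1 + hours_since / 24)    # 1.0 now, 0.5 after one day
    score = w_fb * fb + w_rel * task_relevance + w_freq * freq + w_time * recency
    return max(0.0, min(1.0, score))
```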

3.2 Memory Retrieval

When the agent is prompted, it embeds the query and runs a vector-similarity search over stored embeddings to retrieve relevant memories. Different stores serve different needs, for example:

- Recent tasks → Short-term retrieval
- Preferences or facts → Long-term retrieval
- Temporal context → Episodic retrieval

This dynamic recall system ensures personalization and context continuity.
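In production the vector database performs this search, but the ranking it does can be sketched in a few lines of pure Python. This assumes embeddings are already computed and that each memory is a hypothetical `(text, vector, memory_type)` tuple; routing to a particular store is modeled as a simple filter on `memory_type`.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec, memories, memory_type=None, k=3):
    """Return the texts of the k memories most similar to query_vec.

    memories: iterable of (text, vector, memory_type) tuples (hypothetical format).
    memory_type: optionally restrict the search to one store, e.g. "long_term".
    """
    candidates = [m for m in memories if memory_type is None or m[2] == memory_type]
    ranked = sorted(candidates, key=lambda m: cosine(query_vec, m[1]), reverse=True)
    return [text for text, _, _ in ranked[:k]]
```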

3.3 Memory Decay Implementation

To simulate natural forgetting, apply a decay function:

$$\text{effective\_importance} = I_0 \times e^{-\lambda t}$$

Where:

- $I_0$: Initial importance
- $\lambda$: Decay constant (tuned per category)
- $t$: Time since last access

If the effective importance drops below a threshold, the memory is archived or deleted.

You can also implement soft deletion — transferring decayed entries to a low-priority “archive” database.
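A minimal sketch of the decay sweep, assuming time is measured in days and each memory is a dict with hypothetical `importance`, `category`, and `last_access` keys. The per-category rates in `DECAY_RATES` and the `ARCHIVE_THRESHOLD` value are illustrative, not recommended settings. Entries that fall below the threshold are returned separately so they can be moved to an archive (soft deletion) rather than destroyed.

```python
import math

ARCHIVE_THRESHOLD = 0.1                              # hypothetical cutoff
DECAY_RATES = {"episodic": 0.10, "semantic": 0.01}   # hypothetical λ per day
DEFAULT_RATE = 0.05                                  # used for unknown categories


def effective_importance(i0: float, lam: float, days_since_access: float) -> float:
    # effective_importance = I_0 * exp(-λ t)
    return i0 * math.exp(-lam * days_since_access)


def sweep(memories: list[dict], now_days: float) -> tuple[list[dict], list[dict]]:
    """Split memories into (kept, archived) by their decayed importance."""
    kept, archived = [], []
    for m in memories:
        lam = DECAY_RATES.get(m["category"], DEFAULT_RATE)
        score = effective_importance(m["importance"], lam, now_days - m["last_access"])
        (kept if score >= ARCHIVE_THRESHOLD else archived).append(m)
    return kept, archived
```

With these illustrative rates, an episodic memory that starts at $I_0 = 0.8$ decays to about $0.8 \times e^{-3} \approx 0.04$ after 30 untouched days and is archived, while a semantic memory with the same starting importance is still at about $0.8 \times e^{-0.3} \approx 0.59$. The half-life $t_{1/2} = \ln 2 / \lambda$ is a convenient way to choose $\lambda$: $\lambda = 0.1$ per day halves importance roughly every 6.9 days.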

4. Building Personalization into the Agent

Personalization transforms an AI agent from a static responder to a relationship-building assistant.

Here’s how to achieve this:

4.1 User Profile Layer

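Each user gets a profile memory that records stable preferences (tone, level of technical depth, preferred response format) and long-term goals.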

This profile helps the agent adapt tone, depth, and recommendations dynamically.
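A hedged sketch of such a profile as a dataclass; every field name is hypothetical. Interests are updated with an exponential moving average, an assumption chosen here so that a single interaction nudges a preference without overwriting it.

```python
from dataclasses import dataclass, field


@dataclass
class UserProfile:
    # Hypothetical profile schema; all field names are illustrative.
    user_id: str
    tone: str = "neutral"            # e.g. "casual", "formal"
    depth: str = "intermediate"      # "beginner" | "intermediate" | "technical"
    response_format: str = "prose"   # "prose" | "bullets"
    goals: list[str] = field(default_factory=list)
    interests: dict[str, float] = field(default_factory=dict)  # topic -> affinity

    def update_interest(self, topic: str, signal: float, rate: float = 0.2) -> None:
        # Exponential moving average: recent signals shift the affinity gradually,
        # so one interaction cannot overwrite a long-standing preference.
        old = self.interests.get(topic, 0.0)
        self.interests[topic] = (1 - rate) * old + rate * signal
```

Starting from no recorded interest, two positive signals (`signal=1.0`) with `rate=0.2` move the affinity to 0.2 and then 0.36.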

4.2 Contextual Adaptation

When interacting, the agent references the profile memory to modify:

- Tone & vocabulary
- Information depth
- Task prioritization
- Response format

For example, if a user prefers technical depth, the agent automatically provides code examples and references.
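One simple way to apply the profile, sketched under the assumption that preferences are injected as instructions into the LLM prompt. The hypothetical `style_instructions` function maps stored tone, depth, and format preferences (hypothetical dict keys) to plain-language directives, including the technical-depth case described above.

```python
def style_instructions(profile: dict) -> str:
    """Turn stored preferences into directives prepended to the LLM prompt.

    The profile keys "tone", "depth", and "format" are hypothetical.
    """
    parts = []
    tone = profile.get("tone")
    if tone:
        parts.append(f"Use a {tone} tone.")
    if profile.get("depth") == "technical":
        parts.append("Go into technical depth and include code examples and references.")
    elif profile.get("depth") == "beginner":
        parts.append("Avoid jargon and explain terms as you introduce them.")
    if profile.get("format") == "bullets":
        parts.append("Format the answer as a bulleted list.")
    return " ".join(parts)
```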

4.3 Cross-Session Consistency

Persistent memory ensures that:

- Preferences remain stable.
- Long-term goals are tracked.
- Conversations feel “alive” and evolving.
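Cross-session consistency depends on writing the profile somewhere durable and reading it back at the start of each session. The sketch below uses Python's built-in `sqlite3` module to stay self-contained; the components table in section 7 suggests PostgreSQL for this role, and the table and function names here are hypothetical.

```python
import json
import sqlite3


def open_store(path: str = "agent_memory.db") -> sqlite3.Connection:
    """Open (or create) the profile store; pass ":memory:" for a throwaway DB."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS profiles (user_id TEXT PRIMARY KEY, data TEXT NOT NULL)"
    )
    return conn


def save_profile(conn: sqlite3.Connection, user_id: str, profile: dict) -> None:
    # One row per user; INSERT OR REPLACE overwrites the previous version.
    conn.execute(
        "INSERT OR REPLACE INTO profiles (user_id, data) VALUES (?, ?)",
        (user_id, json.dumps(profile)),
    )
    conn.commit()


def load_profile(conn: sqlite3.Connection, user_id: str) -> dict:
    # Returns an empty dict for a user seen for the first time.
    row = conn.execute(
        "SELECT data FROM profiles WHERE user_id = ?", (user_id,)
    ).fetchone()
    return json.loads(row[0]) if row else {}
```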

5. Implementing Self-Evaluation

A robust agentic AI doesn’t just respond — it reflects.

Here’s how to integrate self-evaluation loops.

5.1 Reflection Triggers

Self-evaluation can be triggered:

- Periodically (every few interactions)
- After critical tasks
- When feedback is received
- When errors/conflicts are detected
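The four triggers reduce to a single predicate. In this minimal sketch, the hypothetical `every_n` parameter sets the period for routine reflection, and the boolean flags are assumed to be set by the surrounding agent loop.

```python
def should_reflect(turn: int, *, critical_task: bool = False,
                   feedback_received: bool = False, error_detected: bool = False,
                   every_n: int = 5) -> bool:
    """Return True when any of the four reflection triggers fires."""
    periodic = turn > 0 and turn % every_n == 0   # every few interactions
    return periodic or critical_task or feedback_received or error_detected
```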

5.2 Self-Evaluation Metrics

| Metric | Description | Example |
|---|---|---|
| Goal Completion | Did the output satisfy the user’s goal? | Task = “Summarize paper”, check completion confidence |
| Accuracy | Are facts correct and verifiable? | Use fact-checking via retrieval |
| Tone Match | Is the tone consistent with user preference? | NLP-based tone analysis |
| Self-Consistency | Are answers consistent across sessions? | Vector similarity between outputs |
| Confidence Scoring | How sure is the model of its response? | Derived from internal logits or evaluation model |
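A hedged sketch of how the per-metric scores might be combined, assuming each has already been normalized to [0, 1] by its own evaluator. The weights in `METRIC_WEIGHTS` and the `pass_mark` cutoff are hypothetical. Self-consistency is computed as the cosine similarity between embeddings of two answers to the same question, following the table's suggestion.

```python
import math

# Hypothetical weights (they sum to 1); each metric score is assumed to be in [0, 1].
METRIC_WEIGHTS = {
    "goal_completion": 0.3,
    "accuracy": 0.3,
    "tone_match": 0.15,
    "self_consistency": 0.15,
    "confidence": 0.1,
}


def self_consistency(vec_now: list[float], vec_before: list[float]) -> float:
    # Cosine similarity between embeddings of a current and an earlier answer
    # to the same question, clipped at 0 so the score stays in [0, 1].
    dot = sum(a * b for a, b in zip(vec_now, vec_before))
    norm = math.sqrt(sum(a * a for a in vec_now)) * math.sqrt(sum(b * b for b in vec_before))
    return max(0.0, dot / norm) if norm else 0.0


def evaluate(scores: dict, pass_mark: float = 0.7) -> dict:
    """Weighted overall score plus the list of metrics that fell below pass_mark."""
    overall = sum(METRIC_WEIGHTS[m] * scores[m] for m in METRIC_WEIGHTS)
    weak = [m for m in METRIC_WEIGHTS if scores[m] < pass_mark]
    return {"overall": overall, "weak_metrics": weak}
```

With full marks everywhere except a tone score of 0.5, the overall score is 0.925 and `weak_metrics` is `["tone_match"]`, which tells the reflection step where to look.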

5.3 Reflection and Correction

After evaluation, the system can perform:

- Self-reflection summarization: The agent summarizes what went well or poorly.
- Behavioral adjustment: Tuning importance weights or preference profiles.
- Reinforcement through meta-learning: Updating internal reward models.
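A minimal sketch of one reflection pass covering the first two steps (summarization and behavioral adjustment); updating a reward model is out of scope here. It assumes an evaluation dict with hypothetical `overall` and `weak_metrics` keys, memories as dicts with an `importance` key, and an illustrative 0.7 cutoff between a good and a poor outcome.

```python
def reflect_and_adjust(evaluation: dict, profile: dict, used_memories: list[dict],
                       step: float = 0.1) -> str:
    """One reflection pass (hypothetical structure); returns a short summary.

    evaluation:    {"overall": float, "weak_metrics": [...]} from an evaluator.
    profile:       user preference dict, adjusted in place.
    used_memories: memories retrieved for the answer, each with an "importance" key.
    """
    # Behavioral adjustment: nudge the importance of the memories behind this answer.
    delta = step if evaluation["overall"] >= 0.7 else -step
    for m in used_memories:
        m["importance"] = min(1.0, max(0.0, m["importance"] + delta))

    # If tone was a weak spot, flag the stored tone preference for re-confirmation.
    if "tone_match" in evaluation["weak_metrics"]:
        profile["confirm_tone"] = True

    # Self-reflection summary, which the agent could store as an episodic memory.
    verdict = "went well" if delta > 0 else "needs improvement"
    weak = ", ".join(evaluation["weak_metrics"]) or "none"
    return f"Response {verdict} (score {evaluation['overall']:.2f}); weak areas: {weak}."
```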


6. Integrating Decay and Self-Evaluation Loops

The decay system and self-evaluation loop should interact dynamically.

6.1 Adaptive Decay Through Self-Evaluation

Self-evaluation can adjust decay parameters.

For example:

- If a memory is repeatedly evaluated as useful, decay rate slows.
- If it’s inaccurate or outdated, it decays faster.

This enables selective forgetting and maintains a balance between stability and adaptability.
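A sketch of that feedback path for one memory's decay constant $\lambda$ from the formula in section 3.3. The verdict labels, the halving/doubling `factor`, and the clamping bounds are hypothetical choices. Halving $\lambda$ doubles the memory's half-life, and repeated "useful" verdicts compound until the lower bound is reached.

```python
def adapt_decay_rate(lam: float, verdict: str, factor: float = 0.5,
                     lam_min: float = 0.001, lam_max: float = 1.0) -> float:
    """Return an updated decay constant for a single memory.

    "useful" slows decay; "inaccurate" or "outdated" speeds it up;
    any other verdict leaves λ unchanged. All thresholds are hypothetical.
    """
    if verdict == "useful":
        lam *= factor      # smaller λ: the memory fades more slowly
    elif verdict in ("inaccurate", "outdated"):
        lam /= factor      # larger λ: the memory fades faster
    return min(lam_max, max(lam_min, lam))
```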

6.2 The Cognitive Cycle

- Perception: Receive new input.
- Memory Retrieval: Recall relevant knowledge.
- Reasoning: Generate output.
- Evaluation: Assess performance.
- Memory Update: Store insights and apply decay.
- Optimization: Adjust parameters for next iteration.

This cyclical process mirrors human learning and introspection.
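The six stages can be wired into one function. In this sketch, `generate` and `evaluate` are hypothetical stand-ins for LLM calls, keyword overlap replaces vector search, a fixed `capacity` replaces exponential decay, and the optimization step simply widens retrieval (`top_k`) when the evaluation score misses a target. All of these are simplifying assumptions meant to show the control flow, not a production design.

```python
from typing import Callable


def cognitive_cycle(user_input: str, memory: list[str],
                    generate: Callable[[str, list[str]], str],
                    evaluate: Callable[[str, str], float],
                    state: dict) -> str:
    """Run one perceive -> retrieve -> reason -> evaluate -> update -> optimize pass.

    state holds tunable parameters "top_k", "capacity", and "target" (hypothetical).
    """
    # Perception: receive and normalize the new input.
    query = user_input.strip()

    # Memory retrieval: keyword overlap stands in for vector similarity.
    words = set(query.lower().split())
    recalled = [m for m in memory if words & set(m.lower().split())][: state["top_k"]]

    # Reasoning: generate an answer from the input plus recalled context.
    answer = generate(query, recalled)

    # Evaluation: score the answer in [0, 1].
    score = evaluate(query, answer)

    # Memory update: store the exchange, then drop the oldest entries
    # beyond capacity (a crude stand-in for decay).
    memory.append(f"{query} -> {answer}")
    del memory[: max(0, len(memory) - state["capacity"])]

    # Optimization: if quality missed the target, retrieve more context next time.
    if score < state["target"]:
        state["top_k"] = min(state["top_k"] + 1, 10)

    return answer
```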

7. Implementation Technologies

To design a system like this, you can combine modern AI tools and frameworks.

7.1 Core Components

| Component | Tool/Framework | Purpose |
|---|---|---|
| Embeddings | OpenAI, SentenceTransformers | Memory vectorization |
| Vector DB | Pinecone, Weaviate, Chroma | Persistent storage |
| LLM Backbone | GPT-4o, Claude, Mistral, Llama 3 | Reasoning and generation |
| Orchestration | LangChain, LlamaIndex | Memory retrieval + planning |
| Feedback Loop | EvalAgent, trlx, custom evaluators | Self-evaluation logic |
| Database | PostgreSQL, Redis | Metadata + user profiles |

7.2 Example Stack

- Frontend: Chat interface (React + WebSocket)
- Backend: Python (FastAPI)
- Memory System: Chroma DB + PostgreSQL hybrid
- Evaluation Engine: LLM-based reflection model
- Orchestration: LangGraph or LangChain Agent Executor

8. Ethical and Design Considerations

8.1 Privacy and Transparency

Persistent memory must comply with data privacy principles:

- Explicit consent for data retention.
- Clear deletion mechanisms.
- Transparent feedback loops (e.g., “This information will help me improve future responses.”)

8.2 Avoiding Bias in Self-Evaluation

AI reflection should not reinforce its own biases.

Use external evaluators or ensemble models for self-evaluation to maintain objectivity.
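A minimal sketch of the ensemble idea: score the answer with several independent evaluators and take the median, so that one biased judge cannot dominate the result. The evaluators are assumed to be callables returning a score in [0, 1], and the `max_spread` disagreement threshold is hypothetical.

```python
import statistics
from typing import Callable

Evaluator = Callable[[str, str], float]


def ensemble_score(question: str, answer: str, evaluators: list[Evaluator],
                   max_spread: float = 0.3) -> tuple[float, bool]:
    """Median score across evaluators, plus a flag when they disagree widely.

    The median limits the influence of any single biased judge; a spread
    (max - min) above max_spread is flagged, e.g. for human review.
    """
    scores = [e(question, answer) for e in evaluators]
    spread = max(scores) - min(scores)
    return statistics.median(scores), spread > max_spread
```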

8.3 Balancing Forgetting and Remembering

Too much decay makes the agent forget useful details; too little makes it bloated and inefficient.

Design adaptive thresholds and allow user control (“Forget my preferences”).
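User control can be as direct as a delete call. A hedged sketch, assuming memories live in a SQL table named `memories` with hypothetical `user_id` and `category` columns, so that a request like “Forget my preferences” maps to `forget(conn, user_id, "preference")`. In this sketch an explicit request removes rows outright rather than archiving them; copies held in a vector database would need the same treatment.

```python
import sqlite3
from typing import Optional


def forget(conn: sqlite3.Connection, user_id: str, category: Optional[str] = None) -> int:
    """Hard-delete a user's memories (all, or one category); return rows removed."""
    if category is None:
        cur = conn.execute("DELETE FROM memories WHERE user_id = ?", (user_id,))
    else:
        cur = conn.execute(
            "DELETE FROM memories WHERE user_id = ? AND category = ?",
            (user_id, category),
        )
    conn.commit()
    return cur.rowcount
```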

9. Future Directions

Designing persistent, self-evaluating AI systems opens the door to agents that monitor, repair, and coordinate with one another over long time spans.

Here’s what’s next:

- Meta-agents: Agents that monitor other agents’ performance.
- Collective memory systems: Shared, multi-agent memory architectures.
- Self-repairing cognitive networks: Agents that debug their reasoning pipelines.
- Emotional simulation layers: Evaluating tone and empathy using affective computing.

The future of AI is not just about intelligence — it’s about self-awareness, context, and memory.

Conclusion

Designing a persistent memory and personalized agentic AI system with decay and self-evaluation represents a major leap toward autonomous, adaptive, and emotionally intelligent artificial beings.

By combining memory persistence, selective forgetting, and reflective evaluation, developers can build agents that evolve like humans — learning continuously, refining their behavior, and maintaining meaningful relationships with users over time.

In essence, this design philosophy turns AI from a reactive tool into a proactive digital companion — capable of growth, introspection, and self-correction.
