Comparing the Top 7 Large Language Models (LLMs/Systems) for Coding in 2025

TL;DR: If you want the most capable, general-purpose coding brain today, pick OpenAI GPT-5. If you need long-context repo work and agentic code fixes with strong web integration, Google Gemini 2.5 Pro is excellent. For thoughtful refactors and “reads your intent” style edits, Anthropic Claude 4.x (and 3.5 Sonnet) remains a favorite. If you prefer open-weight or self-hosted, Meta Llama 4 Maverick, Mistral Codestral 25.08, DeepSeek-V3.1 / DeepSeek-Coder, and Qwen2.5-Coder are the practical choices—with different trade-offs between cost, speed, and autonomy. Ollama+6OpenAI+6blog.google+6

Why this comparison matters in 2025

Code-capable LLMs have moved beyond autocomplete. The industry tests real software engineering ability with agentic benchmarks like SWE-bench Verified (can a model read an issue, edit the codebase, and pass tests?). We also care about latency, cost, context length, tool use (running code, package queries, filesystem), and ecosystem fit (IDEs, CI, security). swebench.com

Below, we review seven leaders you can actually use in production, summarize strengths/weaknesses, and suggest best-fit scenarios.

Selection criteria

-

Real engineering competence on agentic and synthesis tasks (e.g., SWE-bench, HumanEval, MBPP, internal agent evals). swebench.com

-

Context handling for monorepos and whole-project transformations.

-

Latency & cost for interactive editing and code review.

-

Tooling ecosystem (IDE plugins, DevEx, observability).

-

Deployment options (cloud, VPC, on-prem; licensing).

-

Safety/IP posture (code provenance controls, privacy). GitHub

The 7 models/systems

1) OpenAI GPT-5 (and the o3 lineage)

What it is: OpenAI’s flagship 2025 system. Positioned as the strongest coding model OpenAI has released, and widely integrated across editors and enterprise tools. OpenAI+1

Why developers like it

-

State-of-the-art coding in generation and repair; improved reasoning over GPT-4o/o3. OpenAI

-

Deep ecosystem presence: shows up in Cursor, GitHub Copilot, Azure AI, and more. Cursor+2The Verge+2

-

Strong few-shot and chain-of-thought behaviors that translate into better multi-file refactors and test scaffolding.

Notable signals

-

OpenAI’s developer notes emphasize GPT-5 outperforming o3 on coding benchmarks and agentic products. OpenAI

-

Cursor adopted GPT-5 day-one; engineers report large real-world productivity gains. Cursor+1

Best for: Teams wanting the highest raw capability, strong agent behaviors, and first-class IDE integrations.

2) Google Gemini 2.5 Pro

What it is: Google’s “thinking” model with long-context repo analysis and strong agentic coding chops. Google highlights substantial jumps on SWE-bench Verified and complex app creation. blog.google+1

Why developers like it

-

Excellent at repo-level tasks and code transformation; long context is handy for entire services or notebooks. blog.google

-

Native integration with Google developer tooling and the Gemini API; works well for multi-modal code tasks (UI screenshots to code, etc.). Google AI for Developers

Notable signals

-

Google reports 63.8% on SWE-bench Verified (with a custom agent setup). blog.google

Best for: Web/app builders in Google’s ecosystem who need long-context reasoning and reproducible agent pipelines.

3) Anthropic Claude 4.x and Claude 3.5 Sonnet

What it is: Anthropic’s thoughtful, cautious coding assistant line. Claude 3.5 Sonnet was a 2024 favorite; the Claude 4.x family continues the push in 2025. Anthropic+1

Why developers like it

-

Agentic coding: in Anthropic’s own internal evaluation, 3.5 Sonnet solved 64% of repo tasks, surpassing Claude 3 Opus; follow-ups keep improving. Anthropic

-

Known for clean diffs, safe refactors, and explanations that make code reviews easier.

Notable signals

-

Many comparisons early in 2025 put Claude neck-and-neck or ahead on classic coding sets; Sonnet remained a reliable “workhorse” until newer 4.x drops. Analytics Vidhya+1

Best for: Teams prioritizing stability, readable patches, and careful reasoning in PRs and design docs.

4) Meta Llama 4 Maverick / Scout (open-weight)

What it is: Meta’s 2025 open-weight family (MoE architecture) with improved multimodal and reasoning performance. Strong choice if you need self-hosting or VPC control. The Verge+2Barron’s+2

Why developers like it

-

Cost/performance balance; good for private deployments and internal codebases. The Verge

-

Healthy open ecosystem; integrates well with OSS agents and tooling.

Notable signals

-

Press and analyses highlight better coding/reasoning vs earlier Llama series, with Meta positioning Maverick as competitive with proprietary leaders. The Verge+1

Best for: Orgs that need open-weight control, on-prem or air-gapped deployments, and the ability to fine-tune with internal code.

5) Mistral Codestral 25.08 (and 25.01)

What it is: Mistral’s dedicated coding model and full coding stack (Devstral, IDE extension, embeddings), tuned for low-latency completions, FIM, and test generation. mistral.ai+2docs.mistral.ai+2

Why developers like it

-

Fast & inexpensive for day-to-day editing, autocomplete, and unit-test suggestions—great for inner-loop productivity. docs.mistral.ai

-

Enterprise-friendly rollout with IDE extensions and components for search and agent workflows. mistral.ai

Notable signals

-

January and July 2025 updates improved tokenizer/latency and rounded out an end-to-end coding stack. mistral.ai+1

Best for: Teams optimizing speed & cost in IDE loops, or assembling a modular, Mistral-based coding platform.

6) DeepSeek-V3.1 / DeepSeek-Coder family (open)

What it is: A high-capacity MoE line from DeepSeek; the Coder variants and V3.1 show notable jumps on agentic coding benchmarks with open weights. Hugging Face+1

Why developers like it

-

Strong open performance on SWE-bench Verified in agent mode; competitive with proprietary models for structured bug-fixing. Hugging Face

-

Open deployment paths with reasonable hardware footprints compared to very large closed models.

Notable signals

-

Reported 66% on SWE-bench Verified (agent mode) for DeepSeek-V3.1; earlier V2/V3 trailed but have improved rapidly. Hugging Face

Best for: Builders who want OSS transparency and credible agentic coding skills, especially in research or low-cost environments.

7) Alibaba Qwen2.5-Coder

What it is: An open-weight coder line (e.g., 32B) that performs well across many languages and repair tasks, with active OSS distribution. Ollama

Why developers like it

-

Balanced multilingual coding and strong McEval/MdEval scores reported by the community; practical to host locally. Ollama+1

-

Good choice as a private assistant across Python/JS + niche languages.

Best for: Teams prioritizing local deployment, multilingual projects, and robust performance without closed-model costs.

“Systems” angle: Copilot & editor ecosystems

Your model choice is only half the story. The system that wraps it—IDE, RAG, tests, run-tools—often determines real-world output. In 2025, GitHub Copilot exposes multiple premium models (including GPT-5, Claude 4.x, Gemini 2.5 Pro, and GPT-5-Codex), plus a smart mode in Microsoft Copilot that routes tasks to the best model for you. This matters because a routed system can auto-balance latency vs reasoning depth, and update the backend as models evolve. GitHub Docs+2GitHub Docs+2

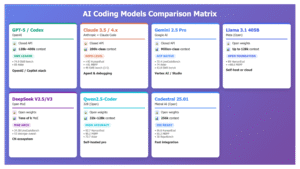

Head-to-head quick comparison

| Model/System | Best At | Context & Agent Strength | Ecosystem/Deploy | Cost Posture | When to Choose |

|---|---|---|---|---|---|

| GPT-5 | Tough bug-fixes, refactors, design-level changes | Excellent reasoning; strong agent behavior | First-class in Cursor, Copilot, Azure | Premium | You want the strongest generalist and top integrations. OpenAI+2Cursor+2 |

| Gemini 2.5 Pro | Repo-scale edits, web app builds, long-context | High on SWE-bench Verified with custom agent | Google AI Studio, Gemini API | Premium/varies | You need long context + Google ecosystem. blog.google+1 |

| Claude 4.x / 3.5 Sonnet | Clean diffs, safe refactors, explainability | Strong internal agentic evals | Anthropic Console, many IDEs | Premium | You value cautious, readable changes. Anthropic+1 |

| Llama 4 Maverick (open-weight) | Private/VPC coding assistants | Good reasoning/coding for OSS | Self-host, OSS stacks | Low–medium | You need control & fine-tuning. The Verge |

| Mistral Codestral 25.08 | Fast FIM, unit tests, inner loop | Tuned for low-latency coding tasks | IDE extension, Devstral, embeddings | Low–medium | You want speed & cost in IDE completions. docs.mistral.ai+1 |

| DeepSeek-V3.1 / Coder | Open agentic bug-fixing | Competitive SWE-bench Verified (agent mode) | Self-host/OSS | Low | You want open, strong agentic abilities. Hugging Face |

| Qwen2.5-Coder | Multilingual coding & repair | Solid community scores | Self-host/OSS | Low | You need local multilingual coding help. Ollama |

Practical buying guide (2025)

-

Fastest path to productivity (cloud, best-of-breed): Start with GPT-5 or Claude 4.x inside Copilot or Cursor. You’ll get robust diffs, test hints, and context handling with minimal setup. GitHub Docs+2The Verge+2

-

Long-context, repo-wide changes and code agents: Try Gemini 2.5 Pro with a structured agent harness; it’s particularly good at whole-project transformations and verification. blog.google

-

Open, private, and cost-controlled: Choose Llama 4 Maverick if you need open weights and strong reasoning. Pair with vector search + CI tests. For low-latency IDE loops, add Codestral 25.08. The Verge+1

-

Open-source with credible agentic results: DeepSeek-V3.1 is a standout, especially if you aim to reproduce SWE-bench Verified outcomes with your own agents. Qwen2.5-Coder is great for multilingual teams and local hosting. Hugging Face+1

Tips to get better code from any LLM/system

-

Give the model the repo, not just a file. Provide key files, tests, and build steps; link the failing test output.

-

Ask for diffs and tests. “Propose a minimal diff + unit test demonstrating the fix.”

-

Use tools. Let the system run the tests, grep, and build; this reduces hallucinations.

-

Enforce policies. Pre-commit hooks, security scanners, and license checks must run on LLM patches.

-

Adopt agent harnesses. Simple wrappers around SWE-bench-style flows (retrieve → plan → edit → test → iterate) can double real-world success, especially with Gemini/GPT-5/DeepSeek. swebench.com

The emerging 2025 picture

-

Convergence at the top: GPT-5, Gemini 2.5 Pro, and Claude 4.x form the “front row” for hard engineering work. Differences show up more in ecosystem fit and latency/cost than baseline competence. OpenAI+2blog.google+2

-

Open-weight renaissance: Llama 4 Maverick + DeepSeek-V3.1 narrow the gap for agentic repo fixes—good enough that many orgs can self-host without giving up too much quality. The Verge+1

-

Specialization matters: Codestral’s low-latency FIM and Qwen2.5-Coder’s multilingual coverage show that the “best” model depends on workflow shape (inner loop vs. PR review vs. agentic automation). docs.mistral.ai+1

Frequently asked quick picks

-

One model to rule them all (cloud): GPT-5. OpenAI

-

Agentic repo editing with long context: Gemini 2.5 Pro. blog.google

-

Most readable refactors & explanations: Claude 4.x / 3.5 Sonnet. Anthropic

-

Open-weight for VPC/self-host: Llama 4 Maverick or DeepSeek-V3.1. The Verge+1

-

Fast autocomplete + tests: Codestral 25.08. docs.mistral.ai

-

Local multilingual coding: Qwen2.5-Coder. Ollama

Final thoughts

In 2025, “best coding LLM” is contextual. If your top constraint is capability, go GPT-5. If it’s workflow fit (long context, automated verification), Gemini 2.5 Pro is compelling. If it’s explainability and safe refactors, Claude shines. If it’s sovereignty and cost, open-weight lines (Llama 4, DeepSeek, Qwen, plus Mistral Codestral for speed) deliver impressive ROI—especially when wrapped in a good agent harness and strong CI. docs.mistral.ai+5OpenAI+5blog.google+5

Sources & further reading

-

OpenAI on GPT-5 and coding performance; GPT-5 for developers. OpenAI+1

-

Google on Gemini 2.5 Pro coding and long-context repo work; model catalog. blog.google+1

-

Anthropic on Claude 3.5 Sonnet agentic coding results; 2025 round-ups referencing Claude 4.x. Anthropic+1

-

SWE-bench leaderboard and agentic coding ecosystem. swebench.com

-

Meta Llama 4 releases and coverage. The Verge+2Barron’s+2

-

Mistral Codestral updates and docs (25.01 / 25.08) and coding stack. mistral.ai+2mistral.ai+2

-

DeepSeek V3.1 evaluations & model card; DeepSeek-V3 repo. Hugging Face+1

-

Qwen2.5-Coder overview (open-weight). Ollama

-

GitHub Copilot supported models & model comparison; Microsoft Copilot GPT-5 rollout. GitHub Docs+2GitHub Docs+2

For quick updates, follow our whatsapp –https://whatsapp.com/channel/0029VbAabEC11ulGy0ZwRi3j

https://bitsofall.com/https-yourblogdomain-com-deepagent-unpacking-the-next-gen-ai-agent-revolution/

🧠 How to Create AI-Ready APIs: A Complete Developer’s Guide for 2025

How to Design a Persistent Memory and Personalized Agentic AI System with Decay and Self-Evaluation