How an AI Agent Chooses What to Do Under Tokens, Latency, and Tool-Call Budget Constraints

Artificial Intelligence agents don’t operate in a world of infinite resources. Every real-world AI agent—whether it’s powering a chatbot, automating business workflows, or coordinating multi-agent systems—must constantly make decisions under strict constraints. Tokens cost money, latency impacts user experience, and tool calls are limited by both budgets and system rules.

Yet despite these limits, modern AI agents often feel intelligent, decisive, and even strategic. How?

This article takes a deep dive into how AI agents decide what to do when faced with competing goals and hard constraints like token budgets, latency requirements, and tool-call limits. We’ll explore internal reasoning loops, trade-offs, planning heuristics, and real-world design patterns used in production-grade AI systems.

Why Constraints Matter in AI Agent Decision-Making

At first glance, constraints may sound like a technical inconvenience. In reality, they are core to intelligence.

Human decision-making is also shaped by limits—time, energy, attention. AI agents are no different. Without constraints, agents would:

-

Overthink trivial tasks

-

Call tools unnecessarily

-

Generate excessively verbose responses

-

Become slow, expensive, and impractical

Modern AI agents are designed not just to reason, but to reason efficiently.

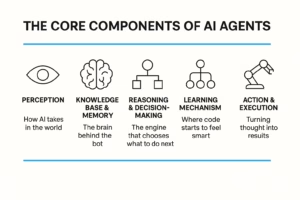

The Three Core Constraints Every AI Agent Faces

Before understanding how agents choose actions, we need to understand what they’re optimizing against.

1. Token Budget Constraints

Tokens represent the thinking and communication currency of large language models.

Every agent must manage:

-

Input tokens (context, memory, prompts)

-

Internal reasoning tokens (chain-of-thought or hidden reasoning)

-

Output tokens (final response)

Exceeding token limits means:

-

Truncated context

-

Loss of memory

-

Higher cost

-

Slower performance

Agents must constantly decide:

“Is this thought worth spending tokens on?”

2. Latency Constraints

Latency is about how fast the agent must respond.

Some environments demand:

-

Sub-second replies (voice assistants, real-time UX)

-

Low jitter (customer support bots)

-

Predictable response times (enterprise workflows)

Latency pressure forces agents to:

-

Skip deep planning

-

Reduce tool calls

-

Prefer heuristics over exhaustive reasoning

3. Tool-Call Budget Constraints

Tools—APIs, databases, search engines, code interpreters—are powerful but costly.

Each tool call:

-

Adds latency

-

Increases failure risk

-

May incur monetary cost

-

Often has rate limits

Agents must choose:

-

Which tool to call

-

When to call it

-

Whether to call it at all

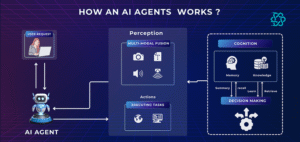

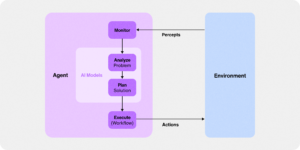

The Agent Decision Loop: Think → Decide → Act

At a high level, AI agents operate in a decision loop.

Step 1: Task Interpretation

The agent first evaluates:

-

Task complexity

-

Required accuracy

-

Time sensitivity

-

External dependencies

This stage determines whether the agent should:

-

Answer directly

-

Plan internally

-

Use tools

-

Decompose the task

Step 2: Constraint-Aware Planning

The agent implicitly or explicitly estimates:

-

Remaining token budget

-

Acceptable latency window

-

Available tool calls

This forms a resource envelope within which the agent must operate.

Step 3: Action Selection

The agent chooses the best next action:

-

Generate text

-

Call a tool

-

Ask a clarification

-

End the task

This choice is probabilistic, heuristic-driven, and constraint-aware.

How AI Agents Reason Under Token Constraints

Token scarcity fundamentally changes how an agent thinks.

Shallow vs Deep Reasoning

When token budgets are tight, agents:

-

Use compressed reasoning

-

Avoid long chains of thought

-

Rely on learned patterns

When tokens are plentiful, agents:

-

Explore alternatives

-

Perform step-by-step planning

-

Self-check outputs

This leads to adaptive reasoning depth.

Context Pruning and Memory Compression

Agents often:

-

Summarize prior context

-

Drop low-relevance details

-

Compress memory into embeddings

This mirrors human note-taking—we don’t remember everything, only what’s useful.

Early Stopping Heuristics

Agents are trained to stop reasoning when:

-

Confidence crosses a threshold

-

The task appears trivial

-

Marginal utility of further thinking is low

In simple terms:

“Good enough is better than perfect.”

How Latency Shapes Agent Behavior

Latency constraints push agents toward speed over optimality.

Fast-Path vs Slow-Path Decisions

Many agents have two modes:

-

Fast-path: Direct response, minimal reasoning

-

Slow-path: Planning, tools, verification

Latency-sensitive tasks default to fast-path unless risk is high.

Parallel Reasoning and Speculation

To save time, agents may:

-

Predict likely tool results

-

Draft partial responses while waiting

-

Choose the most probable action early

This speculative execution improves responsiveness but increases error risk.

User Perception as a Latency Constraint

Humans perceive delays differently:

-

<300 ms feels instant

-

2 seconds feels slow

-

5 seconds feels broken

Agents optimize not just for actual latency, but perceived latency.

Tool-Call Budgeting: When Should an Agent Use Tools?

Tool usage is where agent intelligence becomes most visible.

Cost–Benefit Analysis of Tool Calls

Before calling a tool, agents implicitly estimate:

-

Probability the tool improves answer quality

-

Expected latency

-

Risk of failure

-

Token overhead

If the benefit doesn’t clearly outweigh the cost, the agent skips the tool.

Tool Deferral Strategies

Agents often:

-

Attempt an approximate answer first

-

Call tools only if confidence is low

-

Cache previous tool outputs

This mirrors human behavior—we Google only when unsure.

Tool Chaining Limits

Complex tasks may require multiple tools, but agents:

-

Cap chain length

-

Abort early on partial success

-

Fall back to best-effort answers

This avoids infinite loops and runaway costs.

Priority Heuristics: How Agents Choose What Matters Most

Agents rank actions using learned heuristics such as:

-

Expected value: How much does this improve the result?

-

Risk reduction: Does this prevent a bad outcome?

-

User intent clarity: Is more info needed?

-

Constraint pressure: Are we running out of budget?

These heuristics are not hard-coded rules—they emerge from training on massive decision datasets.

Planning vs Reactivity: The Core Trade-Off

Under tight constraints, agents become reactive.

Under loose constraints, agents become deliberative.

| Scenario | Agent Behavior |

|---|---|

| Chat response | Reactive |

| Code generation | Semi-planned |

| Autonomous workflows | Highly planned |

| Multi-agent coordination | Strategic |

Constraint-aware agents shift smoothly between these modes.

Failure Handling Under Constraints

When resources run low, agents must degrade gracefully.

Common Degradation Strategies

-

Shorter answers

-

Reduced explanation

-

Fewer examples

-

Partial task completion

Good agents fail informatively, not silently.

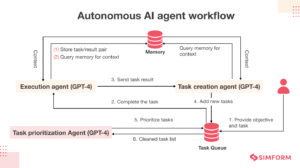

Multi-Agent Systems: Shared Constraints, Shared Decisions

In multi-agent setups:

-

Budgets are often shared

-

Agents specialize tasks

-

Tool calls are delegated

This allows systems to stay efficient even as complexity grows.

Why This Matters for Real-World AI Applications

Understanding constraint-driven decision-making helps you:

-

Design better agent prompts

-

Choose appropriate latency targets

-

Optimize tool integration

-

Reduce costs without sacrificing quality

In short, constraints don’t weaken AI agents—they shape intelligence.

The Future: Smarter Constraint-Aware Agents

Next-generation agents will:

-

Dynamically adjust reasoning depth

-

Learn personalized latency preferences

-

Predict tool utility more accurately

-

Optimize across long-term budgets

The most powerful agents won’t be the ones that think the most—but the ones that think just enough.

Final Thoughts

AI agents don’t choose actions in a vacuum. Every decision is shaped by tokens, time, and tools.

What looks like intuition is really:

-

Probabilistic reasoning

-

Budget-aware planning

-

Experience distilled into heuristics

Understanding this makes it clear:

Artificial intelligence isn’t about unlimited thinking—it’s about intelligent restraint.

For quick updates, follow our whatsapp –https://whatsapp.com/channel/0029VbAabEC11ulGy0ZwRi3j

https://bitsofall.com/what-are-context-graphs/

https://bitsofall.com/microsoft-releases-vibevoice-asr-deep-dive/

Apple Siri Overhaul — what’s changing, why it matters, and what to expect

What Is Clawdbot? A Deep Dive Into the AI-Powered Robotic Worker