How to Design an Agentic AI Architecture with LangGraph and OpenAI Using Adaptive Deliberation, Memory Graphs, and Reflexion Loops

Agentic AI is no longer just about chatbots answering questions. The new frontier is systems that think, plan, remember, reflect, and adapt over time. From autonomous research assistants to multi-step business automation agents, the core challenge is no longer model intelligence alone — it’s architecture.

This article walks you through how to design a modern agentic AI architecture using LangGraph and OpenAI, focusing on three powerful concepts:

- Adaptive Deliberation – agents that decide how much to think
- Memory Graphs – structured, long-term, relational memory
- Reflexion Loops – self-critique and learning from mistakes

By the end, you’ll understand how these pieces fit together, why LangGraph is uniquely suited for this job, and how to build scalable, production-ready agentic systems.

What Is Agentic AI (Beyond the Buzzword)?

Agentic AI refers to systems that can:

- Set or accept goals
- Plan multi-step actions
- Interact with tools and environments
- Evaluate outcomes
- Adapt behavior over time

Unlike prompt-based LLM applications, agentic systems are stateful, iterative, and decision-driven.

Traditional LLM pipelines look like this:

Input → Prompt → Output

Agentic pipelines look like this:

Observe → Think → Decide → Act → Reflect → Remember → Repeat

This shift demands a new architectural approach — and this is where LangGraph shines.

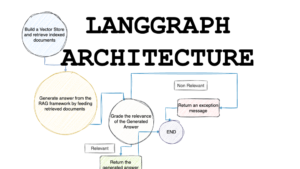

Why LangGraph Is Built for Agentic Architectures

LangGraph is a library from the LangChain team designed specifically for stateful, cyclic, multi-agent workflows. It integrates with LangChain but can also be used on its own.

Key strengths of LangGraph:

- Graph-based execution instead of linear chains
- Persistent state across steps
- Conditional branching and loops
- Explicit control over agent reasoning flow

In other words, LangGraph lets you design how an agent thinks, not just what it says.

High-Level Architecture of an Agentic AI System

Before diving into details, let’s look at the conceptual architecture:

Core Components

- LLM Reasoning Core (OpenAI models)
- State Graph (LangGraph)
- Adaptive Deliberation Controller
- Memory Graph (short-term + long-term)
- Tool Interface Layer
- Reflexion & Self-Evaluation Loop

These components work together to create an agent that is context-aware, self-correcting, and goal-driven.

Step 1: Designing the Agent State with LangGraph

Everything in LangGraph revolves around state.

A typical agent state may include:

- Current goal or task
- Conversation history
- Working memory
- Retrieved long-term memories
- Tool outputs
- Self-evaluation signals

Why State Matters

Without explicit state:

- Agents forget past decisions
- Reflection is impossible
- Memory becomes flat and unstructured

LangGraph treats state as a first-class citizen, enabling agents to evolve over time rather than restarting every turn.
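
As a rough illustration, the state fields listed above can be written as a `TypedDict`, which is the kind of schema LangGraph's `StateGraph` accepts. Annotating a field with a reducer such as `operator.add` is how LangGraph is told to append to that field instead of overwriting it. The field names and the `apply_update` helper are assumptions made for this sketch; the helper only imitates the merge behaviour so the example runs without LangGraph installed.

```python
import operator
from typing import Annotated, TypedDict, get_type_hints


class AgentState(TypedDict, total=False):
    goal: str                                           # current goal or task
    messages: Annotated[list[str], operator.add]        # conversation history (appended)
    working_memory: dict[str, str]                      # scratch values for this task
    retrieved_memories: list[str]                       # long-term memories pulled in
    tool_outputs: Annotated[list[dict], operator.add]   # tool results (appended)
    evaluation: dict[str, float]                        # self-evaluation signals


def apply_update(state: AgentState, update: AgentState) -> AgentState:
    """Hypothetical stand-in for a reducer-aware merge of a node's partial update."""
    hints = get_type_hints(AgentState, include_extras=True)
    merged = dict(state)
    for key, value in update.items():
        reducers = getattr(hints[key], "__metadata__", ())
        if reducers and key in merged:
            merged[key] = reducers[0](merged[key], value)  # e.g. list concatenation
        else:
            merged[key] = value                            # plain overwrite
    return merged


state: AgentState = {"goal": "Summarize LangGraph", "messages": ["user: hi"]}
state = apply_update(state, {"messages": ["agent: hello"], "goal": "Answer greeting"})
```

After the update, `messages` holds both entries while `goal` is replaced, which is the difference between a reduced field and a plain one.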

Step 2: Adaptive Deliberation — Teaching Agents How Much to Think

Not every task deserves deep reasoning.

A simple query like “Convert 10 USD to INR” should not trigger chain-of-thought reasoning. But “Design a go-to-market strategy” absolutely should.

What Is Adaptive Deliberation?

Adaptive deliberation allows an agent to dynamically decide:

- Whether to respond immediately
- Or enter a deeper planning and reasoning loop

How It Works Conceptually

- Agent receives input
- A deliberation gate evaluates task complexity
- Based on signals (ambiguity, risk, novelty), the agent:
  - Responds directly, or
  - Activates deeper reasoning nodes

Why This Matters

- Reduces latency and cost
- Improves reliability on complex tasks
- Prevents overthinking trivial requests

LangGraph enables this naturally through conditional edges that route execution paths based on model outputs.

Step 3: Planning and Action Nodes

Once deliberation is triggered, the agent enters a planning phase.

Typical Planning Steps

- Break goal into sub-tasks
- Decide tool usage
- Set intermediate checkpoints

In LangGraph, this might look like:

- Planner Node → generates action plan
- Executor Node → carries out steps
- Verifier Node → checks correctness

This separation mirrors how humans work:

Think → Do → Check
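
To make the three roles concrete, here is a small sketch in plain Python. Each function could be registered as a LangGraph node, but it is written as ordinary functions so it runs on its own. The `Step` dataclass, the `TOOLS` table, and the fixed two-step plan are hypothetical; a real planner would ask the model to produce the plan.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Step:
    description: str
    tool: str
    argument: str
    result: Optional[str] = None  # filled in by the executor


# Hypothetical tools; real ones would hit a search API, a database, and so on.
TOOLS: dict[str, Callable[[str], str]] = {
    "search": lambda query: f"notes about {query}",
    "summarize": lambda topic: f"summary of {topic}",
}


def planner(goal: str) -> list[Step]:
    """Think: break the goal into sub-tasks and choose a tool for each."""
    return [
        Step("Collect background material", "search", goal),
        Step("Condense the findings", "summarize", goal),
    ]


def executor(plan: list[Step]) -> list[Step]:
    """Do: run each step with its chosen tool and record the result."""
    for step in plan:
        step.result = TOOLS[step.tool](step.argument)
    return plan


def verifier(plan: list[Step]) -> bool:
    """Check: every step must have produced a non-empty result."""
    return all(step.result for step in plan)


plan = executor(planner("LangGraph"))
plan_ok = verifier(plan)
```

Keeping the verifier separate from the executor means a failed check can route back to the planner without re-running the steps that already succeeded.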

Step 4: Memory Graphs — Moving Beyond Chat History

Most AI applications treat memory as a flat conversation log. This is a major limitation.

What Is a Memory Graph?

A memory graph stores information as entities and relationships, not raw text.

Examples:

- User → prefers → concise explanations
- Project → depends on → LangGraph
- Error → caused by → incorrect API usage

Why Graph-Based Memory Is Powerful

- Enables semantic retrieval
- Supports reasoning over relationships
- Scales better than long context windows
- Allows selective recall instead of full replay
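
One simple way to picture a memory graph is as a store of (subject, relation, object) triples. The sketch below is an in-memory toy, not a production store, and the `MemoryGraph` class and its method names are assumptions for illustration. It loads the three example facts from above plus one extra illustrative edge (`Error → occurred in → Project`) so that a multi-hop lookup has something to traverse.

```python
from collections import defaultdict, deque
from typing import Optional


class MemoryGraph:
    def __init__(self) -> None:
        # subject -> {(relation, object), ...}
        self._edges: dict[str, set[tuple[str, str]]] = defaultdict(set)

    def add(self, subject: str, relation: str, obj: str) -> None:
        self._edges[subject].add((relation, obj))

    def query(
        self,
        subject: Optional[str] = None,
        relation: Optional[str] = None,
        obj: Optional[str] = None,
    ) -> list[tuple[str, str, str]]:
        """Return all triples matching the given fields (None is a wildcard)."""
        return sorted(
            (s, r, o)
            for s, pairs in self._edges.items()
            for r, o in pairs
            if subject in (None, s) and relation in (None, r) and obj in (None, o)
        )

    def related(self, start: str, max_hops: int = 2) -> set[str]:
        """Breadth-first walk over outgoing edges, up to max_hops away."""
        seen: set[str] = set()
        frontier = deque([(start, 0)])
        while frontier:
            node, hops = frontier.popleft()
            if hops == max_hops:
                continue
            for _, neighbor in self._edges.get(node, ()):
                if neighbor not in seen and neighbor != start:
                    seen.add(neighbor)
                    frontier.append((neighbor, hops + 1))
        return seen


memory = MemoryGraph()
memory.add("User", "prefers", "concise explanations")
memory.add("Project", "depends on", "LangGraph")
memory.add("Error", "caused by", "incorrect API usage")
memory.add("Error", "occurred in", "Project")  # illustrative extra edge
```

`memory.query(subject="User")` recalls only what is known about the user, while `memory.related("Error")` follows relationships outward, which is the selective recall a flat chat log cannot offer.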

Memory Types in an Agentic System

- Short-Term Memory
  - Current task context
  - Temporary variables
- Long-Term Memory
  - User preferences
  - Past successes and failures
  - Learned heuristics
- Episodic Memory
  - Complete task trajectories
  - Used for reflection and learning

LangGraph allows memory nodes to be explicit parts of the execution graph, not hidden side effects.

Step 5: Retrieval-Augmented Memory Access

Memory graphs become powerful when paired with retrieval mechanisms.

Before planning or acting, the agent can:

- Query relevant past experiences
- Fetch similar task outcomes
- Recall known constraints

This avoids:

- Repeating mistakes
- Reinventing solutions
- Hallucinating known facts

In practice, this often combines:

- Vector search
- Graph traversal
- LLM-based memory summarization
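
The first two ingredients can be combined in a few lines. In this sketch a bag-of-words cosine similarity stands in for a real embedding model, and a small `LINKS` table stands in for the memory graph; both, along with the sample memories, are assumptions chosen so the example runs without external services. The summarization step is left out.

```python
import math
from collections import Counter

# Hypothetical stored memories and graph links between them.
MEMORIES = {
    "m1": "API call failed because the request schema was wrong",
    "m2": "User prefers concise explanations",
    "m3": "Validate request schema before calling the API",
}
LINKS = {"m1": ["m3"]}  # m1 --resolved by--> m3


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[token] * b[token] for token in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, top_k: int = 1) -> list[str]:
    # Step 1: vector search picks the closest memories as seeds.
    q = embed(query)
    ranked = sorted(MEMORIES, key=lambda m: cosine(q, embed(MEMORIES[m])), reverse=True)
    selected = ranked[:top_k]
    # Step 2: graph traversal pulls in memories linked to the seeds.
    for seed in list(selected):
        for neighbor in LINKS.get(seed, []):
            if neighbor not in selected:
                selected.append(neighbor)
    return [MEMORIES[m] for m in selected]


recalled = retrieve("why did the API call fail")
```

The similarity search finds the past failure, and the graph link brings along the lesson attached to it, even though the lesson itself is a weaker textual match for the query.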

Step 6: Reflexion Loops — Teaching Agents to Learn from Mistakes

Reflexion, introduced by Shinn et al. in 2023 as a form of verbal reinforcement learning, is one of the more influential ideas in recent agent research.

What Is Reflexion?

Reflexion is a structured self-critique loop where an agent:

- Evaluates its own output
- Identifies errors or inefficiencies
- Updates memory with lessons learned
- Tries again with improved strategy

Example Reflexion Prompt

- What went wrong?
- Why did it fail?
- What should be done differently next time?

Why Reflexion Is Game-Changing

Without reflexion:

- Agents repeat the same mistakes
- Performance plateaus quickly

With reflexion:

- Agents improve over time
- Learning happens within deployment
- Systems become more reliable and aligned

LangGraph enables reflexion naturally using cyclic edges, something linear chains struggle with.

Step 7: Tool Use as First-Class Actions

Agentic systems are only as powerful as their tools.

Common tools include:

- Web search
- Code execution
- Databases
- APIs
- Internal business systems

Best Practices for Tool Integration

- Treat tools as actions, not prompts
- Validate tool outputs before trusting them
- Log tool usage for reflexion analysis

LangGraph’s structure allows tools to be inserted at precise points in the reasoning loop, avoiding uncontrolled tool sprawl.
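
The three practices above fit naturally into a thin wrapper around each tool. The `ToolRegistry` and `ToolResult` names below are hypothetical, and the `divide` tool is a toy; the point is only that every call goes through one place that validates the output and records what happened.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class ToolResult:
    tool: str
    args: dict
    ok: bool
    output: Any = None
    error: str = ""


@dataclass
class ToolRegistry:
    _tools: dict = field(default_factory=dict)
    log: list = field(default_factory=list)  # history for later reflexion analysis

    def register(
        self, name: str, fn: Callable[..., Any], validate: Callable[[Any], bool]
    ) -> None:
        self._tools[name] = (fn, validate)

    def call(self, name: str, **args: Any) -> ToolResult:
        """Run a tool as an explicit action: execute, validate, log."""
        fn, validate = self._tools[name]
        try:
            output = fn(**args)
            ok = validate(output)
            error = "" if ok else "output failed validation"
            result = ToolResult(name, args, ok, output, error)
        except Exception as exc:  # tool failures become data, not crashes
            result = ToolResult(name, args, False, error=str(exc))
        self.log.append(result)
        return result


registry = ToolRegistry()
registry.register("divide", lambda a, b: a / b, lambda out: isinstance(out, float))

good = registry.call("divide", a=6, b=3)
bad = registry.call("divide", a=1, b=0)
```

Because failures are returned as `ToolResult` objects instead of raised, a verifier or reflexion node can inspect `registry.log` and decide what to retry.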

Step 8: Multi-Agent Coordination (Optional but Powerful)

Advanced systems often involve multiple specialized agents, such as:

- Planner agent
- Research agent
- Critic agent
- Executor agent

LangGraph supports this via:

- Shared state
- Role-specific nodes
- Message passing between agents

This mirrors how human teams divide work and can improve performance on complex tasks, at the cost of extra coordination overhead.
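
The sketch below shows shared state, role-specific functions, and message passing in plain Python. It runs the four agents in a fixed order for simplicity, whereas a LangGraph version would make each one a node and let edges decide the order. The `Message` and `SharedState` classes and the stubbed agent logic are assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    sender: str
    recipient: str
    content: str


@dataclass
class SharedState:
    task: str
    inbox: list = field(default_factory=list)      # messages between agents
    artifacts: dict = field(default_factory=dict)  # work products all agents can read


def planner_agent(state: SharedState) -> None:
    state.inbox.append(Message("planner", "researcher", f"Find background on: {state.task}"))


def research_agent(state: SharedState) -> None:
    request = next(m for m in state.inbox if m.recipient == "researcher")
    state.artifacts["notes"] = f"notes for '{request.content}'"  # stub for real research
    state.inbox.append(Message("researcher", "critic", "Notes ready for review"))


def critic_agent(state: SharedState) -> None:
    verdict = "approved" if state.artifacts.get("notes") else "rejected: no notes"
    state.artifacts["review"] = verdict
    state.inbox.append(Message("critic", "executor", verdict))


def executor_agent(state: SharedState) -> None:
    if state.artifacts.get("review") == "approved":
        state.artifacts["result"] = f"report based on {state.artifacts['notes']}"


def run_team(task: str) -> SharedState:
    state = SharedState(task)
    for agent in (planner_agent, research_agent, critic_agent, executor_agent):
        agent(state)
    return state


team_state = run_team("LangGraph")
```

Each agent reads only the messages addressed to it and writes its output back to the shared state, so roles can be swapped or added without changing the others.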

Step 9: Safety, Guardrails, and Alignment

Agentic AI must be bounded.

Key guardrails include:

- Tool usage limits
- Cost and time budgets
- Safety filters on actions
- Human-in-the-loop checkpoints

Adaptive deliberation helps here too — risky tasks can automatically escalate to deeper reasoning or human review.
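
A few of these guardrails can be expressed as a small budget object that every tool call is charged against, plus a check that flags risky actions for human review. The limits, the `RISKY_ACTIONS` set, and all names here are hypothetical defaults chosen for the sketch, not recommended values.

```python
import time
from dataclasses import dataclass, field


class BudgetExceeded(RuntimeError):
    """Raised when the agent runs past one of its limits."""


@dataclass
class Budget:
    max_tool_calls: int = 5
    max_cost_usd: float = 0.50
    max_seconds: float = 30.0
    tool_calls: int = 0
    cost_usd: float = 0.0
    started_at: float = field(default_factory=time.monotonic)

    def charge(self, cost_usd: float = 0.0) -> None:
        """Record one tool call and stop the run if any limit is crossed."""
        self.tool_calls += 1
        self.cost_usd += cost_usd
        if self.tool_calls > self.max_tool_calls:
            raise BudgetExceeded("tool call limit reached")
        if self.cost_usd > self.max_cost_usd:
            raise BudgetExceeded("cost budget exhausted")
        if time.monotonic() - self.started_at > self.max_seconds:
            raise BudgetExceeded("time budget exhausted")


# Hypothetical list of actions that should pause for a human.
RISKY_ACTIONS = {"send_email", "delete_record", "make_payment"}


def needs_human_review(action: str) -> bool:
    return action in RISKY_ACTIONS


budget = Budget(max_tool_calls=2)
budget.charge(0.10)
budget.charge(0.10)
try:
    budget.charge()
    stopped = False
except BudgetExceeded:
    stopped = True
```

In a graph, `needs_human_review` would typically sit on a conditional edge before the executor, so flagged actions route to an approval step instead of running automatically.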

Putting It All Together: A Reference Architecture

A production-ready agentic AI system typically looks like this:

- Input arrives
- Deliberation gate evaluates complexity
- Relevant memories are retrieved
- Planner constructs an action plan
- Executor performs steps with tools
- Verifier checks results
- Reflexion loop analyzes outcomes
- Memory graph is updated
- Final response is delivered

This is not a chatbot — it’s a thinking system.

Common Mistakes When Building Agentic Architectures

- Treating agents as glorified prompts
- Ignoring memory structure
- Overusing chain-of-thought
- Skipping reflection mechanisms
- Hardcoding reasoning paths

LangGraph exists specifically to help avoid these pitfalls.

The Future of Agentic AI

We are moving toward:

- Long-horizon agents
- Self-improving systems
- Collaborative AI teams
- Persistent digital workers

Architectures built with LangGraph, OpenAI models, adaptive deliberation, memory graphs, and reflexion loops are foundational to this future.

Final Thoughts

Designing agentic AI is less about clever prompts and more about system design.

By combining:

- LangGraph’s stateful graphs
- OpenAI’s reasoning-capable models
- Adaptive deliberation
- Graph-based memory
- Reflexion-driven learning

you can build AI systems that don’t just respond — they reason, adapt, and improve.

Agentic AI isn’t a single breakthrough.

It’s an architectural evolution — and LangGraph is one of the best tools we have to build it.
