vLLM vs TensorRT-LLM vs Hugging Face TGI vs LMDeploy

A Deep Technical Comparison for Production LLM Inference

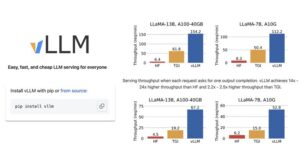

TL;DR: If you need the fastest kernel-level optimizations for large models on NVIDIA GPUs, TensorRT-LLM is the heavyweight pick. If you want a general-purpose, production-ready Python server that balances throughput and ease of use with advanced scheduling, vLLM is a strong contender. Hugging Face TGI (Text Generation Inference) is ideal when you need multi-backend flexibility, tight integration with the HF ecosystem, and a hardened Rust-based server for inference endpoints. LMDeploy is a performance-focused project built around compression, persistent (continuous) batching, and pragmatic kernel improvements; it can beat vLLM in some benchmarks but comes with different tradeoffs around maturity and ecosystem.

Introduction — why this comparison matters

Deploying LLMs to production isn’t only “model selection” — it’s choosing the right inference stack. Latency, throughput, memory footprint, hardware support, quantization options, batching strategy, and operational ergonomics (APIs, monitoring, autoscaling) all interact. The four projects compared here—vLLM, TensorRT-LLM, Hugging Face Text Generation Inference (TGI), and LMDeploy—represent different design philosophies: general-purpose serving, vendor-optimized compilation and kernels, production-grade multi-backend serving, and aggressive compression/throughput engineering respectively. Below I’ll compare them across architecture, performance optimizations, model & hardware support, quantization and memory handling, batching & concurrency, integration & ops, maturity & community, and recommended use cases.

1) Architectural overview

vLLM

vLLM is a Python/C++/CUDA inference engine focused on high throughput and memory efficiency for autoregressive generation. It originated at UC Berkeley and is now developed by a broad open-source community. Its core ideas are PagedAttention, which stores the KV cache in fixed-size blocks to limit fragmentation, and a continuous-batching scheduler that keeps the GPU fed as requests arrive and finish. vLLM provides a Python API and an OpenAI-compatible HTTP server, and it integrates with Hugging Face transformers and tokenizers for easy adoption.

TensorRT-LLM

NVIDIA’s TensorRT-LLM is part of the broader TensorRT ecosystem. It compiles models to optimized TensorRT engines, replacing many kernels with vendor-tuned implementations (custom attention kernels, fused ops), and supports advanced runtime techniques (speculative decoding, paged KV cache, in-flight batching). It targets the deepest level of GPU-stack optimization and is often paired with Triton Inference Server or custom runtimes.

Hugging Face TGI (Text Generation Inference)

TGI is a production inference server maintained by Hugging Face, built from a Rust router and Python model servers that communicate over gRPC. Its selling points are production readiness, multi-backend support (including integrations with vLLM and TensorRT-LLM), model compatibility with the HF Hub, and a robust API that powers Hugging Face’s own inference endpoints. TGI acts as a “unified server” that can dispatch to different backends depending on requirements.

LMDeploy

Developed primarily in the InternLM / MMDeploy ecosystem, LMDeploy is a toolkit for compressing, optimizing, and serving LLMs. It emphasizes aggressive kernel optimizations, persistent (continuous) batching, and custom CUDA kernels to push throughput on a range of NVIDIA GPUs; the project’s own benchmarks report higher throughput than vLLM in specific configurations. LMDeploy also includes tools for model quantization and deployment pipelines.

2) Performance optimizations: what each stack brings

- vLLM: Memory-efficient, paged KV cache layout (PagedAttention), efficient prefill handling, and a continuous-batching scheduler tuned for high throughput and short tail latency. It supports speculative decoding, with draft models available through companion libraries such as speculators. vLLM is engineered to serve many concurrent requests with good latency/throughput tradeoffs.

- TensorRT-LLM: Compiler and kernel fusion, highly optimized custom attention kernels, quantization support (FP8, FP4, INT8, and AWQ/SmoothQuant flows), paged KV caching to manage large contexts on limited GPU memory, and speculative decoding. This is the most low-level performance work of the four: large parts of the compute graph are replaced with vendor-tuned kernels for maximal GPU utilization.

- TGI: Gains come from flexible backend selection and an efficient Rust server runtime. TGI leverages backend-specific optimizations (for example, vLLM or TensorRT-LLM when configured) and focuses on throughput via batch scheduling and warmup strategies, while exposing a production API.

- LMDeploy: Persistent (continuous) batching, blocked KV cache, dynamic split & fuse, tensor-parallel kernels, and specialized CUDA kernels. It is explicitly built for throughput, and its published benchmarks claim improvements over vLLM on some hardware.
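Paged or blocked KV caching comes up for several of these stacks, so here is a minimal sketch of the idea in plain Python. It is a toy allocator, not the implementation used by any of the four projects: the class name `PagedKVCache`, the block size of 16 tokens, and the pool of 8 blocks are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field

BLOCK_SIZE = 16  # tokens per KV block (illustrative value, not a library default)


@dataclass
class PagedKVCache:
    """Toy block allocator: each sequence maps to a list of physical block ids."""
    num_blocks: int
    free_blocks: list = field(default_factory=list)
    block_tables: dict = field(default_factory=dict)  # seq_id -> [block ids]
    seq_lens: dict = field(default_factory=dict)      # seq_id -> tokens stored

    def __post_init__(self):
        self.free_blocks = list(range(self.num_blocks))

    def append_token(self, seq_id: str) -> None:
        """Reserve space for one more token, allocating a block only when needed."""
        length = self.seq_lens.get(seq_id, 0)
        if length % BLOCK_SIZE == 0:  # no block yet, or the last block is full
            if not self.free_blocks:
                raise MemoryError("no free KV blocks; a real scheduler would preempt")
            self.block_tables.setdefault(seq_id, []).append(self.free_blocks.pop())
        self.seq_lens[seq_id] = length + 1

    def free(self, seq_id: str) -> None:
        """Return all blocks of a finished sequence to the pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.seq_lens.pop(seq_id, None)


cache = PagedKVCache(num_blocks=8)
for _ in range(20):          # sequence "a" grows to 20 tokens
    cache.append_token("a")
for _ in range(5):           # sequence "b" grows to 5 tokens
    cache.append_token("b")
blocks_used = {seq: len(table) for seq, table in cache.block_tables.items()}
cache.free("a")              # blocks go back to the pool as soon as "a" finishes
print(blocks_used, len(cache.free_blocks))
```

The point of the design is that memory is reserved in small blocks as a sequence grows, instead of pre-allocating the maximum context length per request, so short and long requests can share one pool with little waste.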

3) Model, tokenizer and ecosystem support

- Model formats: TensorRT-LLM needs models that can be built into TensorRT engines, typically starting from Hugging Face checkpoints and using its own conversion scripts and build tooling rather than a generic ONNX export. vLLM, TGI, and LMDeploy work primarily with PyTorch/HF model formats; vLLM and TGI have tight Hugging Face compatibility out of the box. LMDeploy includes conversion tools and compression pipelines.

- Tokenizers & tokenizer speed: vLLM and TGI use HF tokenizers; TGI aims for endpoint stability with tokenizer consistency. TensorRT-LLM deployments often keep tokenization in Python on the host side and pass token IDs to the engine. LMDeploy provides tooling to ensure tokenization and packing match its optimized kernels.

- Multi-modal & emerging models: TensorRT-LLM is extending support to multimodal models on NVIDIA hardware. vLLM and LMDeploy are expanding beyond NVIDIA GPUs to other accelerators (the TPU, Gaudi, and Ascend families are the usual examples) via adapters and community drivers, with coverage differing by project. TGI’s multi-backend architecture makes it a sensible choice for mixed ecosystems.

4) Quantization, mixed precision and memory handling

- TensorRT-LLM: supports many quantization flows (FP8, FP4, INT8, AWQ variants) and provides tooling to compile quantized engines. Its precise hardware knowledge enables aggressive quantization with minimal accuracy loss.

- vLLM: supports 8-bit and 4-bit quantized models through integrations such as bitsandbytes, AWQ, and GPTQ, and its KV cache memory layout reduces the working set. It is more conservative about accuracy vs. throughput tradeoffs than vendor compilers.

- TGI: acts as the orchestrator, so you can plug in quantized or non-quantized backends. For example, TGI can route requests to a TensorRT-LLM engine for quantized inference or to vLLM for unquantized FP16/BF16 runs.

- LMDeploy: explicit focus on compression and quantization pipelines; supports mixed precision and offers optimizations like blocked KV caches and split/fuse strategies to keep larger contexts in memory. Its benchmarks emphasize throughput gains after applying these techniques.
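To see why precision and KV cache layout matter so much for memory, a back-of-the-envelope calculation helps. The sketch below uses the standard sizing formulas; the 7B parameter count and the 32-layer, 32-head, 128-dimension shape are hypothetical example values, and the weight estimate ignores the small overhead of quantization scales and zero points.

```python
GIB = 1024 ** 3


def weight_bytes(num_params: int, bits_per_weight: int) -> int:
    """Approximate weight memory; ignores quantization scales/zero-points."""
    return num_params * bits_per_weight // 8


def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int, bytes_per_elem: int = 2) -> int:
    """KV cache size: one K and one V tensor per layer, hence the factor 2."""
    return (2 * num_layers * num_kv_heads * head_dim
            * seq_len * batch_size * bytes_per_elem)


params = 7_000_000_000  # hypothetical 7B-parameter model
for bits in (16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_bytes(params, bits) / GIB:.2f} GiB")

# Hypothetical model shape: 32 layers, 32 KV heads, head_dim 128, FP16 cache.
kv = kv_cache_bytes(num_layers=32, num_kv_heads=32, head_dim=128,
                    seq_len=4096, batch_size=1)
print(f"KV cache for one 4096-token sequence: {kv / GIB:.2f} GiB")
```

With these example numbers a single 4096-token sequence needs 2 GiB of FP16 KV cache, which is why serving many concurrent long-context requests quickly becomes a KV cache problem rather than a weights problem.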

5) Batching, concurrency and latency behavior

- vLLM: continuous batching and a scheduler tuned for many concurrent short requests; good tail latency with steady throughput. Ideal when many users send short prompts or conversational traffic.

- TensorRT-LLM: shines both on large-batch offline workloads and on low-latency single requests that use speculative decoding. It supports in-flight batching and a paged KV cache to amortize memory and compute. Best when you can exploit GPU throughput with larger batches or compiled kernels.

- TGI: leverages server-side batch formation and can use vLLM’s or TensorRT-LLM’s batching under the hood. Because TGI is a standalone server with a production HTTP API (gRPC is used internally between its router and model shards), it’s straightforward to integrate into autoscaling architectures and gateway patterns.

- LMDeploy: persistent (continuous) batching is one of its headline features: it keeps batching across requests to hold the GPU near saturation for higher throughput. The other stacks implement the same idea under names such as continuous or in-flight batching, so the difference lies in the implementation rather than the concept. This improves overall TPS at the cost of some request-level latency variability. Good when throughput is king.
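The difference between static batching and continuous (persistent, in-flight) batching is easiest to see by counting decode steps. The simulation below is a deliberately simplified sketch: it assumes each decode step costs the same regardless of batch occupancy, ignores prefill, and uses made-up output lengths.

```python
from collections import deque


def static_batching_steps(output_lens, max_batch):
    """Each batch runs until its longest request finishes; no one joins midway."""
    steps = 0
    for i in range(0, len(output_lens), max_batch):
        steps += max(output_lens[i:i + max_batch])
    return steps


def continuous_batching_steps(output_lens, max_batch):
    """Freed slots are refilled from the queue before every decode step."""
    waiting = deque(output_lens)   # remaining tokens per queued request (each >= 1)
    running = []                   # remaining tokens per active request
    steps = 0
    while waiting or running:
        while waiting and len(running) < max_batch:
            running.append(waiting.popleft())
        steps += 1                                        # one decode step for the batch
        running = [r - 1 for r in running if r > 1]       # drop requests that just finished
    return steps


# Hypothetical workload: a mix of short (2-token) and long (8-token) generations.
lens = [2, 8, 2, 2, 8, 2]
static_steps = static_batching_steps(lens, max_batch=2)
continuous_steps = continuous_batching_steps(lens, max_batch=2)
print(static_steps, continuous_steps)
```

In this toy workload the static scheduler leaves a slot idle whenever a short request is paired with a long one, while the continuous scheduler refills it immediately, so the same work finishes in fewer steps.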

6) Hardware & platform support

- Best on NVIDIA: TensorRT-LLM (by design) and LMDeploy (optimized CUDA kernels).

- Multi-accelerator: vLLM has community adapters for Ascend, HPU (Gaudi), and TPU inference flows; TGI aims to be multi-backend and thus supports different runtimes depending on the selected backend. LMDeploy focuses primarily on NVIDIA but also integrates with other toolchains via conversion.

7) Integration, APIs, and ops friendliness

- vLLM: Pythonic API, easy HF integration, good for teams who want to iterate quickly and deploy with Python toolchains and Kubernetes. It also has recipes and a growing docs site.

- TensorRT-LLM: requires model conversion and more ops work. It’s operationally heavier (building engines, managing Triton and containers), but it integrates well into NVIDIA-first infrastructure and is compatible with Triton and custom runtimes. Ops teams get strong telemetry and resource utilization but must own more complexity.

- TGI: provides a production server (Rust) with an HTTP API, model loading abstractions, and multi-backend plumbing that make it easy to expose consistent endpoints. Good for organizations that want HF ecosystem integration with less backend plumbing.

- LMDeploy: includes end-to-end pipelines for compression → conversion → serving. It’s practical for teams that want to squeeze more TPS out of commodity NVIDIA hardware and accept some integration complexity.
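One practical consequence for integration: several of these servers document an OpenAI-compatible chat completions route, so a single thin client can often be pointed at different stacks during an evaluation. The sketch below only builds the HTTP request with the standard library. The base URL, port, and model name are placeholders, and whether your particular deployment exposes `/v1/chat/completions` is an assumption to verify against its documentation.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str,
                       max_tokens: int = 64) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder endpoint and model name; substitute the values of your own server.
req = build_chat_request("http://localhost:8000", "my-model", "Hello")
print(req.get_method(), req.full_url)

# To actually send it against a running server:
# with urllib.request.urlopen(req, timeout=30) as resp:
#     print(json.loads(resp.read())["choices"][0]["message"]["content"])
```

Keeping the client this thin makes it cheap to swap backends while benchmarking, since only the base URL and model name change.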

8) Maturity, community & support

- TensorRT-LLM: backed by NVIDIA, with good docs, commercial support, and active development. Best for enterprises invested in NVIDIA stacks.

- vLLM: active OSS community, growing adoption, official docs, and frequent releases. Balanced maturity for many production systems.

- TGI: maintained by Hugging Face and used in production (HuggingChat, Inference API). The advantage is its close ties to the model hub and enterprise usage patterns. There are active issues and community discussion; multi-backend support is still evolving.

- LMDeploy: rapidly evolving, with a strong contributor base in China and the InternLM community. Some performance claims are impressive, but verify them with your own benchmarks. Releases and docs are active; ecosystem maturity is improving.

9) Real-world tradeoffs & recommended choices

- If you’re NVIDIA-first and need absolute top throughput plus quantization: TensorRT-LLM. Best when you can invest engineering time to convert, compile, and manage TensorRT engines. Use it for latency-sensitive, high-throughput production where GPU utilization matters.

- If you want a Pythonic, fast, memory-efficient server that’s easy to adopt: vLLM. Great for conversational agents, multi-tenant services, or when you want to stay in the HF/PyTorch ecosystem.

- If you need a production API with multi-backend flexibility and HF Hub integration: Hugging Face TGI. Use it when you want a stable serving interface and may need to switch backends (for example, start on vLLM and later move to TensorRT-LLM engines).

- If you’re pushing for maximum TPS with advanced compression and are comfortable with bleeding-edge toolchains: LMDeploy. Consider LMDeploy for throughput-first deployments, but validate accuracy after compression/quantization.

10) Practical checklist for choosing & benchmarking

- Define SLOs: latency (p50/p95/p99), throughput, and cost (GPU utilization).

- Model size & context: very large models with long contexts may favor paged KV caching and compiler support (TensorRT-LLM or LMDeploy).

- Quantization needs: if you need FP8/FP4/INT4 with vendor tooling, TensorRT-LLM gives the strongest path.

- Ecosystem fit: if you’re already on HF, vLLM or TGI reduce friction.

- Run A/B benchmarks: run your real prompts across stacks (warmup, cold starts, multi-tenant). Don’t trust raw single-metric claims; measure p99 latency under realistic concurrency.

- Operationalize: consider autoscaling, observability (GPU metrics, batching stats), and model update workflows. TGI and TensorRT-LLM with Triton have mature endpoint patterns; vLLM and LMDeploy require some infra glue but are scriptable.
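For the A/B benchmarking step, the shape of a harness matters more than the tool. The following is a minimal sketch of a closed-loop load generator that reports p50/p95/p99 and throughput. The `fake_generate` coroutine is a stand-in that just sleeps; in a real run you would replace it with an async call to the stack under test, and the concurrency and prompt count here are arbitrary example values.

```python
import asyncio
import math
import random
import time


async def fake_generate(prompt: str) -> str:
    """Stand-in for a real inference call; sleeps for a few milliseconds."""
    await asyncio.sleep(random.uniform(0.001, 0.005))
    return prompt.upper()


def percentile(sorted_values, pct):
    """Nearest-rank percentile on an already sorted list."""
    rank = max(1, math.ceil(pct * len(sorted_values) / 100))
    return sorted_values[rank - 1]


async def run_benchmark(prompts, concurrency, generate=fake_generate):
    semaphore = asyncio.Semaphore(concurrency)  # caps in-flight requests
    latencies = []

    async def one_request(prompt):
        async with semaphore:
            start = time.perf_counter()          # timed from the moment it is sent
            await generate(prompt)
            latencies.append(time.perf_counter() - start)

    wall_start = time.perf_counter()
    await asyncio.gather(*(one_request(p) for p in prompts))
    wall = time.perf_counter() - wall_start
    latencies.sort()
    return {
        "p50": percentile(latencies, 50),
        "p95": percentile(latencies, 95),
        "p99": percentile(latencies, 99),
        "throughput_rps": len(prompts) / wall,
    }


results = asyncio.run(
    run_benchmark([f"prompt {i}" for i in range(50)], concurrency=8)
)
print(results)
```

Run the same harness, prompts, and concurrency against each stack after a warmup pass, and compare the tail percentiles rather than the averages.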

11) Caveats, gotchas and future directions

- Benchmarks are contextual. Public claims (“N× throughput”, “beats vLLM”) often depend on hardware, model, and precision, so always benchmark with your own workload. Many projects publish selective benchmarks; interpret them carefully.

- Ecosystem churn. TGI’s multi-backend initiative and continuous updates across all four projects mean APIs and features evolve; revalidate before large migrations.

- Speculative decoding, paged KV caching, and kernel fusion are active research and engineering fronts; they can yield big gains but add complexity (correctness under edge cases, debugging). TensorRT-LLM is particularly advanced here.
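Since speculative decoding appears repeatedly above, here is a toy illustration of the draft-and-verify loop. It shows only the greedy, exact-match variant; production engines typically use a probabilistic acceptance rule and verify all draft positions in one batched forward pass. Both "models" below are hypothetical deterministic functions invented for the example.

```python
def target_next(tokens):
    """Hypothetical large model: next token is a deterministic function of context."""
    return (sum(tokens) * 31 + len(tokens)) % 50


def draft_next(tokens):
    """Hypothetical small model: agrees with the target unless len(context) % 4 == 0."""
    guess = target_next(tokens)
    return guess if len(tokens) % 4 else (guess + 1) % 50


def greedy_decode(prompt, num_new):
    """Baseline: one target-model call per generated token."""
    tokens = list(prompt)
    for _ in range(num_new):
        tokens.append(target_next(tokens))
    return tokens


def speculative_decode(prompt, num_new, k=4):
    """Draft proposes k tokens; target keeps the agreed prefix plus one of its own."""
    tokens = list(prompt)
    goal = len(prompt) + num_new
    target_passes = 0
    while len(tokens) < goal:
        draft = []
        for _ in range(k):                         # cheap autoregressive drafting
            draft.append(draft_next(tokens + draft))
        target_passes += 1                         # one (conceptually batched) verify pass
        for i in range(k):
            expected = target_next(tokens + draft[:i])
            if draft[i] != expected:               # first disagreement: correct and stop
                tokens.extend(draft[:i] + [expected])
                break
        else:                                      # all k accepted, plus one bonus token
            tokens.extend(draft + [target_next(tokens + draft)])
    return tokens[:goal], target_passes


prompt = [1, 2, 3]
spec_tokens, passes = speculative_decode(prompt, num_new=12)
print(spec_tokens == greedy_decode(prompt, 12), passes)
```

The output is identical to plain greedy decoding with the target model, but it needs fewer target passes whenever the draft guesses well, which is where the latency gain comes from. The extra moving parts (two models, acceptance logic, KV cache rollback in real engines) are exactly the added complexity the caveat refers to.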

Conclusion — how to pick in one sentence

- Choose TensorRT-LLM if you need vendor-level GPU kernel optimizations and maximal throughput/quantization on NVIDIA hardware.

- Choose vLLM if you want fast adoption, strong throughput with fewer ops changes, and great Hugging Face compatibility.

- Choose Hugging Face TGI if you require a production API, multi-backend flexibility, and easy HF Hub integration.

- Choose LMDeploy when you want aggressive throughput/compression optimizations and are willing to manage a more experimental stack. In every case, benchmark for your own model, hardware, and traffic patterns.
