🧠 How to Create AI-Ready APIs: A Complete Developer’s Guide for 2025

Introduction

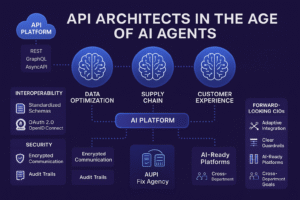

Artificial intelligence is reshaping how APIs are built, consumed, and evolved. Traditional APIs — once designed merely to connect data and services — are now evolving into AI-ready APIs capable of understanding, predicting, adapting, and even collaborating with other intelligent systems.

In 2025, as enterprises deploy autonomous AI agents, predictive models, and real-time decision systems, APIs must be engineered not only for data access but also for intelligence integration. This guide explores how to design, build, and deploy AI-ready APIs that can seamlessly connect with machine learning models, large language models (LLMs), and agentic systems.

1. Understanding What Makes an API “AI-Ready”

Before jumping into implementation, it’s crucial to define what “AI-ready” means in the context of API design.

An AI-ready API is one that:

-

Interfaces intelligently with machine learning models or LLMs.

-

Supports structured and unstructured data interchange (e.g., JSON, embeddings, or vectorized data).

-

Handles asynchronous, high-performance workloads for inference.

-

Provides explainability and audit trails for model predictions.

-

Integrates seamlessly into AI pipelines such as MLOps or agent frameworks.

In essence, an AI-ready API isn’t just a REST or GraphQL endpoint — it’s a data-aware interface designed for learning systems.

2. The Shift from Traditional APIs to Intelligent APIs

Traditional APIs have been CRUD-focused (Create, Read, Update, Delete). AI-ready APIs go beyond that — they’re designed for learning, contextual reasoning, and adaptive behavior.

| Feature | Traditional API | AI-Ready API |

|---|---|---|

| Purpose | Data access | Data + inference + decision |

| Data type | Structured (JSON/XML) | Structured + Unstructured (text, embeddings, images) |

| Response | Static | Dynamic and context-dependent |

| Integration | Client-Server | Multi-agent or model-driven |

| Monitoring | Uptime/Latency | Accuracy, Drift, and Bias |

| Scaling | Stateless | Model-dependent, GPU-accelerated |

This evolution means developers need to think beyond REST routes and focus on AI lifecycle management — from dataset ingestion to real-time model evaluation.

3. Architecture of an AI-Ready API

A well-designed AI-ready API architecture balances performance, scalability, and model integration. Here’s a conceptual breakdown:

🔹 Key Components

-

API Gateway

Handles authentication, rate limiting, and request routing. AI gateways also perform model selection and contextual routing (choosing the right model per request). -

Model Router

Directs inference requests to the correct model endpoint — useful in systems with multiple AI models (e.g., sentiment, summarization, classification). -

Inference Engine

Executes the model prediction. This can run on GPUs, TPUs, or cloud ML services (like AWS Sagemaker, Azure ML, or Vertex AI). -

Model Registry

Stores model metadata — versions, training datasets, performance metrics — to enable model governance. -

Data Pipeline

Handles data ingestion, preprocessing, and post-processing. AI-ready APIs often need pipelines to clean, tokenize, or vectorize data before inference. -

Monitoring and Feedback Loop

Tracks inference performance, data drift, and feedback signals to retrain or fine-tune models dynamically.

4. Choosing the Right API Style for AI

When building an AI-ready API, choosing the right communication protocol is key.

🔸 REST

Best for synchronous, stateless operations.

Example: Real-time text classification API.

🔸 GraphQL

Ideal when clients need flexible queries, especially with multiple model outputs or nested data.

Example: GraphQL API for querying multimodal embeddings.

🔸 gRPC

Preferred for high-performance inference calls (binary, low-latency).

Example: Streaming AI inference from edge devices.

🔸 WebSockets

Enables bidirectional streaming — crucial for conversational AI, live model feedback, or collaborative AI agents.

Example: AI chat or real-time translation API.

🔸 OpenAI Function Calling / JSON Schemas

When building APIs to interact with LLMs (like GPT-5), structured outputs via schemas or “function calling” endpoints are essential.

5. Step-by-Step: Building an AI-Ready API

Let’s go through a structured approach to building your first AI-ready API.

Step 1: Define the Use Case

Clearly outline what problem your API solves. For instance:

-

Sentiment analysis for reviews

-

Object recognition in images

-

Predictive maintenance in IoT

Each use case determines model type, latency requirements, and data input/output format.

Step 2: Select or Train the Model

You can either:

-

Train a custom ML model using TensorFlow, PyTorch, or scikit-learn.

-

Leverage pre-trained models from Hugging Face, OpenAI, or Anthropic.

-

Host your own model via ONNX or TorchServe.

Example (Python + Hugging Face):

This simple FastAPI endpoint is already “AI-ready” because it integrates inference directly into the API logic.

Step 3: Containerize with Docker

Containerization ensures scalability and consistent deployment.

Deploying this Docker container makes your AI API portable across cloud services like AWS, Azure, or GCP.

Step 4: Add Observability

AI APIs must log more than just requests — they need prediction metrics, model versions, and user context.

Tools:

-

Prometheus/Grafana: Monitor API performance.

-

Evidently AI: Monitor model drift.

-

Weights & Biases: Track training and inference metrics.

Step 5: Secure the API

Security is paramount, especially when handling sensitive or proprietary data.

Implement:

-

API Keys or OAuth2 for authentication.

-

Rate limiting to prevent abuse.

-

Input sanitization for prompt injection protection (critical for LLM APIs).

-

Audit logging for model explainability compliance (GDPR, CCPA).

Step 6: Integrate with MLOps

Your AI API should connect to an MLOps platform that manages:

-

Continuous training (CT) and continuous deployment (CD).

-

Model drift detection.

-

Automated retraining pipelines.

Popular MLOps frameworks:

-

Kubeflow

-

MLflow

-

Seldon Core

-

Vertex AI Pipelines

This ensures your API remains intelligent and up-to-date as data evolves.

6. Making APIs LLM-Ready

Large Language Models (LLMs) like GPT-5, Claude, and Gemini Ultra interact best with APIs that are structured, schema-based, and context-aware.

To make your API LLM-ready:

-

Expose function-calling endpoints:

Use JSON schemas for predictable I/O. -

Include contextual metadata:

Send descriptions, usage instructions, and example outputs. -

Provide few-shot examples:

Enable the LLM to understand how to use your API. -

Add dynamic adaptability:

Let your API auto-select models based on user intent or domain.

Example LLM function schema:

This allows LLMs to “call” your API intelligently.

7. Handling Data and Model Versioning

AI-ready APIs thrive on version control — for both models and datasets.

-

Use Git or DVC for dataset versioning.

-

Store models in registries like MLflow or Weights & Biases.

-

Version your API routes:

/v1/inference,/v2/inference.

Maintain transparency:

-

Log model version in every response.

-

Tag models with training dataset metadata.

-

Include inference timestamps for traceability.

8. Optimizing for Performance and Scalability

AI inference can be resource-heavy. To scale efficiently:

-

Batch inference: Process multiple requests simultaneously.

-

Use GPU inference servers: e.g., NVIDIA Triton, TensorRT.

-

Cache predictions: For repeated queries (e.g., Redis or Memcached).

-

Autoscale containers: With Kubernetes Horizontal Pod Autoscaler (HPA).

-

Asynchronous execution: Utilize async frameworks like FastAPI or aiohttp.

Real-time AI systems (e.g., recommendation engines, voice assistants) require low-latency APIs — often below 100ms.

9. Ensuring Ethical and Explainable AI

AI-ready APIs must incorporate transparency and accountability.

-

Provide explainability endpoints:

/explain→ Returns feature importance or rationale. -

Implement bias detection pipelines.

-

Comply with AI Act, GDPR, and data protection policies.

Explainability fosters trust in enterprise-grade APIs and ensures compliance in regulated industries like healthcare and finance.

10. Real-World Example: AI-Ready API in Action

Let’s look at an example scenario — a Customer Support Intelligence API.

Objective: Automatically summarize support tickets and classify urgency.

Architecture:

-

Frontend → AI API Gateway → Summarization Model + Sentiment Classifier → MLOps Monitoring

Endpoints:

-

/summarize→ Summarizes ticket text using LLM. -

/classify→ Predicts urgency (Low, Medium, High). -

/feedback→ Collects human ratings to fine-tune model.

Benefits:

-

Improved agent response time.

-

Continuous learning from human feedback.

-

Integration-ready for CRMs (e.g., Salesforce, Zendesk).

This is how enterprises are operationalizing AI through APIs that think, adapt, and evolve.

11. Future Trends: Autonomous and Self-Healing APIs

The next generation of AI-ready APIs will feature self-awareness and autonomous adaptability.

Emerging trends:

-

Self-tuning APIs: Auto-optimizing endpoints based on latency and feedback.

-

Persistent memory APIs: Retaining context across sessions.

-

Multi-agent orchestration: APIs that talk to other APIs intelligently.

-

Adaptive routing: AI-driven gateways that reroute to optimal inference nodes.

These innovations point toward a future where APIs become living digital organisms — continuously learning and evolving.

Conclusion

Building an AI-ready API in 2025 means going beyond traditional design — it’s about creating intelligent interfaces that can learn, adapt, and scale.

From model integration and data pipelines to ethical governance and LLM interoperability, every layer of your API stack should be optimized for intelligence.

AI-ready APIs are the backbone of the modern intelligent web — powering chatbots, recommendation systems, autonomous agents, and predictive analytics platforms. The developers who master this craft today will define the architecture of tomorrow’s AI-driven economy.

✅ Key Takeaways

-

Design APIs for learning, not just data exchange.

-

Integrate MLOps to manage model lifecycle and drift.

-

Ensure observability, explainability, and ethics in every call.

-

Use LLM-compatible schemas for intelligent interoperability.

-

Plan for scalability with GPU inference and async frameworks.

For quick updates, follow our whatsapp –https://whatsapp.com/channel/0029VbAabEC11ulGy0ZwRi3j

https://bitsofall.com/https-yourblogdomain-com-microsoft-releases-agent-lightning/

How to Design a Persistent Memory and Personalized Agentic AI System with Decay and Self-Evaluation