Google AI Ships Model Context Protocol (MCP) Support

Google has shipped first-class support and practical servers for the Model Context Protocol (MCP), bringing standardized, secure, two-way connections between large language models and real-world data/tools closer to mainstream developer workflows. This move accelerates the “agentic” future — but it also raises new integration, governance, and security questions developers must address. blog.google+1

Introduction — why this matters

Over the past year the conversation around AI has shifted from “bigger models” to “smarter integrations.” Models are no longer just text generators: they are agents that need timely facts, private datasets, business systems, and the ability to call services safely. The Model Context Protocol (MCP) is an open standard designed to make those connections consistent, auditable, and portable across models and vendors. Google’s decision to ship MCP-ready tooling and server implementations pushes that standard from research and demos into practical developer tooling and cloud-native deployments — a key step for the agentic web. Model Context Protocol+1

What Google shipped — the essentials

Google’s recent releases include an official Data Commons MCP Server and first-class support for MCP in parts of its Agent Development Kit and developer tooling. These components let developers expose structured datasets and Google Cloud services through an MCP server so LLMs (including Google’s own Gemini-family models) can request and use context at runtime with standardized messages and schemas. In short: instead of bespoke integrations for every model, developers get a reusable server interface that any MCP-aware model or client can call. blog.google+1

Concretely, Google’s Data Commons MCP Server is positioned as a drop-in for data-driven agent workflows — it maps queries to the Data Commons knowledge graph and returns structured, MCP-compliant context chunks. Google also published examples and SDKs showing how to register tools, implement authentication flows, and surface observability logs for auditability. Those examples are intended to lower the operational friction for enterprises that want controlled agent access to internal data. blog.google+1
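To illustrate what "structured, MCP-compliant context" can look like, the sketch below shapes a tool result the way the MCP specification describes tool results: a `content` list of typed items plus, in newer spec revisions, an optional `structuredContent` object. The in-memory `OBSERVATIONS` table, the place identifiers, and the numbers in it are hypothetical placeholders; this is not the Data Commons API or Google's server code.

```python
import json

# Hypothetical stand-in for a statistics backend (NOT real Data Commons data).
OBSERVATIONS = {
    ("example/placeA", "Count_Person", 2020): 12345,
    ("example/placeB", "Count_Person", 2020): 67890,
}


def tool_result(payload: dict) -> dict:
    """Wrap a JSON payload as an MCP-style tool result.

    structuredContent carries machine-readable JSON; the text item repeats it
    as serialized JSON for clients that only read `content`.
    """
    return {
        "content": [{"type": "text", "text": json.dumps(payload)}],
        "structuredContent": payload,
        "isError": False,
    }


def error_result(message: str) -> dict:
    """Tool-level failures are reported inside the result with isError=True."""
    return {"content": [{"type": "text", "text": message}], "isError": True}


def get_observation(place: str, variable: str, year: int) -> dict:
    """Look up one observation and return it as a tool result."""
    value = OBSERVATIONS.get((place, variable, year))
    if value is None:
        return error_result(f"No observation for {place}/{variable}/{year}")
    return tool_result({"place": place, "variable": variable,
                        "year": year, "value": value})


print(get_observation("example/placeA", "Count_Person", 2020))
```

Reporting "not found" as a result with `isError: true`, rather than as a protocol error, lets the model see the failure and adjust its next request.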

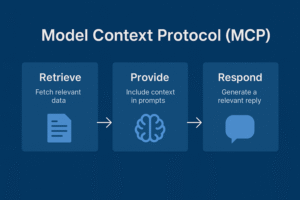

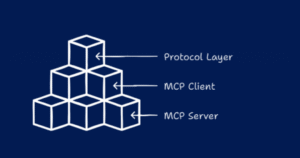

MCP in one line (a practical definition)

Think of MCP like a “USB-C for LLMs”: a standardized, bidirectional protocol that lets models ask for contextual data (documents, database rows, API responses, tool endpoints) and lets servers respond in a structured, discoverable way. MCP adds schemas, capability discovery and negotiation, an authorization framework for remote servers, and logging hooks so agents and servers can interoperate without custom glue code. The specification, introduced by Anthropic in November 2024 and now broadly supported across the ecosystem, is the contract both sides speak. Model Context Protocol+1
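To make that contract concrete: MCP messages are JSON-RPC 2.0 objects. The sketch below builds, as plain Python dicts, the messages a client typically sends first: the `initialize` handshake, the `notifications/initialized` confirmation, a `tools/list` discovery request, and a `tools/call` invocation. `"2025-06-18"` is one published revision of the spec; the client name, the `get_population` tool, and its arguments are hypothetical placeholders. It shows message shape only (no transport, no response handling).

```python
import itertools
import json
from typing import Optional

# Monotonic request ids; JSON-RPC matches each response to its request by id.
_ids = itertools.count(1)


def request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object (the envelope MCP uses)."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def notification(method: str) -> dict:
    """Notifications carry no id and expect no response."""
    return {"jsonrpc": "2.0", "method": method}


# 1. Handshake: the client proposes a protocol version and declares capabilities.
init = request("initialize", {
    "protocolVersion": "2025-06-18",
    "capabilities": {},
    "clientInfo": {"name": "example-client", "version": "0.1.0"},  # hypothetical
})
# 2. After the server's initialize result arrives, the client confirms readiness.
ready = notification("notifications/initialized")
# 3. Discovery: ask the server which tools it exposes.
list_tools = request("tools/list")
# 4. Invocation: call a discovered tool by name with JSON arguments.
call = request("tools/call", {
    "name": "get_population",  # hypothetical tool name
    "arguments": {"place": "geoId/06", "year": 2020},
})

for msg in (init, ready, list_tools, call):
    print(json.dumps(msg))
```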

Why Google’s involvement accelerates adoption

There are three practical reasons Google’s move matters:

- Cloud reach and enterprise credibility. Google Cloud’s footprint and customer base mean MCP servers with cloud-native patterns (IAM, logging, monitoring) will be accessible to many large organizations that previously avoided experimental integrations. That makes MCP viable for regulated and enterprise settings. blog.google+1

- Tooling and reference implementations. When a major cloud provider ships SDKs, example servers, and integrations (e.g., the Agent Development Kit), the “time to first successful PoC” drops dramatically. Reference implementations reduce mistakes that appear when teams reimplement protocol details. Google GitHub

- Model support and ecosystem effects. MCP becomes more useful if both clients (LLM hosts) and servers (data/tool providers) agree on the semantics. Google’s SDKs are geared to work with Gemini and other LLMs in agentic flows, which helps establish MCP as a cross-model standard rather than an Anthropic-only ecosystem. Google GitHub

How MCP changes architecture (a developer’s view)

Before MCP, teams typically built custom adapters that transform a model’s text-based intent into API calls or database queries. Those adapters are brittle: they require maintenance for each new model, differ across teams, and often lack solid audit trails.

With MCP:

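- Discovery: Agents can ask an MCP server which tools it offers, what input schemas they expect, and which capabilities it supports, instead of assuming endpoints exist.

- Structured context: Responses are typed (text, images, embedded resources, and optionally schema-described structured JSON), so the model receives predictable structures rather than opaque blobs.

- Secure flows: For remote servers, the specification defines an OAuth-based authorization flow, which gives a standard place to enforce scopes and simplifies least-privilege deployments.

- Observability: Because every exchange is a structured JSON-RPC message, it is easier to log and audit what data a model requested, through which tool, and with which arguments.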

Put simply, MCP separates “what the model needs” from “how the backend provides it,” enabling a modular, reusable architecture for agentic apps. Model Context Protocol+1
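That separation can be sketched as a tiny in-process dispatcher: the server advertises tools (name, description, JSON Schema for inputs) through `tools/list` and routes `tools/call` to a backend function, so the model-facing contract stays fixed even if the backend changes. This is a stdlib-only illustration under simplifying assumptions: no transport, no auth, and no argument validation; the `search_docs` tool and its in-memory corpus are hypothetical. Real servers would normally be built on an official MCP SDK.

```python
import json
from typing import Any

# Hypothetical backend: a tiny in-memory document store.
DOCS = {"sla": "Support tickets are answered within one business day.",
        "pto": "Employees accrue PTO monthly."}


def search_docs(query: str) -> list:
    """Backend detail the model never sees: naive case-insensitive substring search."""
    return [key for key, text in DOCS.items() if query.lower() in text.lower()]


# Registry: the model-facing contract (description + input schema) plus a handler.
TOOLS: dict[str, dict[str, Any]] = {
    "search_docs": {
        "description": "Find internal documents containing a phrase.",
        "inputSchema": {"type": "object",
                        "properties": {"query": {"type": "string"}},
                        "required": ["query"]},
        # Argument validation is omitted here for brevity.
        "handler": lambda args: search_docs(args["query"]),
    }
}


def handle(msg: dict) -> dict:
    """Route one JSON-RPC request to tools/list or tools/call."""
    reply = {"jsonrpc": "2.0", "id": msg.get("id")}
    method = msg.get("method")
    if method == "tools/list":
        # Advertise only the contract; never leak the handler.
        reply["result"] = {"tools": [
            {"name": name, "description": t["description"],
             "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()]}
    elif method == "tools/call":
        params = msg.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            reply["error"] = {"code": -32602, "message": "Unknown tool"}
        else:
            hits = tool["handler"](params.get("arguments", {}))
            reply["result"] = {"content": [{"type": "text", "text": json.dumps(hits)}],
                               "isError": False}
    else:
        reply["error"] = {"code": -32601, "message": "Method not found"}
    return reply
```

Swapping `search_docs` for a database or a search service changes only the handler; the advertised schema, and therefore every client, stays the same.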

Example use cases (real and near-term)

- Enterprise knowledge assistants: An internal MCP server exposes HR policies, product docs, and changelogs. A Gemini-powered assistant queries the MCP server for the latest SLA paragraph and cites it in an answer, with an auditable trail showing what was used. Google’s Data Commons MCP Server demonstrates similar patterns for open datasets. blog.google

- Developer productivity tools: An IDE extension can expose current repository state, test results, and CI logs through an MCP interface. An LLM agent uses that context to suggest fixes or write PR summaries, with consistent semantics across repos and platforms. Chrome DevTools integrations and community servers show this is already in motion. Chrome for Developers+1

- Public data and policy tools: NGOs or public agencies can expose curated datasets (e.g., Data Commons) as MCP servers to enable model-driven policy analysis or automated briefings. Google’s blog highlights such data-driven agents already being used for advocacy and policy workflows. blog.google

- Secure tooling for security operations: MCP servers can wrap SIEMs and threat intelligence in well-scoped endpoints so models help triage incidents without direct, unlimited access to raw logs. Google’s own MCP security repos illustrate these enterprise scenarios. GitHub
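One way to read "well-scoped endpoints" for security operations: instead of exposing a raw log query, the server exposes a narrow, read-only tool that filters by severity, returns only an allowlisted subset of fields, and caps the number of rows. The sketch below uses a hypothetical in-memory list in place of a SIEM; the field names, severity levels, and `MAX_ROWS` value are illustrative assumptions, not taken from Google's security MCP servers.

```python
from collections.abc import Iterable

# Hypothetical raw events as a SIEM might store them (includes sensitive fields).
RAW_EVENTS = [
    {"ts": "2025-01-01T10:00:00Z", "host": "web-1", "rule": "ssh_bruteforce",
     "severity": "high", "src_ip": "203.0.113.7", "raw": "payload bytes (sensitive)"},
    {"ts": "2025-01-01T10:05:00Z", "host": "web-2", "rule": "port_scan",
     "severity": "low", "src_ip": "198.51.100.4", "raw": "payload bytes (sensitive)"},
]

# Only these fields ever leave the server, whatever the caller asks for.
ALLOWED_FIELDS = ("ts", "host", "rule", "severity")
MAX_ROWS = 50  # illustrative cap so one call cannot dump the whole index
SEVERITY_ORDER = {"low": 0, "medium": 1, "high": 2}


def triage_alerts(events: Iterable[dict], min_severity: str) -> list[dict]:
    """Read-only triage view: filter by severity, project allowlisted fields, cap rows."""
    if min_severity not in SEVERITY_ORDER:
        raise ValueError(f"unknown severity: {min_severity!r}")
    threshold = SEVERITY_ORDER[min_severity]
    out = []
    for event in events:
        # Events with a missing or unknown severity rank below "low" and are skipped.
        if SEVERITY_ORDER.get(event.get("severity"), -1) >= threshold:
            out.append({k: event[k] for k in ALLOWED_FIELDS if k in event})
            if len(out) == MAX_ROWS:
                break
    return out
```

The model can still ask "what fired on which host?", but it cannot pull packet payloads or issue arbitrary queries against the underlying store.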

The security and governance angle — benefits and risks

MCP is attractive precisely because it centralizes control — but that centralization is a double-edged sword.

Benefits:

- Standardized permissioning and scopes make least-privilege enforcement more straightforward.

- Logging and schema validation help build audit trails that show why a model used a specific data source.

- Vendor and community reference implementations encourage best practices (auth flows, token rotation, validation). Model Context Protocol+1
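Schema validation is the easiest of these benefits to make concrete. MCP tools declare an `inputSchema` in JSON Schema, so a server can reject malformed calls before they reach a backend. The sketch below hand-checks only `required`, each property's primitive `type`, and undeclared keys; it is a deliberately small stand-in for a full validator such as the Python `jsonschema` package, and the `SCHEMA` shown is a hypothetical example.

```python
# JSON Schema primitive type names handled by this sketch, mapped to Python types.
_TYPES = {"string": str, "integer": int, "number": (int, float),
          "boolean": bool, "object": dict, "array": list}


def validate_args(schema: dict, args: dict) -> list[str]:
    """Return a list of problems; an empty list means the call may proceed."""
    problems = []
    props = schema.get("properties", {})
    for name in schema.get("required", []):
        if name not in args:
            problems.append(f"missing required argument: {name}")
    for name, value in args.items():
        if name not in props:
            # Stricter than JSON Schema's default; like additionalProperties: false.
            problems.append(f"unexpected argument: {name}")
            continue
        expected = props[name].get("type")
        if not isinstance(expected, str) or expected not in _TYPES:
            continue  # undeclared, union, or unsupported type: not checked here
        wrong_type = not isinstance(value, _TYPES[expected])
        # bool subclasses int in Python, but JSON true/false is not a number.
        if isinstance(value, bool) and expected != "boolean":
            wrong_type = True
        if wrong_type:
            problems.append(f"{name}: expected {expected}, got {type(value).__name__}")
    return problems


SCHEMA = {"type": "object",
          "properties": {"place": {"type": "string"}, "year": {"type": "integer"}},
          "required": ["place", "year"]}
```

Returning every problem at once, rather than failing on the first, gives the model enough feedback to repair its call in one retry.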

Risks and caveats:

- Supply-chain risks: MCP servers, like any middleware, add a new attack surface. Recent research has shown that malicious MCP servers can exfiltrate data if unchecked, which highlights the need for signed releases, dependency vetting, and runtime integrity checks. Developers must treat MCP server code with the same rigor as any other critical infrastructure. IT Pro

- Over-permissioning: If an MCP server grants broad access to sensitive datasets, an agent could misuse those capabilities, whether intentionally or through prompt exploitation. Fine-grained scoping, just-in-time approvals, and human-in-the-loop gating remain essential.

- Privacy and compliance: Exposing regulated data via MCP requires mapping protocol controls to legal controls (e.g., data residency, anonymization, retention policies). The protocol provides hooks, but organizations must implement compliant policies.
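The over-permissioning mitigations (fine-grained scoping plus human-in-the-loop gating) can be sketched as a deny-by-default check that runs before any tool handler. The scope strings, the `TOOL_POLICY` table, and the `approve` callback are hypothetical; in a real deployment the granted scopes would come from the access token issued through MCP's OAuth-based authorization flow (or from Cloud IAM), and approval would be a real UI or ticketing step rather than a function argument.

```python
from collections.abc import Callable

# Hypothetical policy: the scope each tool needs and whether a human must approve.
TOOL_POLICY = {
    "read_calendar": {"scope": "calendar.read", "needs_approval": False},
    "send_invoice": {"scope": "billing.write", "needs_approval": True},
}


class Denied(Exception):
    """Raised when a call is refused before it reaches the backend."""


def authorize(tool: str, granted_scopes: set[str],
              approve: Callable[[str], bool]) -> None:
    """Deny by default: unknown tools, missing scopes, and unapproved actions all fail."""
    policy = TOOL_POLICY.get(tool)
    if policy is None:
        raise Denied(f"tool not registered: {tool}")
    if policy["scope"] not in granted_scopes:
        raise Denied(f"missing scope {policy['scope']} for {tool}")
    # approve() is only consulted for tools flagged as having business impact.
    if policy["needs_approval"] and not approve(tool):
        raise Denied(f"human approval declined for {tool}")
```

Keeping the policy in a table separate from the handlers makes it reviewable on its own, which is where least-privilege mistakes are usually caught.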

Google’s MCP tooling explicitly attempts to address these concerns by integrating with Cloud IAM, observability, and recommended deployment patterns — but operational discipline remains the stronger factor than tooling alone. blog.google+1
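Operational discipline around observability can start small: write one structured record per tool call so an audit trail can answer "which data did the agent request, and was it allowed?". MCP does not mandate an audit format, so the record fields below are assumptions; hashing the arguments instead of logging them raw is one design choice for keeping sensitive values out of logs. In a Google Cloud deployment, lines like these could be shipped to Cloud Logging rather than an in-memory buffer.

```python
import hashlib
import io
import json
from datetime import datetime, timezone
from typing import TextIO


def audit_record(caller: str, tool: str, arguments: dict, allowed: bool) -> dict:
    """Build one audit entry; arguments are hashed so raw values stay out of logs."""
    # Canonical JSON (sorted keys, no spaces) so equal arguments hash identically.
    canonical = json.dumps(arguments, sort_keys=True, separators=(",", ":"))
    return {
        "time": datetime.now(timezone.utc).isoformat(),
        "caller": caller,
        "tool": tool,
        "args_sha256": hashlib.sha256(canonical.encode("utf-8")).hexdigest(),
        "allowed": allowed,
    }


def write_audit(stream: TextIO, record: dict) -> None:
    """Append the record as a single JSON line (JSONL), easy to ship and query."""
    stream.write(json.dumps(record, sort_keys=True) + "\n")


log = io.StringIO()  # stand-in for a file or log sink
write_audit(log, audit_record("agent-42", "search_docs", {"query": "SLA"}, True))
print(log.getvalue())
```

Note that hashing is not anonymization: low-entropy arguments (a short list of known queries, say) can be recovered by hashing candidates, so truly sensitive inputs may need keyed hashing or separate, access-controlled storage.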

Interoperability and standards politics

A protocol only wins if many parties adopt it. MCP began as an Anthropic initiative and quickly attracted contributions, implementations, and spec work from an ecosystem of vendors, open-source projects, and cloud providers. Google’s support helps reduce fragmentation risk: developers can expect MCP servers and clients that speak the same language regardless of whether the LLM is hosted by Anthropic, Google, or others. Anthropic+1

That said, history shows standards compete: extensions, competing schemas, or proprietary hooks could fragment the landscape. The healthy pattern to watch for is an open spec with vendor-maintained reference servers and versioned compatibility guarantees — something the modelcontextprotocol community and GitHub repos are already attempting to coordinate. GitHub+1
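Versioned compatibility already has a concrete shape in the spec: during `initialize` the client proposes a protocol version (a date string such as `2025-06-18`); the server answers with that same version if it supports it, and otherwise with another version it supports (preferably its latest); the client disconnects if it cannot use the answer. The sketch below models that rule with a hypothetical `SUPPORTED` list; it covers the negotiation logic only.

```python
# Hypothetical: the spec revisions this server implements, newest first.
SUPPORTED = ["2025-06-18", "2025-03-26", "2024-11-05"]


def negotiate(requested: str) -> str:
    """Server side: echo the client's version if supported, else offer our latest."""
    return requested if requested in SUPPORTED else SUPPORTED[0]


def client_accepts(offered: str, client_supported: list[str]) -> bool:
    """Client side: proceed only if it also speaks the offered version."""
    return offered in client_supported


# An older client asking for 2024-11-05 gets exactly that version back.
print(negotiate("2024-11-05"))
```

Testing a server against a matrix of client versions then reduces to checking this function's outputs, which is a cheap way to catch breaking changes before upgrading.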

What this means for product managers and engineering leaders

If you’re building AI features this year, consider MCP in your tech strategy checklist:

- Proof of concept: Build a minimal MCP server that exposes a narrow dataset or a single tool (search, calendar, CI logs). Use Google’s Data Commons examples and ADK docs to accelerate onboarding. blog.google+1

- Threat modeling: Include MCP servers in your attack-surface analysis. Validate dependencies, require signed releases, and build observability to detect unusual requests. The malicious MCP server reported earlier is a reminder that compromised servers can silently exfiltrate data. IT Pro

- Permission model: Design your MCP scopes carefully. Prefer narrow endpoints for high-sensitivity operations and require human approval for actions with business impact.

- Privacy & compliance mapping: Translate privacy regulations into MCP controls (e.g., for EU-based datasets, enforce data residency at the server layer and mask PII in responses).

- Versioning plan: Follow the MCP spec versions and test clients and servers against compatibility matrices. Community repos and the specification site can help you catch breaking changes. Model Context Protocol+1
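The privacy item ("mask PII in responses") can be applied as a last step before a tool result leaves the server. The two regular expressions below catch only email addresses and one US-style phone format and are deliberately simplistic assumptions; production systems usually rely on a dedicated DLP service (Google Cloud's Sensitive Data Protection is one option) rather than hand-written patterns.

```python
import re

# Illustrative patterns only; they will miss many real-world PII formats.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def mask_pii(text: str) -> str:
    """Replace each match with a typed placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def mask_tool_result(result: dict) -> dict:
    """Mask text items in an MCP-style tool result.

    Non-text items pass through unchanged. structuredContent is dropped rather
    than risk leaking unmasked nested fields (masking nested JSON is not shown).
    """
    masked = [
        {**item, "text": mask_pii(item["text"])} if item.get("type") == "text" else item
        for item in result.get("content", [])
    ]
    out = {k: v for k, v in result.items() if k != "structuredContent"}
    out["content"] = masked
    return out
```

If a tool declares an `outputSchema`, clients will expect `structuredContent`, so such a tool would need its structured payload masked field by field instead of dropped.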

Ecosystem winners and losers — a quick take

Winners likely include:

- Cloud providers that make secure, managed MCP servers easy to deploy (Google and others).

- Tooling vendors (IDEs, observability providers, security platforms) that produce MCP connectors.

- Organizations that standardize early; they’ll enjoy faster model migration and lower integration cost.

Potential losers or laggards:

- Teams with heavy bespoke integrations that do not invest in standardization; maintaining many adapters will become a recurring drag.

- Vendors that lock MCP extensions into proprietary ecosystems without clear backward compatibility; this risks fragmentation and limits adoption.

Looking ahead — what to expect next

- More managed MCP offerings. Expect cloud providers to ship managed MCP endpoints, secrets integrations, and marketplace connectors for common SaaS tools. Google’s Data Commons server is an early data-focused example of this trend. blog.google

- Specialized MCP servers. Repositories already show niche servers for security operations, databases, and developer tooling. We’ll see verticalized MCP servers that encapsulate domain logic and compliance constraints. GitHub+1

- Standardized governance patterns. As incidents and audits accumulate, the community will converge on patterns for signing server code, runtime attestation, and standard audit formats for MCP traffic. The ecosystem challenge is to make these patterns easy to adopt. IT Pro

- Protocol extensions (carefully). Expect optional extensions for payments, agent-to-agent workflows, and richer capability negotiation. Here again, community governance will determine whether extensions remain optional, standardized, or platform-locked. Google Cloud
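Until signing and attestation patterns settle, a minimal stdlib-only integrity step in the spirit of the governance item above is to pin the SHA-256 digest of the MCP server artifact you reviewed and refuse to launch anything else. This is weaker than verifying a publisher signature (for example with Sigstore): it proves the bytes match what you vetted, not who built them. The function names are illustrative, not part of any MCP tooling.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 1 << 16) -> str:
    """Stream the file in chunks so large artifacts need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, pinned_sha256: str) -> bool:
    """True only if the artifact's bytes match the digest recorded at review time."""
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(sha256_file(path), pinned_sha256.lower())
```

Record the digest when a release is reviewed (for example in deployment config) and call `verify_artifact` before starting the server process; a mismatch should block startup rather than just log a warning.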

Conclusion — pragmatic optimism

Google shipping MCP tooling is a significant moment in the move from isolated LLM demos to production-grade, agentic applications. The protocol’s promise — standardized discovery, structured context, and the potential for auditable, secure integrations — addresses real engineering pain points. But the practical value depends on three things developers must focus on now: disciplined security and supply-chain controls, careful permission design, and active participation in the evolving spec and community.

MCP doesn’t magically make models safe or correct; it provides a better way to connect models to the world. With responsible implementation, and attention to the kinds of real-world incidents already visible in the ecosystem, MCP can be the plumbing that enables powerful, reliable agentic products rather than yet another brittle integration pattern. Google’s shipping of MCP components nudges the industry toward that future; whether it becomes the dominant standard will depend on community governance, interoperability, and how well vendors and customers harden the operational side. blog.google+2, Model Context Protocol+2

Sources (selected)

- Google Developers: Data Commons MCP Server announcement and examples (blog.google)
- Google Agent Development Kit (ADK): MCP documentation (Google GitHub)
- Model Context Protocol: specification and central site (Model Context Protocol)
- Anthropic: original Model Context Protocol announcement (Anthropic)
- Reporting on a malicious MCP server and supply-chain security issues (IT Pro)
