TinyML for Edge Devices: Unlocking Intelligence at the Edge

Introduction

The world of artificial intelligence (AI) is rapidly expanding beyond data centers and cloud platforms into smaller, resource-constrained environments. One of the most transformative innovations in this journey is Tiny Machine Learning (TinyML). By enabling machine learning models to run on low-power, memory-limited edge devices such as microcontrollers, sensors, and wearables, TinyML is redefining how we interact with technology.

In today’s data-driven world, sending every bit of information to the cloud is not always feasible. Latency, connectivity, privacy, and power consumption all pose challenges. TinyML bridges this gap by bringing AI to the edge, where data is generated. From smart home assistants and industrial IoT sensors to medical wearables and agricultural drones, TinyML is powering intelligent decisions locally, in real time.

This article explores what TinyML is, why it matters, its architecture, use cases, benefits, challenges, and the future of edge intelligence.

What is TinyML?

TinyML refers to the deployment of machine learning algorithms on ultra-low-power microcontrollers and other resource-constrained devices. Unlike traditional ML systems that rely on powerful CPUs, GPUs, or cloud servers, TinyML models are optimized to consume minimal energy (often in the milliwatt range) while still providing useful AI capabilities.

Key characteristics of TinyML:

- Runs on devices with as little as tens of kilobytes of memory.
- Consumes extremely low power, enabling devices to run for months or years on batteries.
- Performs on-device inference without relying on cloud connectivity.
- Designed for real-time processing with minimal latency.
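To make the “tens of kilobytes” constraint concrete, here is a rough Python estimate of how much storage a model’s weights need at different precisions. The parameter count and the 64 KiB budget are hypothetical. The estimate covers weights only: activations (the working memory used during inference) and the runtime code need room too.

```python
# Rough storage estimate for a model's weights at different precisions.
# Hypothetical numbers; covers weights only, not activations or runtime code.

BYTES_PER_WEIGHT = {"float32": 4, "int8": 1}


def weight_bytes(num_params: int, dtype: str) -> int:
    """Bytes needed to store num_params weights of the given dtype."""
    return num_params * BYTES_PER_WEIGHT[dtype]


def fits(num_params: int, dtype: str, budget_kib: int) -> bool:
    """True if the weights alone fit within budget_kib kibibytes."""
    return weight_bytes(num_params, dtype) <= budget_kib * 1024


if __name__ == "__main__":
    params = 50_000   # hypothetical parameter count for a small model
    budget_kib = 64   # hypothetical memory budget reserved for weights
    for dtype in ("float32", "int8"):
        kib = weight_bytes(params, dtype) / 1024
        print(f"{dtype}: {kib:.1f} KiB, fits: {fits(params, dtype, budget_kib)}")
```

With these made-up numbers, the float32 weights (about 195 KiB) overflow the budget, while the int8 version (about 49 KiB) fits.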

TinyML is not just about shrinking ML models; it’s about enabling intelligence in places previously thought impossible.

Why TinyML for Edge Devices?

Edge devices—sensors, wearables, cameras, and IoT endpoints—are the frontline of data collection. Running AI directly on them brings several advantages:

1. Low Latency

Decisions can be made within milliseconds, without waiting for a round trip to the cloud. This is vital for applications like autonomous drones or medical monitoring.

2. Reduced Bandwidth Usage

Instead of transmitting raw sensor data, only processed insights are sent, reducing data transfer costs and network congestion.
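A quick calculation shows why sending insights instead of raw data matters. The sketch below assumes a hypothetical 3-axis accelerometer sampled at 100 Hz with 2-byte samples, and a hypothetical 8-byte result message sent once per minute.

```python
# Back-of-the-envelope comparison: streaming raw sensor samples vs. sending
# only on-device inference results. All parameters are hypothetical.

SECONDS_PER_DAY = 24 * 60 * 60


def raw_bytes_per_day(sample_rate_hz: int, channels: int, bytes_per_sample: int) -> int:
    """Bytes per day if every raw sample is transmitted."""
    return sample_rate_hz * channels * bytes_per_sample * SECONDS_PER_DAY


def event_bytes_per_day(events_per_day: int, bytes_per_event: int) -> int:
    """Bytes per day if only classification results are transmitted."""
    return events_per_day * bytes_per_event


if __name__ == "__main__":
    raw = raw_bytes_per_day(sample_rate_hz=100, channels=3, bytes_per_sample=2)
    events = event_bytes_per_day(events_per_day=24 * 60, bytes_per_event=8)
    print(f"raw: {raw / 1e6:.1f} MB/day, events: {events / 1e3:.1f} kB/day, "
          f"ratio: {raw // events}x")
```

Under these assumptions the device would stream about 51.8 MB of raw samples per day versus about 11.5 kB of results, a 4,500-fold reduction.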

3. Energy Efficiency

TinyML allows devices to run AI workloads with minimal power, extending battery life significantly.
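Much of the battery-life gain comes from duty cycling: the microcontroller wakes briefly to run inference and sleeps the rest of the time. The following idealized estimate uses hypothetical currents and capacity, and it ignores radio use, regulator losses, and battery self-discharge.

```python
# Idealized battery-life estimate for a duty-cycled TinyML sensor node.
# All currents and capacities are hypothetical.


def average_current_ma(active_ma: float, sleep_ma: float, duty_cycle: float) -> float:
    """Time-weighted average current when active for `duty_cycle` (0..1) of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return active_ma * duty_cycle + sleep_ma * (1.0 - duty_cycle)


def battery_life_hours(capacity_mah: float, avg_current_ma: float) -> float:
    """Idealized runtime: capacity divided by average draw."""
    return capacity_mah / avg_current_ma


if __name__ == "__main__":
    capacity_mah = 225.0  # hypothetical coin-cell capacity
    active_ma = 10.0      # hypothetical current while running inference
    sleep_ma = 0.005      # hypothetical deep-sleep current (5 microamps)
    for duty in (1.0, 0.01):
        avg = average_current_ma(active_ma, sleep_ma, duty)
        days = battery_life_hours(capacity_mah, avg) / 24
        print(f"duty cycle {duty:.0%}: avg {avg:.3f} mA, ~{days:.1f} days")
```

With these made-up figures, running continuously drains the cell in under a day, while waking 1% of the time stretches it to roughly three months.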

4. Data Privacy and Security

Sensitive data (like voice commands or health signals) can be processed without leaving the device, reducing privacy risks.

5. Scalability

Deploying AI directly on edge devices reduces dependence on centralized infrastructure, allowing large IoT networks to scale efficiently.

Core Components of TinyML

To understand how TinyML works, let’s break down its ecosystem:

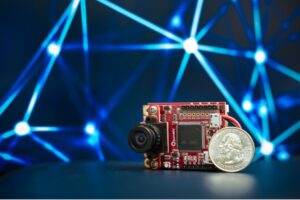

1. Hardware

TinyML runs on microcontrollers (MCUs) and specialized chips designed for efficiency. Popular hardware platforms include:

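- ARM Cortex-M series microcontrollers
- Arduino boards (e.g., Arduino Nano 33 BLE Sense)
- Espressif ESP32
- NVIDIA Jetson Nano (a larger edge-AI platform, beyond typical microcontroller budgets)
- Google Coral Edge TPU (an ML accelerator, also beyond the microcontroller class)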

2. Frameworks & Tools

Developers rely on lightweight frameworks to train and deploy models:

- TensorFlow Lite for Microcontrollers (TFLM)
- Edge Impulse (end-to-end ML pipeline for embedded devices)
- uTensor
- microTVM (part of Apache TVM)

3. Models

ML models must be compressed and quantized to run efficiently on tiny devices. Common techniques include:

- Quantization (reducing numerical precision, typically from 32-bit floating point to 8-bit integers or lower)
- Pruning (removing weights or connections that contribute little to the output)
- Knowledge Distillation (training a smaller “student” model to mimic a larger “teacher” model)
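A minimal sketch of affine (asymmetric) int8 quantization, the general scheme that 8-bit post-training quantization builds on, looks like this in plain Python. It is illustrative only and is not the TensorFlow Lite converter’s actual implementation; the sample weights are hypothetical.

```python
# Minimal sketch of affine (asymmetric) int8 quantization in plain Python.
# Illustrative only: real converters also use per-channel scales,
# calibration data, and quantized operator kernels.

QMIN, QMAX = -128, 127  # signed 8-bit range


def quant_params(values: list[float]) -> tuple[float, int]:
    """Choose scale and zero point mapping [min, max] (widened to include 0) onto int8."""
    lo = min(min(values), 0.0)   # widen the range so 0.0 is exactly representable
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (QMAX - QMIN) or 1.0  # fall back to 1.0 for all-zero input
    zero_point = max(QMIN, min(QMAX, round(QMIN - lo / scale)))
    return scale, zero_point


def quantize(values: list[float], scale: float, zero_point: int) -> list[int]:
    """q = clamp(round(x / scale) + zero_point, QMIN, QMAX)"""
    return [max(QMIN, min(QMAX, round(x / scale) + zero_point)) for x in values]


def dequantize(qvalues: list[int], scale: float, zero_point: int) -> list[float]:
    """x is approximately (q - zero_point) * scale"""
    return [(q - zero_point) * scale for q in qvalues]


if __name__ == "__main__":
    weights = [-0.51, -0.02, 0.0, 0.33, 0.87]  # hypothetical float32 weights
    scale, zp = quant_params(weights)
    q = quantize(weights, scale, zp)
    restored = dequantize(q, scale, zp)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print(f"scale={scale:.6f}, zero_point={zp}")
    print("int8 values:", q)
    print(f"max abs error: {max_err:.6f}")
```

Each weight now takes one byte instead of four, at the cost of a small rounding error. Widening the range to include zero guarantees that 0.0 is represented exactly, which matters for zero padding and ReLU outputs.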

4. Deployment

Models are converted into efficient binaries and flashed directly onto microcontrollers, enabling them to run locally.
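With TensorFlow Lite for Microcontrollers, the converted `.tflite` model is usually compiled into the firmware as a C/C++ byte array, often generated with `xxd -i`. The Python sketch below produces similar source; the symbol name `g_model` and the 16-byte alignment are assumptions, so check what your runtime and toolchain expect.

```python
# Emit C++ source embedding a model's bytes as a const array, similar in
# spirit to `xxd -i`. Symbol name and alignment are assumptions.


def to_c_array(data: bytes, name: str = "g_model", per_line: int = 12) -> str:
    """Return C++ source defining `name` (byte array) and `name_len` (its length).

    In C++, pair this with a header declaring `extern const unsigned char name[];`
    (included before the definition) so the array has external linkage.
    """
    if not data:
        raise ValueError("model data is empty")
    rows = []
    for start in range(0, len(data), per_line):
        chunk = data[start:start + per_line]
        rows.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    return (
        f"alignas(16) const unsigned char {name}[] = {{\n"
        + "\n".join(rows)
        + "\n};\n"
        + f"const unsigned int {name}_len = {len(data)};\n"
    )


if __name__ == "__main__":
    # In practice: data = open("model.tflite", "rb").read()  (hypothetical path)
    print(to_c_array(bytes(range(20))))
```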

Applications of TinyML for Edge Devices

TinyML is already powering real-world innovations across industries:

1. Healthcare & Wearables

- Continuous monitoring of heart rate, ECG, or oxygen levels on smartwatches.
- Detecting early signs of diseases from biosignals.
- Smart hearing aids with noise cancellation.

2. Smart Homes

- Voice-controlled assistants running locally without cloud dependency.
- Energy-efficient HVAC systems with predictive intelligence.
- Gesture recognition for touchless control.

3. Agriculture

- Edge sensors monitoring soil moisture and detecting crop diseases and pest infestations.
- Drones equipped with TinyML analyzing plant health in real time.

4. Industrial IoT (IIoT)

- Predictive maintenance for machines by detecting anomalies in vibration or sound.
- Worker safety systems detecting hazardous conditions.
- Edge cameras for quality inspection in manufacturing.
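Predictive maintenance often comes down to flagging vibration readings that deviate from a machine’s normal behavior. As a simplified stand-in for a trained anomaly-detection model, the sketch below applies a streaming z-score test using Welford’s online mean/variance algorithm, which keeps only a few numbers of state. The threshold, warm-up length, and readings are hypothetical.

```python
# Streaming z-score anomaly detector for a vibration feature (e.g., per-window RMS).
# A simple statistical stand-in for a trained model; constant memory per sensor.
import math


class StreamingAnomalyDetector:
    def __init__(self, z_threshold: float = 4.0, warmup: int = 30):
        self.z_threshold = z_threshold  # hypothetical sensitivity setting
        self.warmup = max(warmup, 2)    # need >= 2 samples for a sample variance
        self.n = 0                      # number of normal samples seen
        self.mean = 0.0                 # running mean (Welford)
        self.m2 = 0.0                   # running sum of squared deviations (Welford)

    def update(self, x: float) -> bool:
        """Return True if x is anomalous; otherwise fold it into the baseline."""
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            if std > 0.0 and abs(x - self.mean) / std > self.z_threshold:
                return True             # anomalies are not added to the baseline
        # Welford's online update of mean and variance
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return False


if __name__ == "__main__":
    detector = StreamingAnomalyDetector()
    # Hypothetical readings: steady baseline around 1.0, then one spike
    readings = [1.0 + 0.01 * ((i * 7) % 5 - 2) for i in range(100)] + [1.5]
    flags = [detector.update(r) for r in readings]
    print("anomalies at indices:", [i for i, f in enumerate(flags) if f])
```

Anomalous readings are deliberately kept out of the running statistics, so a sustained fault does not quietly become the new normal; a real deployment would still need a way to re-baseline after maintenance.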

5. Environmental Monitoring

- Air quality and pollution detection using battery-powered sensors.
- Wildlife monitoring with energy-efficient edge devices in remote areas.

6. Consumer Electronics

- Smart earbuds with always-on wake-word detection.
- Low-power cameras for motion recognition.
- Toys and robots that interact intelligently.

Benefits of TinyML for Edge Devices

TinyML is not just an incremental improvement—it’s a paradigm shift. Some key benefits include:

- Extended device lifespan: Devices can function for months or years without battery replacement.
- Cost efficiency: Lower energy and bandwidth requirements reduce operational expenses.
- Resilience: Devices can continue operating even in areas with poor connectivity.
- Personalization: AI models can adapt to individual user behavior locally.
- Environmental impact: Less cloud processing can lower the overall carbon footprint.

Challenges of TinyML

While promising, TinyML is still evolving and faces challenges:

- Model Optimization: Shrinking ML models while maintaining accuracy is difficult; over-compression can degrade performance.
- Hardware Constraints: Limited memory, processing power, and storage restrict the complexity of models.
- Development Complexity: Building ML for microcontrollers requires specialized embedded skills beyond those used in traditional ML development.
- Security Concerns: Edge devices can be physically accessed, increasing the risk of tampering.
- Standardization: The ecosystem is fragmented, with multiple hardware and software platforms lacking universal standards.
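The over-compression trade-off noted above can be seen by quantizing the same hypothetical weights at 8, 4, and 2 bits with simple symmetric uniform quantization. Reconstruction error is only a proxy; what ultimately matters is the drop in task accuracy on validation data.

```python
# Quantize the same hypothetical weights at decreasing bit widths and
# measure how far the reconstructed values drift from the originals.
import math


def symmetric_quantize(values: list[float], bits: int) -> list[float]:
    """Uniform symmetric quantization to signed `bits`-bit levels, returned as floats."""
    if bits < 2:
        raise ValueError("bits must be at least 2")
    qmax = 2 ** (bits - 1) - 1            # 127 for 8 bits, 7 for 4 bits, 1 for 2 bits
    scale = max(abs(v) for v in values) / qmax
    if scale == 0.0:
        return list(values)               # all-zero input: nothing to quantize
    return [max(-qmax, min(qmax, round(v / scale))) * scale for v in values]


def mean_abs_error(a: list[float], b: list[float]) -> float:
    """Average absolute difference between two equal-length lists."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


if __name__ == "__main__":
    weights = [0.5 * math.sin(0.37 * i) for i in range(256)]  # deterministic stand-in
    for bits in (8, 4, 2):
        err = mean_abs_error(weights, symmetric_quantize(weights, bits))
        print(f"{bits}-bit: mean abs error = {err:.4f}")
```

The error grows as the bit width shrinks, which is why aggressive compression usually has to be validated, or compensated for with techniques such as quantization-aware training, before deployment.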

TinyML vs Cloud-Based AI

| Feature | TinyML (Edge) | Cloud-Based AI |
|---|---|---|
| Latency | Ultra-low (real-time, on-device decisions) | Higher (network round trip; depends on connectivity) |
| Power Consumption | Very low (battery-friendly) | High (data-center servers) |
| Data Privacy | High (local processing) | Moderate (data sent to servers) |
| Scalability | Easy to deploy across many devices | Limited by infrastructure costs |
| Model Size | Tiny, optimized models | Large, complex deep learning models |

Case Studies

1. Google’s Keyword Spotting

Google developed ultra-lightweight models for wake-word detection (“Hey Google”) that run on microcontrollers, consuming only a few milliwatts.

2. Smart Agriculture in Africa

TinyML-powered sensors monitor soil and weather conditions in rural farms, helping farmers optimize irrigation and increase crop yields.

3. Healthcare Monitoring

Wearables like Fitbit integrate TinyML for real-time health monitoring, enabling users to detect anomalies without constant cloud uploads.

Future of TinyML and Edge AI

The future of TinyML looks promising, with trends shaping its adoption:

- Integration with 5G and Edge Networks: TinyML will complement low-latency 5G connectivity, enabling hybrid cloud-edge AI.
- Hardware Advancements: New MCUs and AI accelerators (such as ARM Ethos-U NPUs, Syntiant’s Neural Decision Processors, and GreenWaves GAP9) are emerging and boosting efficiency.
- Federated Learning: TinyML devices may collaboratively train models without sharing raw data, enhancing personalization and privacy.
- Sustainability: TinyML can substantially reduce energy consumption in IoT ecosystems, making technology more eco-friendly.
- Democratization of AI: Platforms like Edge Impulse and Arduino are lowering barriers, allowing even non-experts to build edge AI applications.
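Federated learning typically relies on an aggregation step such as federated averaging (FedAvg): devices train locally and share only model updates, which a server combines. The sketch below shows just the sample-weighted averaging step, with hypothetical devices and numbers; local training, secure transport, and privacy protections for the updates themselves are out of scope.

```python
# Minimal sketch of the federated averaging aggregation step: each device
# reports its locally trained weights and sample count (never raw data),
# and the server computes a sample-weighted average. Numbers are hypothetical.


def federated_average(updates: list[tuple[list[float], int]]) -> list[float]:
    """Average weight vectors, weighting each device by its number of local samples."""
    total = sum(n for _, n in updates)
    if total == 0:
        raise ValueError("no training samples reported")
    dim = len(updates[0][0])
    avg = [0.0] * dim
    for weights, n in updates:
        if len(weights) != dim:
            raise ValueError("all devices must share the same model shape")
        for i, w in enumerate(weights):
            avg[i] += w * n / total
    return avg


if __name__ == "__main__":
    device_updates = [
        ([0.10, -0.20], 100),  # hypothetical device A: weights, local sample count
        ([0.30, 0.00], 300),   # hypothetical device B
    ]
    print(federated_average(device_updates))
```

Weighting by sample count keeps devices with very little local data from pulling the shared model as strongly as devices that trained on more examples.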

Conclusion

TinyML is ushering in a new era of intelligence at the edge. By enabling low-power, on-device AI, it addresses many limitations of cloud-based models and opens possibilities for billions of devices worldwide. Whether it’s a smartwatch predicting health issues, a drone monitoring crops, or a sensor detecting machine faults, TinyML empowers devices to think locally and act instantly.

As hardware, frameworks, and algorithms evolve, TinyML will continue to grow, bringing AI everywhere—affordable, scalable, and sustainable. The convergence of TinyML and edge computing is not just a trend; it’s the foundation for the next wave of AI innovation.
