Negative Training Data: Challenges, Applications, and Future Directions

Introduction

In machine learning (ML) and artificial intelligence (AI), data is often called the new oil. The quality, diversity, and volume of training data largely determine how well a model performs in real-world scenarios. Most discussions in AI focus on positive training data: datasets curated with correct, high-quality, and relevant examples that help a model learn desired behavior. Equally important, and often overlooked, is negative training data.

Negative training data refers to examples of incorrect, undesirable, or irrelevant information that are included in training on purpose, so that a model learns to distinguish “right” from “wrong.” Used well, such examples do more than raise accuracy: they can help models generalize better, reduce bias, and resist adversarial manipulation. From natural language processing (NLP) to computer vision, negative training data plays a vital role in shaping robust and reliable AI systems.

This article explores what negative training data is, why it matters, its challenges, practical applications, and where the future is heading.

What is Negative Training Data?

Negative training data is data that shows what a model should not learn or predict. Unlike positive examples, which reinforce correct outcomes, negative data represents wrong answers, irrelevant inputs, or counterexamples.

For instance:

- In spam detection, negative data could be legitimate (non-spam) emails.
- In image recognition, it could include pictures of objects that are not cats when training a “cat vs. not-cat” model.
- In sentiment analysis, it could consist of neutral or sarcastic text, included so that the model does not misclassify such text as plainly positive or negative sentiment.

Essentially, negative training data teaches AI models to say: “This is not what I am looking for.”

Why Negative Training Data Matters

1. Improves Model Generalization

A model trained only on positive examples has nothing to contrast them with. It may overfit, performing well on training data but poorly on new data, or it may accept almost any input as a match. Negative data introduces that contrast, helping the system generalize to unseen scenarios.

2. Reduces False Positives

In domains like fraud detection, cybersecurity, or medical diagnosis, false positives can be costly. Negative training data shows the system what harmless cases look like, which reduces the number of harmless cases flagged as threats.
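To make "false positive" concrete, here is a minimal sketch of how the false positive rate could be measured on a labeled evaluation set. The labels and predictions are invented for illustration, and the function name is hypothetical.

```python
def false_positive_rate(y_true, y_pred):
    """Fraction of truly negative cases that were flagged as positive."""
    false_pos = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    true_neg = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    negatives = false_pos + true_neg
    if negatives == 0:
        raise ValueError("no negative examples to evaluate against")
    return false_pos / negatives


# Hypothetical labels: 1 = fraud, 0 = legitimate transaction.
y_true = [0, 0, 0, 0, 1, 1, 0, 1]
y_pred = [0, 1, 0, 0, 1, 0, 0, 1]

fpr = false_positive_rate(y_true, y_pred)
print(f"false positive rate: {fpr:.2f}")  # false positive rate: 0.20
```

The rate can only be computed when the evaluation set contains negative examples, which is one practical reason to collect them.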

3. Enhances Robustness Against Bias

Carefully chosen additional examples can balance skewed datasets. For example, if an image dataset for face recognition contains mostly young faces, adding examples of older faces and of a wider range of people helps reduce age or racial bias.

4. Critical for Adversarial Defense

Attackers often try to fool AI with adversarial inputs. Negative training data, when carefully designed, strengthens models against such manipulation by showing them what “bad inputs” look like.
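One well-known way to produce such "bad inputs" is the fast gradient sign method (FGSM), which nudges each feature in the direction that increases the model's loss. The article does not name a specific technique, so the sketch below is an illustrative assumption. It uses a toy logistic regression model with made-up weights.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def log_loss(w, b, x, y):
    """Binary cross-entropy of a logistic regression model on one example."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def sign(g):
    return (g > 0) - (g < 0)


def fgsm_perturb(w, b, x, y, epsilon):
    """Shift each feature by epsilon in the direction that increases the loss."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    # For logistic regression, d(loss)/d(x_i) = (p - y) * w_i.
    grad = [(p - y) * wi for wi in w]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


# Hypothetical model parameters and a correctly classified input.
w, b = [2.0, -1.0], 0.0
x, y = [1.0, 0.5], 1

x_adv = fgsm_perturb(w, b, x, y, epsilon=0.5)
print(x_adv)                                       # [0.5, 1.0]
print(log_loss(w, b, x_adv, y) > log_loss(w, b, x, y))  # True
```

In adversarial training, perturbed inputs like `x_adv` are added to the training set with their original, correct label, so the model learns not to be moved by the perturbation.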

5. Ethical and Safe AI Deployment

For AI systems in healthcare, education, or autonomous driving, mistakes can be harmful. Negative examples help models avoid unsafe predictions and align more closely with ethical standards.

Sources of Negative Training Data

Negative training data can come from multiple sources, depending on the domain and use case:

- Natural Negative Examples – Naturally occurring irrelevant or opposite data points, such as pictures of dogs in a “cat recognition” dataset.
- Synthetic Negative Data – Artificially generated examples created through simulations, adversarial attacks, or generative models.
- Counterfactual Data – Modified versions of positive data with changes that make them incorrect. For example, changing “The sky is blue” to “The sky is green” in a text dataset.
- Human-Labelled Negatives – Instances manually identified by experts as incorrect or misleading.
- Noise Injection – Random corruptions added intentionally to train robustness.
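Two of the sources above, counterfactual data and noise injection, are simple enough to sketch in a few lines. The following is a minimal illustration, not a production pipeline: the swap table, function names, and corruption scheme are all assumptions made for the example.

```python
import random


def make_counterfactual(sentence, swaps):
    """Swap the first word found in `swaps` so a true statement becomes false.

    Returns None when no word matches, so callers can skip that sentence.
    """
    words = sentence.split()
    for i, word in enumerate(words):
        core = word.strip(".,!?")
        if core and core.lower() in swaps:
            words[i] = word.replace(core, swaps[core.lower()])
            return " ".join(words)
    return None


def inject_noise(text, rate, rng):
    """Replace each letter with a random lowercase letter with probability `rate`."""
    alphabet = "abcdefghijklmnopqrstuvwxyz"
    return "".join(
        rng.choice(alphabet) if ch.isalpha() and rng.random() < rate else ch
        for ch in text
    )


swaps = {"blue": "green"}  # hypothetical swap table
print(make_counterfactual("The sky is blue.", swaps))  # The sky is green.

rng = random.Random(0)  # seeded so the corruption is reproducible
print(inject_noise("The sky is blue.", rate=0.2, rng=rng))
```

The counterfactual keeps the sentence structure and changes only the fact, which is what makes it a useful contrast to the original. The noisy version keeps its length and punctuation, so it can be paired with the clean text during training.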

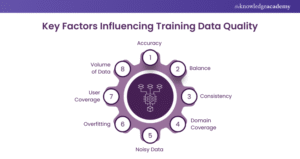

Challenges of Negative Training Data

While negative training data is essential, working with it is not without difficulties.

1. Defining What Counts as Negative

In many cases, it’s unclear what should be labeled as negative. For example, a sarcastic remark in sentiment analysis can be read as either negative or neutral, depending on interpretation.

2. Data Imbalance

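Positive and negative examples are rarely available in equal numbers. Some datasets have far fewer negative examples than positive ones, while in others, such as fraud detection, negatives vastly outnumber positives. Either way, the imbalance skews training.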
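One common response to imbalance is to weight each class inversely to its frequency, so that the rarer class counts for more in the loss. The sketch below assumes the heuristic n_samples / (n_classes * class_count), which is the same formula scikit-learn uses for `class_weight="balanced"`. The label counts are invented.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical dataset: 90 positive examples (1) and only 10 negative ones (0).
labels = [1] * 90 + [0] * 10
weights = balanced_class_weights(labels)

print(weights[0])            # 5.0
print(round(weights[1], 3))  # 0.556
```

With these weights, each negative example contributes nine times as much as each positive one, which offsets the 9-to-1 ratio in the data.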

3. Annotation Complexity

Labeling negative data can be more challenging than labeling positive data. It requires domain knowledge and sometimes subjective judgment.

4. Risk of Over-Penalization

Too much emphasis on negative examples can make the model overly cautious, so that it rejects legitimate cases and fails to recognize real patterns.
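The cost of over-caution can be seen in miniature by moving a classifier's decision threshold. This is an analogy rather than a reproduction of training on too many negatives, and the scores and labels are invented. As the threshold rises, false positives disappear, but so do correctly recognized positives.

```python
def rates_at_threshold(scores, y_true, threshold):
    """Return (recall, false_positive_rate) when predicting 1 for score >= threshold.

    Assumes y_true contains at least one positive and one negative label.
    """
    tp = fp = fn = tn = 0
    for score, truth in zip(scores, y_true):
        predicted = 1 if score >= threshold else 0
        if truth == 1 and predicted == 1:
            tp += 1
        elif truth == 1:
            fn += 1
        elif predicted == 1:
            fp += 1
        else:
            tn += 1
    return tp / (tp + fn), fp / (fp + tn)


# Hypothetical model scores with their true labels (1 = positive).
scores = [0.95, 0.80, 0.65, 0.55, 0.40, 0.30, 0.20, 0.10]
y_true = [1, 1, 0, 1, 1, 0, 0, 0]

for threshold in (0.3, 0.5, 0.7, 0.9):
    recall, fpr = rates_at_threshold(scores, y_true, threshold)
    print(f"threshold={threshold}: recall={recall:.2f}, fpr={fpr:.2f}")
# threshold=0.3: recall=1.00, fpr=0.50
# threshold=0.5: recall=0.75, fpr=0.25
# threshold=0.7: recall=0.50, fpr=0.00
# threshold=0.9: recall=0.25, fpr=0.00
```

Past a threshold of 0.7 the false positive rate cannot improve any further, yet recall keeps falling. That is the pattern to watch for when negatives are weighted too heavily.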

5. Ethical Concerns

In sensitive areas like mental health or law enforcement, negative training data may unintentionally reinforce stereotypes or harmful patterns if not curated responsibly.

Applications of Negative Training Data

1. Natural Language Processing (NLP)

- Spam and Hate Speech Detection: Models use negative data (harmless or neutral text) to avoid false alarms.
- Machine Translation: Negative examples help prevent mistranslations by contrasting correct and incorrect outputs.
- Chatbots & Conversational AI: Negative training helps bots avoid toxic, biased, or nonsensical replies.

2. Computer Vision

- Object Recognition: Identifying “not-target” images helps systems avoid confusing cats with dogs, or pedestrians with traffic signs.
- Medical Imaging: Negative scans help avoid misdiagnosis, e.g., distinguishing cancerous from non-cancerous tissue.

3. Cybersecurity

- Intrusion Detection: Negative data includes benign network activity to reduce false alarms.
- Malware Classification: Helps systems distinguish between real threats and harmless files.

4. Healthcare AI

- Diagnostic Models: Negative examples help ensure a system doesn’t over-diagnose healthy patients.
- Drug Discovery: Negative molecular data helps AI eliminate ineffective drug candidates.

5. Autonomous Systems

- Self-Driving Cars: Negative examples include irrelevant road objects like shadows or harmless debris, so the vehicle learns not to treat them as hazards.
- Robotics: Teaches robots to avoid incorrect actions (e.g., dropping fragile objects).

Case Studies

Case 1: Google’s Spam Filter

A spam filter such as Gmail’s has to learn from both spam and legitimate mail. Legitimate emails act as negative examples so that the filter doesn’t mistakenly classify important work messages as spam.

Case 2: Medical AI for Skin Cancer Detection

A study found that models trained only on positive images of malignant melanoma often misclassified harmless moles as cancerous. By adding negative examples of benign skin conditions, accuracy improved significantly.

Case 3: Adversarial Image Defense

MIT researchers demonstrated that models trained with adversarially generated negative images resisted fooling attempts far better than models without such data.

Best Practices for Using Negative Training Data

- Balance Positive and Negative Data – Avoid imbalance that skews model predictions.
- Diversity in Negative Data – Include varied negative examples to enhance robustness.
- Use Human-in-the-Loop Systems – Involve domain experts to label complex negative examples.
- Monitor Model Performance Regularly – Evaluate false positives and negatives continuously.
- Ethical Curation – Ensure negative data doesn’t reinforce harmful biases or stereotypes.

Future Directions

The role of negative training data is expected to expand as AI becomes more integrated into society. Emerging areas include:

- Automated Negative Data Generation: Using generative AI to create realistic, diverse counterexamples.
- Explainable Negatives: Designing systems that not only reject inputs but also explain why they are negative.
- Bias-Aware Negatives: Curating datasets that specifically tackle fairness and ethical concerns.
- Self-Supervised Learning: Leveraging unlabeled data where models learn positive and negative associations automatically.
- Adversarial AI: Using negative training data to build resilience against increasingly sophisticated attacks.
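As an illustration of the self-supervised direction, contrastive learning is one widely used approach in which negatives come from the data itself, with no labels. The sketch below computes an InfoNCE-style loss for one anchor. The article does not name this loss, so treat it as an assumed example, and the embedding vectors are made up.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce_loss(anchor, positive, negatives, temperature=0.1):
    """Low when the anchor is closer to its positive than to every negative."""
    pos = math.exp(cosine(anchor, positive) / temperature)
    neg = sum(math.exp(cosine(anchor, n) / temperature) for n in negatives)
    return -math.log(pos / (pos + neg))


# Hypothetical 2-D embeddings. The positive is another view of the anchor.
anchor = [1.0, 0.0]
positive = [0.9, 0.1]

easy_negatives = [[0.0, 1.0], [-1.0, 0.0]]  # clearly different from the anchor
hard_negatives = [[0.8, 0.2]]               # almost as close as the positive

easy_loss = info_nce_loss(anchor, positive, easy_negatives)
hard_loss = info_nce_loss(anchor, positive, hard_negatives)
print(easy_loss < hard_loss)  # True
```

Negatives that resemble the anchor produce a larger loss, so they drive more of the learning than negatives that are already easy to tell apart.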

Conclusion

Negative training data may not be as glamorous as positive examples, but it is equally important for building reliable, fair, and safe AI. By teaching models not just what to do but also what to avoid, negative data supports better generalization, robustness, and ethical alignment.

As AI continues to shape critical sectors like healthcare, cybersecurity, and transportation, the importance of negative training data will only grow. The future of trustworthy AI depends not only on the data we feed it but also on the mistakes we prevent it from learning.
