Robust AI Models: Building Reliability in the Age of Artificial Intelligence

Artificial Intelligence (AI) is rapidly transforming industries, from healthcare to finance, manufacturing to transportation. However, while the capabilities of AI have grown significantly, one of the most pressing challenges remains ensuring that these models are robust. Robust AI models are designed to perform reliably in real-world environments, withstand adversarial conditions, adapt to uncertainty, and maintain fairness and security.

In this article, we’ll explore what robust AI models are, why they matter, the challenges in building them, approaches to achieve robustness, and their implications for the future of AI.

What Are Robust AI Models?

In simple terms, robustness in AI refers to the ability of a model to maintain its performance when faced with unexpected data, environmental changes, or deliberate manipulations. While many models perform well on carefully curated training and test sets, their performance often deteriorates in real-world scenarios.

A robust AI model is not just accurate under ideal conditions but remains effective when conditions change. For example:

- A facial recognition system that works under different lighting conditions and camera angles.
- A self-driving car that can recognize pedestrians in rain, fog, or snow.
- A medical AI tool that diagnoses diseases correctly even when patient data is incomplete or slightly noisy.

Robustness ensures trust, reliability, and safety—qualities that are critical when deploying AI in high-stakes environments.

Why Robustness in AI Matters

The importance of robustness goes beyond just technical performance. Let’s break down why it is essential:

1. Safety and Reliability

In safety-critical systems, such as autonomous vehicles or medical diagnostics, even small model errors can have catastrophic consequences. Robustness ensures that the system remains reliable despite unforeseen circumstances.

2. Security Against Adversarial Attacks

AI systems can be tricked by adversarial inputs—slightly modified data crafted to fool the model. For instance, a small sticker on a stop sign might cause a self-driving car’s vision system to misclassify it. Robust AI models need defenses against such attacks.

3. Generalization Beyond Training Data

Real-world data rarely matches training datasets perfectly. Robust AI models can generalize well, adapting to variations in input data, domains, and contexts.

4. Fairness and Bias Mitigation

Robustness also extends to ethical considerations. A model must perform consistently across demographic groups without being biased against certain populations.

5. Trust and Adoption

For industries and consumers to fully trust AI, models must demonstrate resilience, consistency, and reliability in diverse scenarios.

Challenges in Building Robust AI Models

Despite its importance, robustness is not easy to achieve. Several challenges exist:

- Adversarial Vulnerabilities: Deep learning models, in particular, are highly vulnerable to adversarial attacks. Small, often imperceptible perturbations in images, audio, or text can lead to incorrect predictions.
- Data Distribution Shift: Models trained on one dataset often struggle when deployed in different environments. This change in the data distribution, often called “domain shift,” is a common challenge.
- Limited Training Data: Real-world robustness requires massive, diverse datasets. However, gathering balanced and representative data across all conditions is difficult.
- Overfitting to Training Conditions: AI models often memorize training patterns rather than learning generalizable features, leading to brittleness when faced with new data.
- Complexity of Real-World Environments: Environments like healthcare or autonomous driving are too complex to simulate perfectly. This makes robustness testing challenging.
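Of these challenges, distribution shift is one that can at least be monitored once a model is deployed. The sketch below compares a feature's values at training time with its values in production using the two-sample Kolmogorov-Smirnov statistic, which is the largest gap between the two empirical distributions. The function names and the 0.2 alert threshold are illustrative assumptions, not values from any particular system.

```python
def ks_statistic(reference, live):
    """Largest gap between the empirical CDFs of two samples (0 = identical, 1 = disjoint)."""
    a, b = sorted(reference), sorted(live)
    i = j = 0
    gap = 0.0
    while i < len(a) and j < len(b):
        x = min(a[i], b[j])
        # Advance both samples past every value <= x, then compare CDF heights.
        while i < len(a) and a[i] <= x:
            i += 1
        while j < len(b) and b[j] <= x:
            j += 1
        gap = max(gap, abs(i / len(a) - j / len(b)))
    return gap


def has_drifted(reference, live, threshold=0.2):
    # The threshold is an assumption; in practice it is tuned per feature.
    return ks_statistic(reference, live) > threshold
```

A check like this only flags that inputs have changed. Whether the change actually hurts accuracy still has to be confirmed on labeled data.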

Approaches to Building Robust AI Models

Researchers and practitioners have developed multiple strategies to improve robustness. Below are some of the most effective:

1. Adversarial Training

This involves deliberately introducing adversarial examples during training, forcing the model to learn resilience against such manipulations. While effective, it can be computationally expensive.
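As a rough illustration, here is a minimal sketch of adversarial training for a tiny logistic regression model in plain Python. It uses the fast gradient sign method (FGSM) to create the adversarial examples, which is one common choice among many. The toy dataset, the function names, and the perturbation size `eps` are all assumptions made for the example.

```python
import math
import random


def sigmoid(z):
    # Clamp so math.exp never overflows and the output is never exactly 0 or 1.
    z = max(-30.0, min(30.0, z))
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """Move each feature by eps in the direction that increases the logistic loss."""
    err = predict(w, b, x) - y  # d(loss)/dx_i = err * w_i for logistic regression
    return [xi + eps * sign(err * wi) for xi, wi in zip(x, w)]


def toy_data(n=200, seed=0):
    """Two Gaussian blobs centred at (-1, -1) and (1, 1), labelled 0 and 1."""
    rng = random.Random(seed)
    data = []
    for k in range(n):
        y = k % 2
        centre = 1.0 if y == 1 else -1.0
        data.append(([rng.gauss(centre, 0.7), rng.gauss(centre, 0.7)], y))
    return data


def train(data, eps=0.0, epochs=50, lr=0.1, seed=0):
    """SGD on clean examples; if eps > 0, also on an FGSM copy of each example."""
    rng = random.Random(seed)
    w, b = [0.0] * len(data[0][0]), 0.0
    data = list(data)
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            batch = [(x, y)]
            if eps > 0:
                batch.append((fgsm(w, b, x, y, eps), y))
            for xs, ys in batch:
                err = predict(w, b, xs) - ys
                w = [wi - lr * err * xi for wi, xi in zip(w, xs)]
                b -= lr * err
    return w, b


def accuracy(w, b, data, eps=0.0):
    """Accuracy on clean inputs, or on FGSM-perturbed inputs when eps > 0."""
    hits = 0
    for x, y in data:
        if eps > 0:
            x = fgsm(w, b, x, y, eps)
        hits += int((predict(w, b, x) >= 0.5) == (y == 1))
    return hits / len(data)


data = toy_data()
w_plain, b_plain = train(data)
w_robust, b_robust = train(data, eps=0.3)
print("plain :", accuracy(w_plain, b_plain, data), accuracy(w_plain, b_plain, data, eps=0.3))
print("robust:", accuracy(w_robust, b_robust, data), accuracy(w_robust, b_robust, data, eps=0.3))
```

Each training step processes two examples instead of one, which is where the extra compute comes from. On a linear toy model like this the gain is usually modest; the point is the shape of the training loop.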

2. Data Augmentation

By enriching training data with variations (rotations, lighting changes, noise, paraphrasing in text), models become more adaptable to real-world conditions.
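A small sketch of what this can look like for images, using only the standard library. It treats an image as a list of rows of grayscale values in [0, 1] and applies a random brightness shift (a stand-in for lighting changes), pixel noise, and a horizontal flip. The flip, the parameter values, and the function names are assumptions for illustration; real pipelines usually rely on an image library.

```python
import random


def augment(image, rng, noise_std=0.05, max_brightness=0.2):
    """Return a randomly perturbed copy of a grayscale image (rows of floats in [0, 1])."""
    out = [row[:] for row in image]  # copy so the original is left untouched
    if rng.random() < 0.5:
        out = [row[::-1] for row in out]  # horizontal flip
    shift = rng.uniform(-max_brightness, max_brightness)  # global lighting change
    return [
        [min(1.0, max(0.0, px + shift + rng.gauss(0.0, noise_std))) for px in row]
        for row in out
    ]


def augmented_dataset(images, labels, copies=3, seed=0):
    """Keep every original example and add `copies` perturbed versions with the same label."""
    rng = random.Random(seed)
    out_images, out_labels = list(images), list(labels)
    for image, label in zip(images, labels):
        for _ in range(copies):
            out_images.append(augment(image, rng))
            out_labels.append(label)
    return out_images, out_labels
```

Augmentations should only be used when they preserve the label. A horizontal flip is harmless for most photos but would be wrong for images of text, for example.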

3. Regularization Techniques

Regularization methods like dropout, weight decay, or early stopping reduce overfitting, making models more generalizable.
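Two of these ideas, weight decay and early stopping, fit in a few lines. The sketch below trains a linear regression model with gradient descent, shrinks the weights a little on every step, and stops once the validation loss has not improved for `patience` epochs. All names and default values are assumptions chosen for the example.

```python
def mse(w, data):
    """Mean squared error of a linear model w on (features, target) pairs."""
    return sum((sum(wi * xi for wi, xi in zip(w, x)) - y) ** 2 for x, y in data) / len(data)


def fit(train, val, lr=0.05, weight_decay=0.01, patience=5, max_epochs=500):
    dim = len(train[0][0])
    w = [0.0] * dim
    best_w, best_val, stale = w[:], mse(w, val), 0
    for _ in range(max_epochs):
        grad = [0.0] * dim
        for x, y in train:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for i, xi in enumerate(x):
                grad[i] += 2 * err * xi / len(train)
        # Weight decay: pull every weight toward zero in addition to the gradient step.
        w = [wi - lr * (gi + weight_decay * wi) for wi, gi in zip(w, grad)]
        val_loss = mse(w, val)
        if val_loss < best_val - 1e-9:
            best_w, best_val, stale = w[:], val_loss, 0
        else:
            # Early stopping: give up after `patience` epochs without improvement.
            stale += 1
            if stale >= patience:
                break
    return best_w, best_val
```

The function returns the weights from the best validation epoch rather than the last one, which is the part of early stopping that actually limits overfitting.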

4. Robust Architectures

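Architecture choices can also help. Examples include convolutional neural networks (CNNs) with added defensive layers, and Bayesian models, which represent uncertainty explicitly and so tend to handle noise more gracefully. No architecture is robust by default, so these choices work best alongside the other techniques in this list.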

5. Uncertainty Estimation

Incorporating probabilistic approaches allows AI models to measure their own confidence in predictions. If uncertainty is high, human oversight can be introduced.
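One simple way to sketch this is to turn a classifier's raw scores into probabilities, measure how spread out they are with entropy, and hand the case to a person when the spread is too large. The function names and the 0.5 cut-off below are assumptions, and softmax entropy is only a rough proxy for uncertainty; ensembles or Bayesian methods usually give better estimates.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    return -sum(p * math.log(p) for p in probs if p > 0)


def decide(logits, max_entropy_fraction=0.5):
    """Return (predicted class, uncertainty) or ("defer_to_human", uncertainty).

    Assumes at least two classes. Uncertainty is entropy divided by log(K),
    so 0 means fully confident and 1 means a uniform guess over K classes.
    """
    probs = softmax(logits)
    score = entropy(probs) / math.log(len(probs))
    if score > max_entropy_fraction:
        return ("defer_to_human", score)
    return (probs.index(max(probs)), score)
```

For example, `decide([0.0, 0.0, 0.0])` defers because the three classes are equally likely, while `decide([10.0, 0.0, 0.0])` returns class 0 with a low uncertainty score.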

6. Explainable AI (XAI)

Robustness is not just about predictions but also understanding why a model made a decision. Explainable AI enhances transparency and trust, making it easier to identify when models fail.

7. Transfer Learning and Domain Adaptation

Using pre-trained models and adapting them to new environments helps reduce the impact of domain shift.

8. Robust Evaluation Metrics

Traditional accuracy metrics often fail to capture robustness. New benchmarks focus on adversarial accuracy, out-of-distribution (OOD) performance, and fairness indicators.

Case Studies of Robust AI in Action

1. Healthcare

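Google’s AI system for diabetic retinopathy detection performed well in lab evaluations but ran into problems when it was deployed in clinics in Thailand, where retinal images taken under poor lighting were often rejected as ungradable. The case shows why training and validation data need to reflect the conditions of the target setting, for example through more diverse data collection and augmentation.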

2. Autonomous Vehicles

Tesla and Waymo have invested heavily in robustness testing under extreme conditions—snow, rain, night driving—to ensure safety in unpredictable real-world environments.

3. Finance

AI models detecting fraud face adversarial challenges as fraudsters continuously adapt. Robust AI models use ensemble learning and anomaly detection to resist manipulation.

4. Cybersecurity

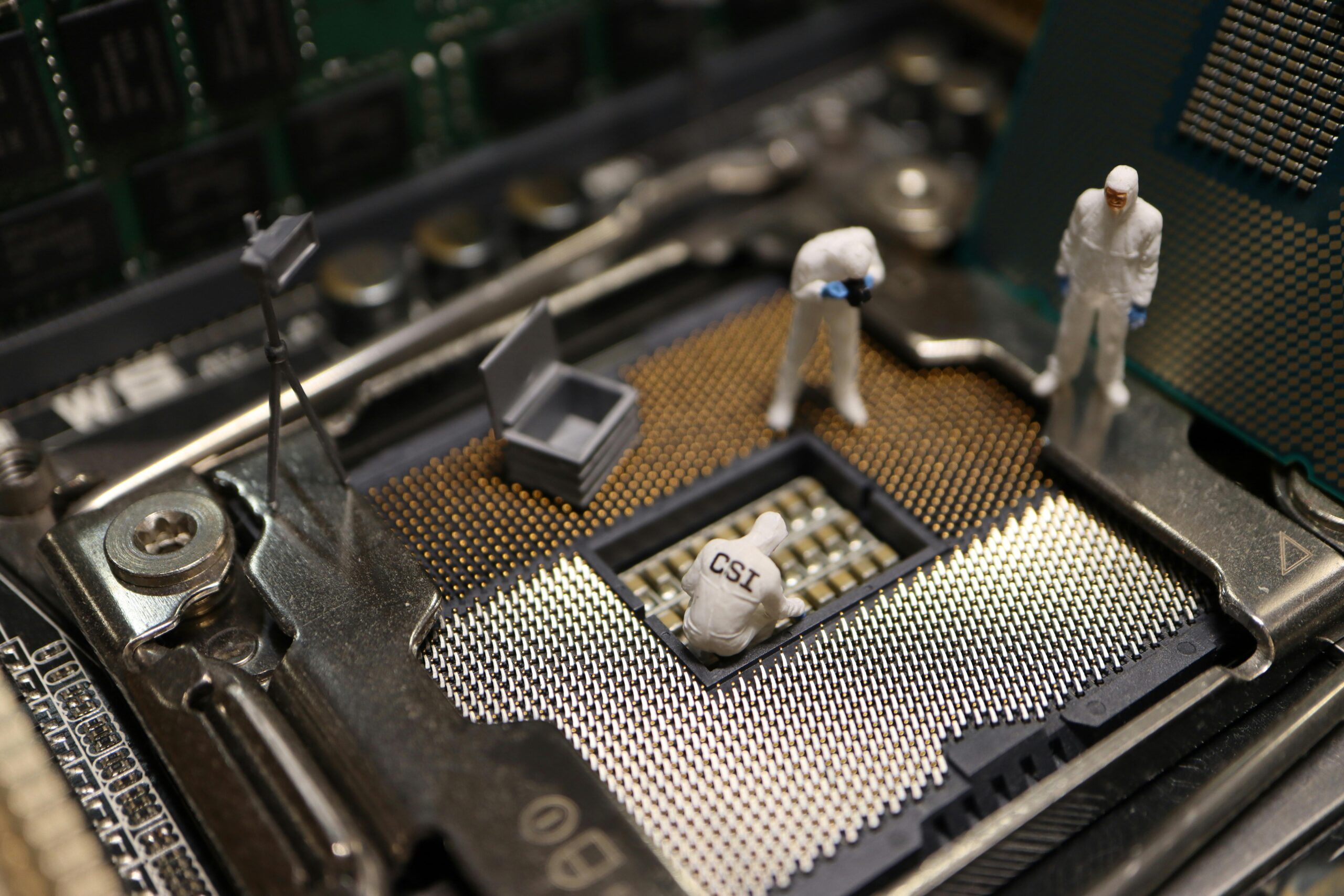

Intrusion detection systems powered by AI are prime targets for adversarial attacks. Robustness here means detecting even stealthy, adversarial intrusions while avoiding false positives.

Measuring Robustness: Key Metrics

Developers and researchers use various benchmarks to measure how robust an AI model is:

- Adversarial Accuracy: Performance under adversarial inputs.
- Out-of-Distribution (OOD) Detection: Ability to recognize when data doesn’t match training distribution.
- Fairness Metrics: Consistent performance across demographic groups.
- Calibration: Alignment between predicted confidence and actual accuracy.
- Robustness Scorecards: Holistic evaluation tools that combine multiple robustness measures.
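Two of these metrics are easy to compute by hand. The sketch below shows expected calibration error (ECE), a common way to quantify calibration, and a simple per-group accuracy gap as one possible fairness indicator. The function names, the ten-bin default, and the choice of accuracy gap as the fairness measure are assumptions for illustration.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average gap between mean confidence and accuracy within each confidence bin."""
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)  # confidence 1.0 goes in the last bin
        bins[idx].append((conf, ok))
    ece = 0.0
    for items in bins:
        if items:
            avg_conf = sum(c for c, _ in items) / len(items)
            acc = sum(1 for _, ok in items if ok) / len(items)
            ece += len(items) / len(confidences) * abs(avg_conf - acc)
    return ece


def accuracy_gap(correct, groups):
    """Return (largest accuracy difference between groups, accuracy per group)."""
    counts = {}
    for ok, group in zip(correct, groups):
        hits, total = counts.get(group, (0, 0))
        counts[group] = (hits + int(ok), total + 1)
    accs = {g: hits / total for g, (hits, total) in counts.items()}
    return max(accs.values()) - min(accs.values()), accs
```

A model that says it is 100% confident on two predictions and gets one wrong has an ECE of 0.5 for that pair. A scorecard would typically report numbers like these side by side with clean and adversarial accuracy.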

The Future of Robust AI Models

Robustness is increasingly becoming a non-negotiable requirement for AI deployment. Looking ahead, several trends are shaping this field:

- Hybrid Human-AI Systems: Instead of fully autonomous AI, many future systems will use human-in-the-loop designs, combining AI’s speed with human oversight for robustness.
- Self-Learning Models: AI models that can learn continuously from real-world interactions will adapt better to distribution shifts.
- Formal Verification of AI: Borrowing from software engineering, formal verification techniques are being applied to mathematically prove model robustness under specific conditions.
- Ethical Robustness: Beyond technical resilience, AI models must also be robust against ethical failures, such as bias, misinformation, and harmful decisions.
- Standardized Benchmarks: Global efforts are underway to create standardized robustness benchmarks, ensuring accountability and trust in AI systems.
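To make the formal verification idea concrete, here is the simplest case where a robustness guarantee can be computed exactly: a linear classifier. If every input feature can change by at most `eps`, the score can move by at most `eps` times the sum of the absolute weights, so the prediction provably cannot flip while the current score is larger than that. The function names are assumptions, and verifying deep networks needs much heavier machinery than this.

```python
def certified_radius(w, b, x):
    """Largest per-feature change (L-infinity radius) that cannot flip sign(w.x + b)."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    l1_norm = sum(abs(wi) for wi in w)
    if l1_norm == 0:
        return float("inf")  # the input has no influence on the prediction
    return abs(score) / l1_norm


def is_certified(w, b, x, eps):
    """True if no perturbation with every feature changed by at most eps can flip the prediction."""
    return certified_radius(w, b, x) > eps
```

With weights `[1.0, -1.0]`, bias `0.0`, and input `[2.0, 0.0]`, the score is 2 and the weights sum to 2 in absolute value, so the prediction is guaranteed stable for any per-feature change smaller than 1.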

Balancing Robustness with Efficiency

One challenge in developing robust models is the trade-off with efficiency. Adversarial training, uncertainty estimation, and ensemble methods often require more computation, larger datasets, and longer training times. The future lies in finding innovative techniques that provide both robustness and efficiency without compromising performance.

Conclusion

As AI continues to permeate every aspect of human life, ensuring that models are robust is essential for safety, fairness, and trust. A robust AI model does not crumble under adversarial pressure, does not discriminate against particular populations, and does not fail in unfamiliar conditions.

The journey to robust AI models is ongoing, with researchers exploring adversarial defenses, data augmentation, explainability, and uncertainty estimation. The ultimate goal is to create AI systems that are not only intelligent but also resilient, reliable, and responsible.

In a world where AI decisions increasingly influence healthcare outcomes, financial transactions, transportation safety, and personal freedoms, robustness is not just a technical requirement—it is a moral imperative.
