Google launching its Veo GenAI video model — what it is, why it matters, and how creators will use it

A new era for video: meet Veo

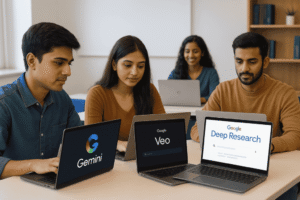

Google’s Veo is a family of generative-video models that turns text (and images) into short, high-quality video clips, now with native audio generation. The latest release, Veo 3, represents a significant leap in realism, prompt adherence, and multimodal control. With Veo 3, Google promises cinema-grade visuals, coherent physics and motion, and built-in audio (sound effects, ambient noise, and dialogue), so creators can generate short clips without assembling separate audio tracks. Veo is available across Google’s AI surfaces: Vertex AI for enterprises, the Gemini API and Google AI Studio for developers, and consumer access through the Gemini and Flow experiences.

Why Veo matters — more than just another “text-to-video” model

Text-to-video models have been evolving rapidly, but Veo 3 addresses three practical pain points:

- Integrated audio: unlike many earlier models that produced silent visuals requiring external sound design, Veo 3 generates synchronized audio (dialogue, Foley, ambience) alongside video. That cuts down on post-production steps.

- Prompt fidelity and physics: Google has invested heavily in ensuring that objects behave believably (lighting, gravity, motion continuity) and that outputs follow the user’s instructions closely, which is essential for trustworthy prototyping and cinematic output.

- Ecosystem access: Veo runs where creators already work: Vertex AI (enterprise/scale), the Gemini API and Google AI Studio (developers and creators), and Google’s AI apps for subscribers. That lowers friction for both experimentation and production.

Those three features make Veo not just an experimental toy but a practical tool for marketers, indie filmmakers, educators, and app developers — especially for rapid concepting and short-form content that dominates social platforms.

What Veo can (and can’t) do today

Capabilities

- Generate short, photoreal or stylized video clips from text or image prompts (Veo 3 focuses on ~8-second clips in some consumer surfaces, with higher fidelity options on Vertex AI).

- Produce native audio (dialogue, sound effects, ambient audio) that matches the visuals.

- Offer image-to-video conversion (turn a photo into a dynamic short clip) via Google AI Studio and Gemini.

- Provide API access for integration into apps, plus controls for aspect ratio, duration, and style through the Gemini API and Vertex AI.
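As a rough illustration of the controls listed above, the sketch below models a clip request as plain Python data and checks it before it would be sent anywhere. It does not call the Gemini API or Vertex AI. The `ClipRequest` class, its field names, the allowed aspect ratios, and the 8-second cap are all assumptions made for illustration (the cap simply mirrors the roughly 8-second clips mentioned above), so consult the official docs for the real parameter names and limits.

```python
from dataclasses import dataclass, asdict

# Hypothetical limits for illustration only; the real values live in Google's docs.
ALLOWED_ASPECT_RATIOS = {"16:9", "9:16"}
MAX_DURATION_SECONDS = 8


@dataclass
class ClipRequest:
    """Hypothetical container for the settings a video request might carry."""
    prompt: str
    aspect_ratio: str = "16:9"
    duration_seconds: int = 8
    style: str = "photoreal"

    def validate(self) -> list:
        """Return a list of human-readable problems (empty if none are found)."""
        problems = []
        if not self.prompt.strip():
            problems.append("prompt is empty")
        if self.aspect_ratio not in ALLOWED_ASPECT_RATIOS:
            problems.append(f"unsupported aspect ratio: {self.aspect_ratio}")
        if not 1 <= self.duration_seconds <= MAX_DURATION_SECONDS:
            problems.append(f"duration must be 1-{MAX_DURATION_SECONDS} seconds")
        return problems


def to_payload(request: ClipRequest) -> dict:
    """Convert a request into a plain dict, raising ValueError if it is invalid."""
    problems = request.validate()
    if problems:
        raise ValueError("; ".join(problems))
    return asdict(request)
```

Validating locally like this is only a convenience: it catches obvious mistakes before a paid render is requested, while the service itself remains the authority on what it accepts.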

Limitations (important to know)

- Duration and complexity: many consumer surfaces currently optimize for short clips (around 8 seconds is commonly mentioned), so Veo isn’t (yet) a drop-in replacement for full-length, multi-scene filmmaking.

- Human fidelity: while scenes and creatures can look impressive, sustained human expressions and complex crowd dynamics sometimes show artifacts (faces, hand motion issues), a known challenge in generative video. Recent reviews find Veo 3 strong in atmosphere and cinematic shots but still imperfect with detailed human interaction.

- Computational cost and access tiers: higher fidelity or enterprise use will typically be available via paid tiers on Vertex AI or the Gemini API; consumer access is generally gated by subscription plans.

Behind the curtain: how Veo works (at a high level)

Google’s public materials and developer docs describe Veo as a multimodal generative model trained to map text and image prompts into coherent sequences of frames and, crucially, into audio tracks that align with motion. It borrows ideas from diffusion and transformer architectures tailored to spatio-temporal generation, and it benefits from Google’s investments in multimodal instruction-tuned models (the Gemini lineage) as well as integration with Vertex AI infrastructure. For developers, Google provides SDKs and example code for calling the Gemini API and Vertex AI endpoints, with model cards available and safety filters applied server-side.

Practical use cases — who benefits most?

- Marketing and short ads: rapid prototyping of short ads and social creatives without a full shoot. Brands can iterate on concepts at low cost.

- Content creators and social media: creators can produce viral-ready short clips (memes, stylized scenes, quick skits) faster, fueling the rise of “AI-first” content trends. Recent reporting shows this style of content already proliferating across platforms.

- Previsualization for filmmakers: directors and VFX teams can quickly mock up shot ideas during development to test mood, framing, and pacing before committing to a full production.

- Education and e-learning: educators can create short illustrative clips (historical reenactments, science demos) without expensive production.

- Enterprise automation: businesses can generate product demos, animated explainers, and training clips at scale using Vertex AI and the Gemini API.

Ethics, safety and watermarking — Google’s mitigation steps

Generative video raises obvious concerns: misinformation, deepfakes, copyright infringement, and harmful content. Google acknowledges these risks and applies several mitigations:

- SynthID watermarking: videos produced by Veo models include digital SynthID watermarks to mark content as AI-generated, which can help downstream platforms and researchers detect synthetic media.

- Policy filters and safety models: Google applies content policy enforcement in the API and user interfaces to block or rate-limit requests for violent, sexual, or otherwise disallowed content.

- Access controls and usage policies: enterprise and developer terms restrict misuse; Google’s docs emphasize responsible use and provide model cards and guidance.

These steps are important but not foolproof. Watermarks can be removed with enough effort, and policy filters may be bypassed by adversarial prompts. That means platform-level content moderation and verification practices will need to evolve alongside models like Veo.

Pricing and availability

Google has rolled out Veo across multiple access tiers: Vertex AI for enterprise customers, the Gemini API and Google AI Studio for developers, and limited consumer access through Google AI Pro/Ultra subscriptions and the Gemini app and Flow experience. Higher-fidelity or production use is generally behind paid tiers or paid preview programs, while some trial or low-volume access may be available to free users via Google AI Studio or promotional previews. Check the Gemini API and Vertex AI docs for the most recent pricing and quotas.

How creators are testing Veo 3 — early impressions

Hands-on reviews and tutorials published since Veo 3’s release show impressive outcomes for imaginative scenes (aliens, stylized creatures, environmental shots) and for image-to-video conversions that add life to photographs. But reviewers also note glitches when scenes require nuanced human interaction: faces, lip sync for complex dialogue, and precise choreography remain the hardest challenges. The consensus is that Veo 3 raises the bar for usable, cinematic short clips, but human oversight and editing remain essential for high-stakes or long-form projects.

Tips for getting the best results with Veo

If you’re planning to experiment with Veo, these practical tips will save time:

- Be specific in prompts: describe camera angles, lighting, mood, and movement (e.g., “medium close-up, golden hour, slow dolly out, ambient rain”). The model responds to cinematic direction.

- Limit characters and complexity: fewer moving elements yield fewer artifacts; single-subject scenes often look better than dense crowd scenes.

- Iterate fast: use Google AI Studio’s preview to tweak prompts and parameters before committing to higher-fidelity or paid renders.

- Post-process selectively: even great outputs benefit from light editing; color grading, audio cleanup, and minor frame fixes improve polish.
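The first and third tips can be sketched in a few lines of Python: keep the subject and each cinematic direction as separate pieces, then join them into one prompt and vary a single element at a time. This is only a text-assembly helper under our own assumptions. The function names, the field breakdown, and the example subject are illustrative, and nothing here calls Veo or any Google SDK.

```python
def build_prompt(subject, shot="", lighting="", camera_move="", audio=""):
    """Join a subject with optional cinematic directions into one prompt string."""
    parts = [subject.strip()]
    for detail in (shot, lighting, camera_move, audio):
        if detail.strip():  # skip directions that were left blank
            parts.append(detail.strip())
    return ", ".join(parts)


def prompt_variants(subject, lightings, **fixed):
    """Create one prompt per lighting option so the results can be compared."""
    return [build_prompt(subject, lighting=option, **fixed) for option in lightings]


prompt = build_prompt(
    "a lighthouse keeper reading a letter",  # illustrative subject
    shot="medium close-up",
    lighting="golden hour",
    camera_move="slow dolly out",
    audio="ambient rain",
)
print(prompt)
# a lighthouse keeper reading a letter, medium close-up, golden hour, slow dolly out, ambient rain
```

Changing one direction per variant (here, lighting) makes it easier to tell which wording actually changed the output when comparing previews.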

What Veo means for the industry (and creators)

Veo’s arrival is both disruptive and enabling. For small creators and marketers it lowers production cost and time to market for short video — a powerful advantage in attention-driven social ecosystems. For agencies and studios, Veo accelerates ideation and previsualization. For platforms and regulators, it heightens the urgency of detection, provenance and policy work to limit harms. In short: we’re likely to see a surge of AI-assisted short video content, new creative formats and workflows, and parallel growth in tools and policies to manage authenticity and rights.

The competitive landscape: where Veo sits

Veo is one of several leading video models emerging from big tech and startups. Each model trades off scale, fidelity, speed, and integrated tooling. Google’s edge with Veo is deep integration into its ecosystem (Vertex AI, Gemini, Google AI Studio), native audio generation, and a focus on cinematic quality backed by substantial compute and research investment. That doesn’t mean Veo is the final word, since other players iterate rapidly, but Google’s ecosystem approach makes Veo particularly attractive for enterprises and creators already working within Google Cloud and Gemini environments.

Final thoughts: practical optimism

Veo 3 is a milestone in generative video: it brings native audio, strong cinematic capabilities and broad platform integration that make short-form AI video practical for many real-world use cases. Creators should approach Veo with curiosity and caution: it unlocks creative possibilities and cost savings, but also requires ethical attention and human oversight. As with earlier leaps in AI, the smartest strategy is to combine human craft with Veo’s speed — use the model to scale ideation, then apply human judgment for the final, publishable output.

Sources (selected)

Google DeepMind model page; Google Cloud and Vertex AI docs; Gemini product pages; Google developer blogs announcing Veo and Veo 3; reviews and hands-on writeups (The Verge, Tom’s Guide); and recent reporting on AI-generated video trends.
